Along with other end-of-the-year tasks, I’m installing several different XQuery tools. Not all tools support all extensions, so a variety of tools can be a useful thing.

The README for XQilla-2.3.2 comes close to winning a prize for being terse:

1. Download a source distribution of Xerces-C 3.1.2

2. Build Xerces-C

cd xerces-c-3.1.2/

./configure

make

4. Build XQilla

cd xqilla/

./configure --with-xerces=`pwd`/../xerces-c-3.1.2/

make

A few notes that may help:

Obtain xerces-c-3.1.2 from the Xerces-C++ homepage.

Xerces project homepage. Home of Apache Xerces C++, Apache Xerces2 Java, Apache Xerces Perl, and Apache XML Commons.

On configuring the make file for XQilla:

./configure --with-xerces=`pwd`/../xerces-c-3.1.2/

the README presumes you built xerces-c-3.1.2 in a directory sitting next to the XQilla source (the `pwd`/../ part points at the parent of the XQilla directory). You could do that; just out of habit, I built xerces-c-3.1.2 in a separate directory.

The configuration file for XQilla reads in part:

--with-xerces=DIR Path of Xerces. DIR="/usr/local"

So you could build XQilla with an existing install of xerces-c-3.1.2 if you are so-minded. But if you are that far along, you don’t need these notes.
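If, like me, you built Xerces-C somewhere other than where the README expects, the only thing that changes is the path handed to --with-xerces. Here is a minimal sketch of a sanity check before running configure. The function name xqilla_configure_cmd is my own invention, not part of XQilla, and the check assumes an in-place build leaves the library under src/.libs, which is where the link line later in this post looks for it.

```shell
# Hypothetical helper (not part of XQilla): given a Xerces-C build tree,
# print the configure command to run from the XQilla source directory.
xqilla_configure_cmd() {
    # Resolve the argument to an absolute path; fail if it is not a directory.
    xerces_dir=$(CDPATH= cd -- "$1" 2>/dev/null && pwd) || {
        echo "no such directory: $1" >&2
        return 1
    }
    # Assumption: "make" in the Xerces-C tree left the shared library here.
    if [ ! -e "$xerces_dir/src/.libs/libxerces-c.so" ]; then
        echo "libxerces-c.so not found under $xerces_dir/src/.libs" >&2
        return 1
    fi
    echo "./configure --with-xerces=$xerces_dir"
}
```

Called as xqilla_configure_cmd ../xerces-c-3.1.2 it prints the command with an absolute path, or complains on stderr if Xerces-C has not been built there yet.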

Strictly for my system (your paths will be different), after building xerces-c-3.1.2, I changed directories to XQilla-2.3.2 and typed:

./configure --with-xerces=/home/patrick/working/xerces-c-3.1.2

No error messages, so I am now back at the command prompt and enter make.

Welllll, that was supposed to work!

Here is the error I got:

libtool: link: g++ -O2 -ftemplate-depth-50 -o .libs/xqilla

xqilla-commandline.o

-L/home/patrick/working/xerces-c-3.1.2/src

/home/patrick/working/xerces-c-3.1.2/src/

.libs/libxerces-c.so ./.libs/libxqilla.so -lnsl -lpthread -Wl,-rpath

-Wl,/home/patrick/working/xerces-c-3.1.2/src

/usr/bin/ld: warning: libicuuc.so.55, needed by

/home/patrick/working/xerces-c-3.1.2/src/.libs/libxerces-c.so,

not found (try using -rpath or -rpath-link)

/home/patrick/working/xerces-c-3.1.2/src/.libs/libxerces-c.so:

undefined reference to `uset_close_55'

/home/patrick/working/xerces-c-3.1.2/src/.libs/libxerces-c.so:

undefined reference to `ucnv_fromUnicode_55'

...[omitted numerous undefined references]...

collect2: error: ld returned 1 exit status

make[1]: *** [xqilla] Error 1

make[1]: Leaving directory `/home/patrick/working/XQilla-2.3.2'

make: *** [all-recursive] Error 1

To help you avoid surfing the web to track down this issue, realize that Ubuntu doesn’t use the latest releases. Of anything as far as I can tell.

The bottom line being that Ubuntu 14.04 doesn’t have libicuuc.so.55.
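If you hit a similar wall of undefined references, the ld warning near the top is the line that matters. A rough sketch for fishing the missing library names out of the build output follows. The function name missing_libs is hypothetical, and it assumes each warning arrives on a single line as ld prints it (the error above is wrapped for display).

```shell
# Hypothetical helper: read build output on stdin and print each shared
# library that ld reports as "needed by ... not found", one per line.
missing_libs() {
    # Keep only the library name between "warning: " and ", needed by".
    sed -n 's/.*warning: \([^ ,]*\), needed by .*/\1/p' | sort -u
}

# Typical use from the XQilla source directory:
#   make 2>&1 | missing_libs
```

On my build this would report libicuuc.so.55. Running ldconfig -p and searching its output for libicuuc then shows which ICU versions, if any, the system already knows about.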

If I manually upgrade libraries, I might create an inconsistency package management tools can’t fix. And break working tools. Bad joss!


Fear Not! There is a solution, which I will cover in my next XQilla-2.3.2 post!

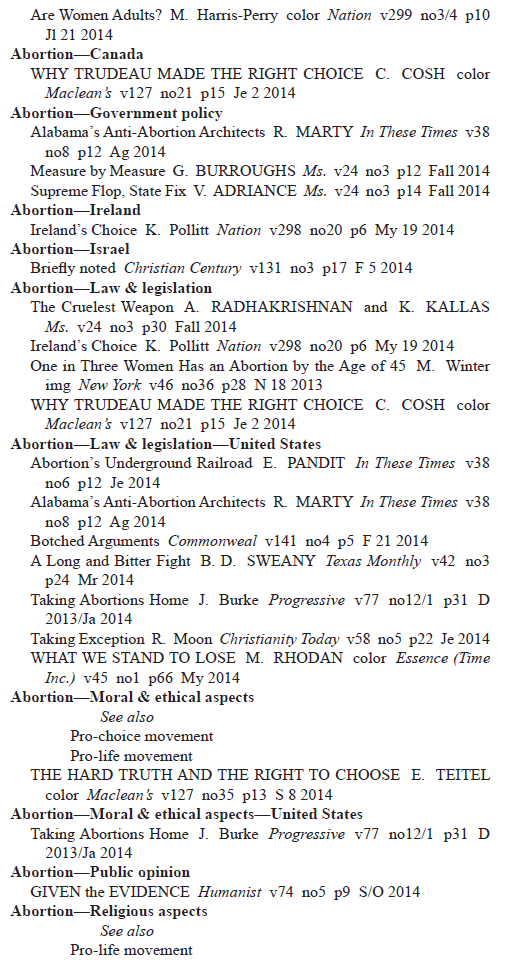

PS: I didn’t get back to the sorting post in time to finish it today. Not to mention that I encountered another nasty list in Most Vulnerable Software of 2015! (Perils of Interpretation!, Advice for 2016).

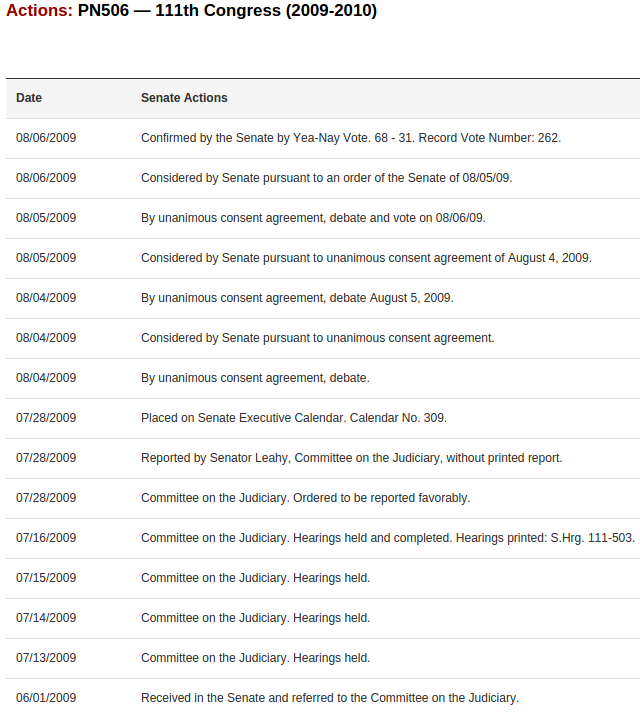

I say “nasty,” you should see some of the lists you can find at Congress.gov. Valid XML I’ll concede but not as useful as they could be.

Improving online lists, combining them with other data, etc., are some of the things I want to cover this coming year.
