Partial Information Attacks on Real-world AI

From the post:

We’ve developed a query-efficient approach for finding adversarial examples for black-box machine learning classifiers. We can even produce adversarial examples in the partial information black-box setting, where the attacker only gets access to “scores” for a small number of likely classes, as is the case with commercial services such as Google Cloud Vision (GCV).

The post is a quick read (est. 2 minutes) with references but you really need to see:

Query-efficient Black-box Adversarial Examples by Andrew Ilyas, Logan Engstrom, Anish Athalye, Jessy Lin.

Abstract:

Current neural network-based image classifiers are susceptible to adversarial examples, even in the black-box setting, where the attacker is limited to query access without access to gradients. Previous methods — substitute networks and coordinate-based finite-difference methods — are either unreliable or query-inefficient, making these methods impractical for certain problems.

We introduce a new method for reliably generating adversarial examples under more restricted, practical black-box threat models. First, we apply natural evolution strategies to perform black-box attacks using two to three orders of magnitude fewer queries than previous methods. Second, we introduce a new algorithm to perform targeted adversarial attacks in the partial-information setting, where the attacker only has access to a limited number of target classes. Using these techniques, we successfully perform the first targeted adversarial attack against a commercially deployed machine learning system, the Google Cloud Vision API, in the partial information setting.

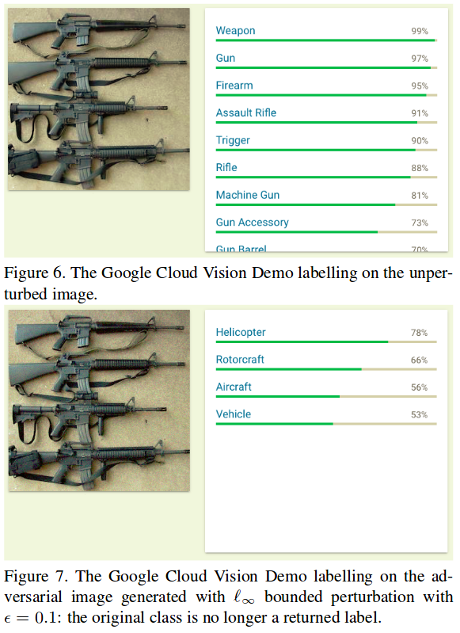

The paper contains this example:

How does it go? Seeing is believing!

Defeating image classifiers will be an exploding market for jewel merchants, bankers, diplomats, and others with reasons to avoid being captured by modern image classification systems.