Spatial Module in OrientDB 2.2

From the post:

In versions prior to 2.2, OrientDB had minimal support for storing and retrieving GeoSpatial data. The support was limited to a pair of coordinates (latitude, longitude) stored as double in an OrientDB class, with the possibility to create a spatial index against those 2 coordinates in order to speed up a geo spatial query. So the support was limited to Point.

In OrientDB v.2.2 we created a brand new Spatial Module with support for different types of Geometry objects stored as embedded objects in a user-defined class:

- Point (OPoint)

- Line (OLine)

- Polygon (OPolygon)

- MultiPoint (OMultiPoint)

- MultiLine (OMultiline)

- MultiPolygon (OMultiPolygon)

- Geometry Collections

Along with those data types, the module extends OrientDB SQL with a subset of SQL-MM functions in order to support spatial data. The module only supports EPSG:4326 as its Spatial Reference System. This blog post is an introduction to the OrientDB Spatial Module, with some examples of its new capabilities. You can find the installation guide here.
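To make the SQL-MM idea concrete, here is a minimal Python sketch that only builds query strings; it does not connect to a server. The class name `Points`, the property name `location`, and the helper names are hypothetical, and the use of `ST_Within` and `ST_GeomFromText` assumes they are among the SQL-MM functions the module exposes, so check the module documentation before relying on the exact syntax.

```python
# Sketch only: builds OrientDB SQL strings, no database connection involved.
# Assumptions (not from the post): a class "Points" with a geometry property
# "location", and that ST_Within / ST_GeomFromText are supported functions.

def point_wkt(lon, lat):
    """WKT for an EPSG:4326 point. WKT lists x (longitude) before y (latitude)."""
    if not (-180.0 <= lon <= 180.0 and -90.0 <= lat <= 90.0):
        raise ValueError("coordinates outside EPSG:4326 bounds")
    return f"POINT ({lon} {lat})"


def bbox_wkt(min_lon, min_lat, max_lon, max_lat):
    """Rectangular POLYGON in WKT; the ring is closed by repeating the first vertex."""
    ring = [
        (min_lon, min_lat),
        (max_lon, min_lat),
        (max_lon, max_lat),
        (min_lon, max_lat),
        (min_lon, min_lat),
    ]
    coords = ", ".join(f"{x} {y}" for x, y in ring)
    return f"POLYGON (({coords}))"


def within_query(class_name, field, polygon_wkt):
    """Hypothetical helper: select records whose geometry lies inside the polygon."""
    return (
        f"SELECT FROM {class_name} "
        f"WHERE ST_Within({field}, ST_GeomFromText('{polygon_wkt}')) = true"
    )


if __name__ == "__main__":
    # A rough bounding box around Rome, for illustration only.
    print(within_query("Points", "location", bbox_wkt(12, 41, 13, 42)))
```

The helpers interpolate values directly into the SQL text, which is fine for a sketch with trusted numbers but not for untrusted input; a real client should use parameterized queries.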

Let’s start by loading some data into OrientDB. The dataset is about points of interest in Italy taken from here. Since the format is ShapeFile, we used QGIS to export the dataset to CSV (with the geometry in WKT format) and then imported the CSV into OrientDB with the ETL tool. The target class is Points, and its geometry field is of type OPoint.
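The post uses OrientDB's ETL for the import. As a rough illustration of what that step has to do, the Python sketch below reads a CSV whose geometry column holds WKT points and turns each row into a record with an embedded point. The column name `WKT`, the field name `location`, the sample row, and the `{"@class": "OPoint", "coordinates": [lon, lat]}` shape are all assumptions made for the example, not details taken from the post.

```python
import csv
import io
import re

# Matches "POINT (x y)" or "POINT(x y)" with plain decimal coordinates.
_POINT_RE = re.compile(
    r"^\s*POINT\s*\(\s*(-?\d+(?:\.\d+)?)\s+(-?\d+(?:\.\d+)?)\s*\)\s*$",
    re.IGNORECASE,
)


def parse_point(wkt):
    """Return (lon, lat) from a WKT POINT string, or raise ValueError."""
    match = _POINT_RE.match(wkt)
    if match is None:
        raise ValueError(f"not a WKT point: {wkt!r}")
    return float(match.group(1)), float(match.group(2))


def read_points(csv_text, wkt_column="WKT"):
    """Convert CSV rows into dicts carrying an embedded point (assumed shape)."""
    records = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        lon, lat = parse_point(row.pop(wkt_column))
        # Assumed embedded-document layout for an OPoint: [longitude, latitude].
        row["location"] = {"@class": "OPoint", "coordinates": [lon, lat]}
        records.append(row)
    return records


# Made-up sample row, for illustration only.
SAMPLE_CSV = "WKT,name\nPOINT (12.4922 41.8902),Colosseo\n"

if __name__ == "__main__":
    for record in read_points(SAMPLE_CSV):
        print(record)
```

Note the coordinate order: WKT puts longitude (x) first, which is the reverse of the "latitude, longitude" pair most people say aloud.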

…

The enhanced spatial functions for OrientDB 2.2 reminded me of this passage in “Silences and Secrecy: The Hidden Agenda of Cartography in Early Modern Europe”:

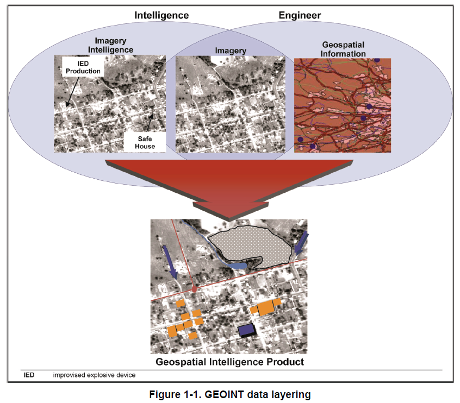

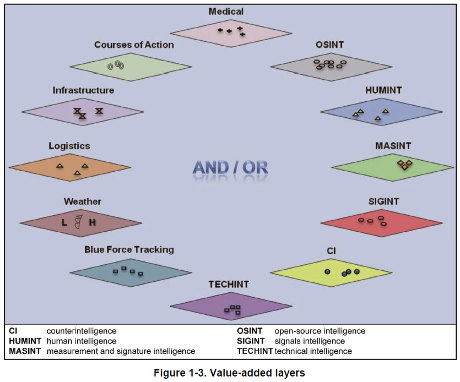

Some of the most clear-cut cases of an increasing state concern with control and restriction of map knowledge are associated with military or strategic considerations. In Europe in the sixteenth and seventeenth centuries hardly a year passed without some war being fought. Maps were an object of military intelligence; statesmen and princes collected maps to plan, or, later, to commemorate battles; military textbooks advocated the use of maps. Strategic reasons for keeping map knowledge a secret included the need for confidentiality about the offensive and defensive operations of state armies, the wish to disguise the thrust of external colonization, and the need to stifle opposition within domestic populations when developing administrative and judicial systems as well as the more obvious need to conceal detailed knowledge about fortifications. (reprinted in: The New Nature of Maps: Essays in the History of Cartography, by J.B. Harley, edited by Paul Laxton, Johns Hopkins, 2001, page 89)

I say “reminded me,” but it would be better to say it increased my puzzlement over the widespread access to geographic data that once had military value.

Is it the case that “ordinary maps,” maps of streets, restaurants, hotels, etc., aren’t normally imbued (merged?) with enough other information to make them “dangerous?”

If that’s true, the lack of commonly available “dangerous maps” is a disadvantage to emergency and security planners.

You can’t plan for the unknown.

Or, to paraphrase Dilbert: “Ignorance is not a reliable planning guide.”

How would you cure the ignorance of “ordinary” maps?

PS: While hunting for the quote, I ran across The Power of Maps by Denis Wood with John Fels, which has been updated as Rethinking the Power of Maps by Denis Wood with John Fels and John Krygier. I am now re-reading the first edition and waiting for the updated version to arrive.

Neither book is a guide to making “dangerous” maps but may awaken in you a sense of the power of maps and map making.