Luke Anderson’s post at Clickhole, titled Humanity Could Totally Pull Off The Tower Of Babel At This Point, was a strong reminder of the Internet of Things (IoT).

See what you think:

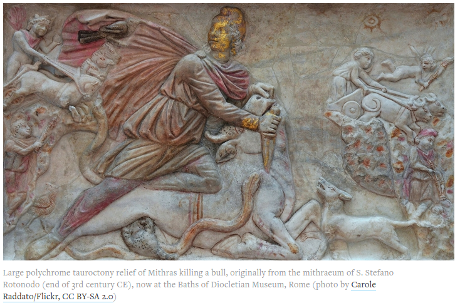

If you went to Sunday school, you know the story: After the Biblical flood, the people of earth came together to build the mighty Tower of Babel. Speaking with one language and working tirelessly, they built a tower so tall that God Himself felt threatened by it. So, He fractured their language so that they couldn’t understand each other, construction ceased, and mankind spread out across the ancient world.

We’ve come a long way in the few millennia since then, and at this point, humanity could totally pull off the Tower of Babel.

Just look at the feats of human engineering we’ve accomplished since then: the Great Wall; the Golden Gate Bridge; the Burj Khalifa. And don’t even get me started on the International Space Station. Building a single tall building? It’d be a piece of cake.

Think about it. Right off the bat, we’d be able to communicate with each other, no problem. Besides most of the world speaking either English, Spanish, and/or Chinese by now, we’ve got translators, Rosetta Stone, Duolingo, the whole nine yards. Hell, IKEA instructions don’t even have words and we have no problem putting their stuff together. I can see how a guy working next to you suddenly speaking Arabic would throw you for a loop a few centuries ago. But now, I bet we could be topping off the tower and storming heaven in the time it took people of the past to say “Hey, how ya doing?”

…

Compare this Internet of Things statement from the Masters of Contracts that Yield No Useful Result:

…

IoT implementation, at its core, is the integration of dozens and up to tens of thousands of devices seamlessly communicating with each other, exchanging information and commands, and revealing insights. However, when devices have different usage scenarios and operating requirements that aren’t compatible with other devices, the system can break down. The ability to integrate different elements or nodes within broader systems, or bringing data together to drive insights and improve operations, becomes more complicated and costly. When this occurs, IoT can’t reach its potential, and rather than an Internet of everything, you see siloed Internets of some things.

…

The first, in case you can’t tell from it being posted at Clickhole, was meant as sarcasm or humor.

The second was deadly serious from folks who would put a permanent siphon on your bank account. Whether their services are cost effective or not is up to you to judge.

The Tower of Babel is a statement about semantics and the human condition. It should come as no surprise that we all prefer our language over that of others, whether those are natural or programming languages. Moreover, judging from code reuse, to say nothing of the publishing market, we prefer our restatements of the material, despite equally useful statements by others.

How else would you explain the proliferation of MS Excel books? One really good one is more than enough. Ditto for Bible translations.

Creating new languages to “fix” semantic diversity just adds another partially adopted language to the welter of languages that need to be integrated.

The better option, at least from my point of view, is to create mappings between languages, mappings that are based on key/value pairs to enable others to build upon, contract or expand those mappings.

It simply isn’t possible to foresee every use case or language that needs semantic integration, but if we perform only as much semantic integration as returns a positive ROI for us, then we can leave the next extension or contraction of that mapping to the next person with a different ROI.
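To make the idea of key/value mappings concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the vocabulary names (`vendor_a`, `vendor_b`, `vendor_c`), the terms, and the helper functions `translate`, `extend` and `contract` are hypothetical, not anyone's actual API. Each entry is a set of key/value pairs naming the same subject in different vocabularies; the next person can add pairs or drop them without disturbing the original.

```python
from typing import Optional

# One entry = one subject, named by key/value pairs (vocabulary -> term).
# All vocabularies and terms here are hypothetical examples.
Entry = dict[str, str]


def translate(entries: list[Entry], term: str, source: str, target: str) -> Optional[str]:
    """Find the entry whose `source` vocabulary uses `term`; return its `target` term."""
    for entry in entries:
        if entry.get(source) == term:
            return entry.get(target)
    return None


def extend(entries: list[Entry], match_key: str, match_value: str, **new_pairs: str) -> list[Entry]:
    """Return a copy in which the matching entry gains extra key/value pairs."""
    return [
        {**entry, **new_pairs} if entry.get(match_key) == match_value else dict(entry)
        for entry in entries
    ]


def contract(entries: list[Entry], *keys: str) -> list[Entry]:
    """Return a copy with the given vocabularies dropped from every entry."""
    return [{k: v for k, v in entry.items() if k not in keys} for entry in entries]


# The mapping that returned ROI for us.
mapping = [
    {"vendor_a": "temp_c", "vendor_b": "temperatureCelsius"},
    {"vendor_a": "hum_pct", "vendor_b": "relativeHumidity"},
]

# The next person, with a different ROI, adds a third vocabulary...
extended = extend(mapping, "vendor_a", "temp_c", vendor_c="T")

# ...and someone else drops a vocabulary they have no use for.
trimmed = contract(extended, "vendor_b")
```

The helpers return copies rather than modifying the mapping in place, so an extension or contraction never breaks whoever still depends on the earlier version.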

It’s heady stuff to think we can cure the problem represented by the legendary Tower of Babel, but there is a name for that. It’s called hubris and it never leads to a good end.
