In Battle to Defang ISIS, U.S. Targets Its Psychology by Eric Schmitt.

From the post:

Maj. Gen. Michael K. Nagata, commander of American Special Operations forces in the Middle East, sought help this summer in solving an urgent problem for the American military: What makes the Islamic State so dangerous?

Trying to decipher this complex enemy — a hybrid terrorist organization and a conventional army — is such a conundrum that General Nagata assembled an unofficial brain trust outside the traditional realms of expertise within the Pentagon, State Department and intelligence agencies, in search of fresh ideas and inspiration. Business professors, for example, are examining the Islamic State’s marketing and branding strategies.

“We do not understand the movement, and until we do, we are not going to defeat it,” he said, according to the confidential minutes of a conference call he held with the experts. “We have not defeated the idea. We do not even understand the idea.” (emphasis added)

An honest member of any administration in Washington is so unusual that I wanted to draw your attention to Maj. Gen. Michael K. Nagata.

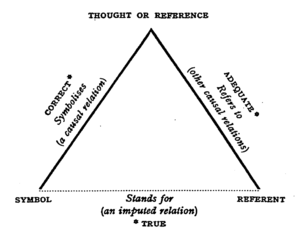

His problem, as you will quickly recognize, is one of a diversity of semantics. What is heard one way by a Western audience is heard completely differently by an audience with a different tradition.

The general may not think of it as “progress,” but getting Washington policy makers to acknowledge that there is a legitimate semantic gap between Western policy makers and IS is a huge first step. It can’t be grudging or half-hearted. Western policy makers have to acknowledge that there are honest views of the world that are different from their own. IS isn’t practicing dishonesty or deception, or perversely refusing to acknowledge the truth of Western statements. Members of IS have an honest but different semantic view of the world.

If the good general can get policy makers to take that step, then and only then can the discussion begin of what that “other” semantic is and how to map it into terms comprehensible to Western policy makers. If that step isn’t taken, then the resources necessary to explore and map that “other” semantic are never going to be allocated. And even if allocated, the results will never figure into policy making with regard to IS.

Failing on any of those three points (failing to concede the legitimacy of the IS semantic, failing to allocate resources to explore and understand the IS semantic, or failing to incorporate an understanding of the IS semantic into policy making) is going to result in a failure to “defeat” IS, if that remains a goal after understanding its semantic.

Need an example? Consider the Vietnam War, in which approximately 58,220 Americans and millions of Vietnamese, Laotians and Cambodians died, not counting long-term injuries among all of the aforementioned. In case you have not heard, the United States lost the Vietnam War.

The reasons for that loss are wide and varied but let me suggest two semantic differences that may have played a role in that defeat. First, the Vietnamese have a long-term view of repelling foreign invaders. Consider that Vietnam was occupied by the Chinese from 111 BCE until 938 CE, a period of more than one thousand (1,000) years. American war planners had a war semantic of planning for the next presidential election, a horizon of four years, not a winning strategy for a foe with a semantic that was two hundred and fifty (250) times longer.

The other semantic difference (among many others) was the understanding of “democracy,” which is usually heralded by American policy makers as a grand prize resulting from American involvement. In Vietnam, however, the villages and hamlets already had what some would consider democracy for centuries. (Beyond Hanoi: Local Government in Vietnam) Different semantic for “democracy” to be sure but one that was left unexplored in the haste to import a U.S. semantic of the concept.

Fighting a war where you don’t understand the semantics in play for the “other” side is risky business.

General Nagata has taken the first step towards such an understanding by admitting that he and his advisors don’t understand the semantics of IS. The next step should be to find someone who does. May I suggest talking to members of IS under informal meeting arrangements, such that diplomatic protocols and news reporting don’t interfere with honest conversations? I suspect IS members are as ignorant of U.S. semantics as U.S. planners are of IS semantics, so there would be some benefit for all concerned.

Such meetings would yield more accurate understandings than those of U.S.-born analysts who live in upper middle-class Western enclaves and attempt to project themselves into foreign cultures. The understanding derived from such meetings could well contradict current U.S. policy assessments and objectives. Whether any administration has the political will to act upon assessments that aren’t the product of a shared post-Enlightenment semantic remains to be seen. But such assessments must be obtained first to answer that question.

Would topic maps help in such an endeavor? Perhaps, perhaps not. The most critical aspect of such a project would be conceding, for all purposes, the legitimacy of the “other” semantic, where “other” depends on what side you are on. That is a topic map “state of mind” as it were, where all semantics are treated equally and no one semantic is more legitimate than any other.

PS: A litmus test for Major General Michael K. Nagata to use in assembling a team to attempt to understand IS semantics: Have each applicant write their description of the 9/11 hijackers in thirty (30) words or less. Any applicant who uses any variant of coward, extremist, terrorist, fanatic, etc. should be wished well and sent on their way. Not a judgement on their fitness for other tasks but they are not going to be able to bridge the semantic gap between current U.S. thinking and that of IS.

The CIA has a report on some of the gaps but I don’t know if it will be easier for General Nagata to ask the CIA for a copy or to just find a copy on the Internet. It illustrates, for example, why the American strategy of killing IS leadership is non-productive if not counter-productive.

If you have the means, please forward this post to General Nagata’s attention. I wasn’t able to easily find a direct means of contacting him.

Diamonds, other valuable commodities, foreign deposit arrangements can be had.
