GraphDBLP: a system for analysing networks of computer scientists through graph databases by Mario Mezzanzanica, et al.

Abstract:

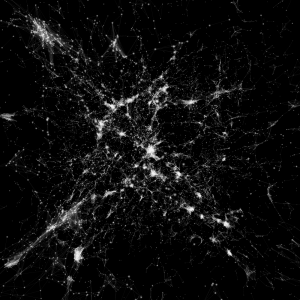

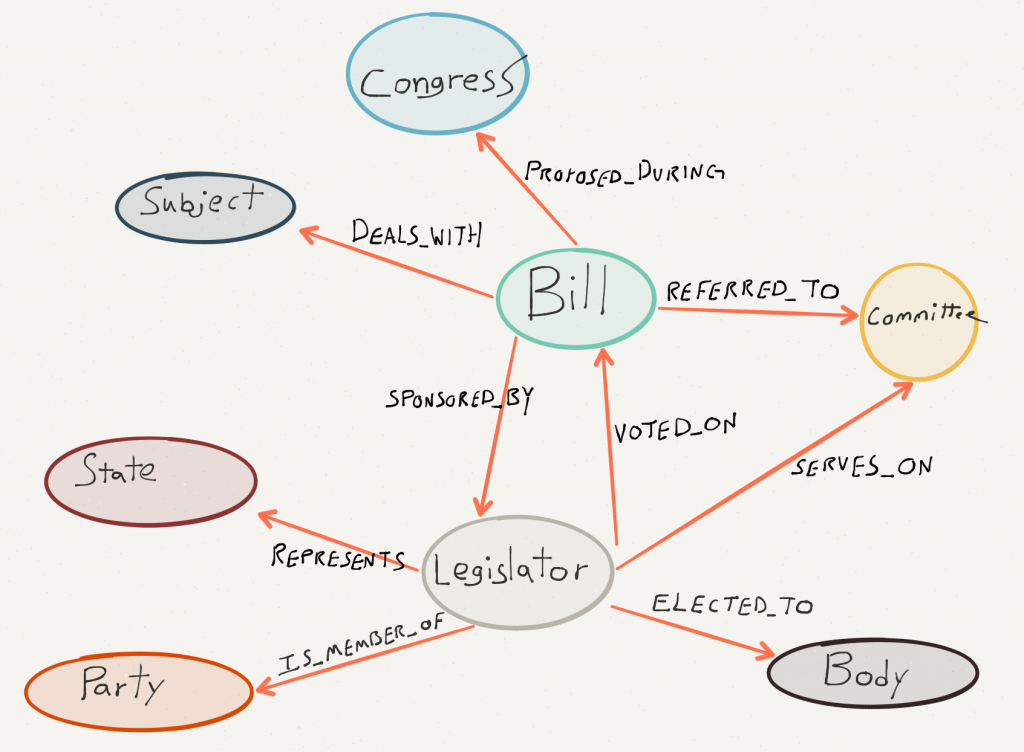

This paper presents GraphDBLP, a system that models the DBLP bibliography as a graph database for performing graph-based queries and social network analyses. GraphDBLP also enriches the DBLP data through semantic keyword similarities computed via word-embedding. In this paper, we discuss how the system was formalized as a multi-graph, and how similarity relations were identified through word2vec. We also provide three meaningful queries for exploring the DBLP community to (i) investigate author profiles by analysing their publication records; (ii) identify the most prolific authors on a given topic, and (iii) perform social network analyses over the whole community. To date, GraphDBLP contains 5+ million nodes and 24+ million relationships, enabling users to explore the DBLP data by referencing more than 3.3 million publications, 1.7 million authors, and more than 5 thousand publication venues. Through the use of word-embedding, more than 7.5 thousand keywords and related similarity values were collected. GraphDBLP was implemented on top of the Neo4j graph database. The whole dataset and the source code are publicly available to foster the improvement of GraphDBLP in the whole computer science community.

Although the article is behind a paywall, GraphDBLP as a tool is not! See: https://github.com/fabiomercorio/GraphDBLP

From the webpage:

GraphDBLP is a tool that models the DBLP bibliography as a graph database for performing graph-based queries and social network analyses.

GraphDBLP also enriches the DBLP data through semantic keyword similarities computed via word-embedding.

GraphDBLP provides to users three meaningful queries for exploring the DBLP community:

- investigate author profiles by analysing their publication records;

- identify the most prolific authors on a given topic;

- perform social network analyses over the whole community;

- perform shortest-paths over DBLP (e.g., the shortest-path between authors, the analysis of co-author networks, etc.)

… (emphasis in original)
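To make two of those queries concrete, here is a minimal Python sketch of "most prolific authors on a topic" and "shortest path between authors" over a tiny in-memory co-authorship graph. It is not GraphDBLP's code, schema, or Cypher: the records, the author names, and the helper functions `prolific_authors`, `coauthor_graph`, and `shortest_path` are all hypothetical, and the path search is a plain breadth-first search rather than anything Neo4j does internally.

```python
from collections import Counter, defaultdict, deque

# Hypothetical toy records for illustration only; not taken from DBLP or GraphDBLP.
PUBLICATIONS = [
    {"title": "Paper A", "authors": ["Ada", "Bob"], "keywords": ["graph databases"]},
    {"title": "Paper B", "authors": ["Bob", "Cy"], "keywords": ["word embedding"]},
    {"title": "Paper C", "authors": ["Cy", "Dee"], "keywords": ["graph databases"]},
    {"title": "Paper D", "authors": ["Ada"], "keywords": ["graph databases"]},
]


def prolific_authors(publications, keyword):
    """Count publications per author among those tagged with `keyword`."""
    counts = Counter()
    for pub in publications:
        if keyword in pub["keywords"]:
            counts.update(pub["authors"])
    return counts.most_common()


def coauthor_graph(publications):
    """Build an undirected graph linking authors who share a publication."""
    graph = defaultdict(set)
    for pub in publications:
        for author in pub["authors"]:
            graph[author].update(a for a in pub["authors"] if a != author)
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns a list of authors, or None if unreachable."""
    if start == goal:
        return [start]
    previous = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbour in graph.get(current, ()):
            if neighbour in previous:
                continue
            previous[neighbour] = current
            if neighbour == goal:
                # Walk the predecessor links back to the start.
                path = [goal]
                while previous[path[-1]] is not None:
                    path.append(previous[path[-1]])
                return path[::-1]
            queue.append(neighbour)
    return None


if __name__ == "__main__":
    print(prolific_authors(PUBLICATIONS, "graph databases"))
    print(shortest_path(coauthor_graph(PUBLICATIONS), "Ada", "Dee"))
```

Note that every author, keyword, and title in the sketch is itself just a flat string, which is exactly the default discussed next.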

Sorry to see author, title, venue, publication, keyword all as flat strings but that’s not uncommon. Disappointing but not uncommon.

Any view of these flat strings as parts of structured representatives will have to be added on top of this default.

Not to minimize the importance of improving the usefulness of DBLP, but imagine integrating GraphDBLP into your local library system without a massive data mapping project. That’s what lies just beyond the reach of this data project.