Infinite Dimensional Word Embeddings by Eric Nalisnick and Sachin Ravi.

Abstract:

We describe a method for learning word embeddings with stochastic dimensionality. Our Infinite Skip-Gram (iSG) model specifies an energy-based joint distribution over a word vector, a context vector, and their dimensionality, which can be defined over a countably infinite domain by employing the same techniques used to make the Infinite Restricted Boltzmann Machine (Côté & Larochelle, 2015) tractable. We find that the distribution over embedding dimensionality for a given word is highly interpretable and leads to an elegant probabilistic mechanism for word sense induction. We show qualitatively and quantitatively that the iSG produces parameter-efficient representations that are robust to language’s inherent ambiguity.

Even better from the introduction:

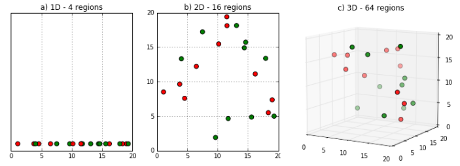

To better capture the semantic variability of words, we propose a novel embedding method that produces vectors with stochastic dimensionality. By employing the same mathematical tools that allow the definition of an Infinite Restricted Boltzmann Machine (Côté & Larochelle, 2015), we describe a log-bilinear energy-based model–called the Infinite Skip-Gram (iSG) model–that defines a joint distribution over a word vector, a context vector, and their dimensionality, which has a countably infinite domain. During training, the iSGM allows word representations to grow naturally based on how well they can predict their context. This behavior enables the vectors of specific words to use few dimensions and the vectors of vague words to elongate as needed. Manual and experimental analysis reveals this dynamic representation elegantly captures specificity, polysemy, and homonymy without explicit definition of such concepts within the model. As far as we are aware, this is the first word embedding method that allows representation dimensionality to be variable and exhibit data-dependent growth.
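To make "a distribution over dimensionality" concrete, here is a rough sketch of my own, not the authors' code. It assumes a log-bilinear energy in which each dimension contributes its word/context product minus a constant per-dimension `penalty`, and it truncates the countably infinite domain to the length of the vectors. The function name `dimension_posterior`, the `penalty` parameter, and the exact form of the energy are all hypothetical choices made for illustration; the paper's actual energy function may differ.

```python
import numpy as np

def dimension_posterior(w, c, penalty=1.0):
    """Return p(z | w, c) for z = 1..len(w) under an illustrative energy.

    Assumed (hypothetical) energy: E(w, c, z) = sum_{i<=z} (penalty - w_i * c_i).
    The infinite domain is truncated to len(w) so the example stays finite.
    """
    w = np.asarray(w, dtype=float)
    c = np.asarray(c, dtype=float)
    # Cumulative energy: entry z-1 holds E(w, c, z) for the first z dimensions.
    energy = np.cumsum(penalty - w * c)
    # Softmax over negative energies, shifted by the max for numerical stability.
    logits = -energy
    logits = logits - logits.max()
    probs = np.exp(logits)
    return probs / probs.sum()

# Two informative dimensions followed by two nearly useless ones.
w = [2.0, 2.0, 0.1, 0.1]
c = [1.0, 1.0, 0.1, 0.1]
probs = dimension_posterior(w, c)
best_z = int(np.argmax(probs)) + 1  # dimensions are counted from 1
```

Under these assumptions, a dimension earns its keep only when its word/context product outweighs the penalty, so the probability mass settles on the number of dimensions that actually help predict the context.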

Imagine a topic map model that “allow[ed] representation dimensionality to be variable and exhibit data-dependent growth.”

Simple subjects, say the sort you find at schema.org, can have simple representations.

A more complex subject, say the notion of “person” in U.S. statutory law (no, I won’t attempt to list the definitions here), can extend its dimensional representation as far as necessary.

Of course, in this case the dimensions are learned from a corpus, but I don’t see any barrier to the intentional creation of dimensions for subjects, or to a combined automatic/directed creation of dimensions.

Or as I put it in the title, Death to All Triples.

More precisely, not just triples but any pre-determined limit on representation.

Looking forward to giving this article, and those it cites, a slow read. Very promising.