Nvidia reports in Modeling Cities in 3D Using Only Image Data:

ETH Zurich scientists leveraged deep learning to automatically stitch together millions of public images and videos into a three-dimensional, living model of the city of Zurich.

The platform, called “VarCity”, combines a variety of image sources: aerial photographs, 360-degree panoramic images taken from vehicles, photos published by tourists on social networks, and video material from YouTube and public webcams.

“The more images and videos the platform can evaluate, the more precise the model becomes,” says Kenneth Vanhoey, a postdoc in the group led by Luc Van Gool, a Professor at ETH Zurich’s Computer Vision Lab. “The aim of our project was to develop the algorithms for such 3D city models, assuming that the volume of available images and videos will also increase dramatically in the years ahead.”

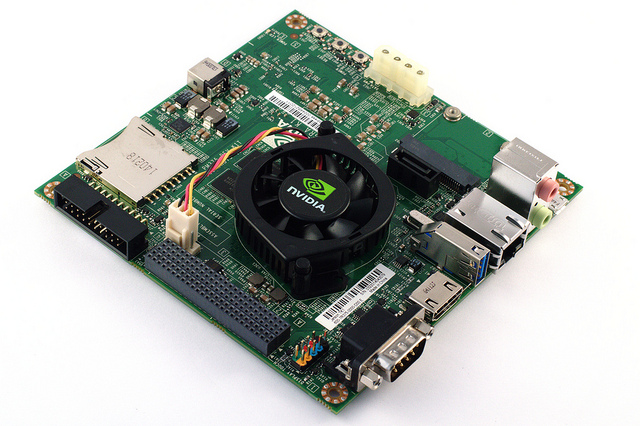

The researchers trained their deep learning models on a cluster of GPUs, including Tesla K40s with cuDNN, and the resulting technology recognizes image content such as buildings, windows and doors, streets, bodies of water, people, and cars. Without human assistance, the 3D model “knows”, for example, what pavements are and, by evaluating webcam data, which streets are one-way only.

…

The data/information gap between nation states and non-state groups grows narrower every day. Here, GPUs and deep learning produce planning data that terrorists could only have dreamed of twenty years ago.

Technical advances make precautions such as:

Federal, state, and local law enforcement let people know that if they take pictures or notes around monuments and critical infrastructure facilities, they could be subject to an interrogation or an arrest; in addition to the See Something, Say Something awareness campaign, DHS also has broader initiatives such as the Buffer Zone Protection Program, which teach local police and security how to spot potential terrorist activities. (DHS focus on suspicious activity at critical infrastructure facilities)

sound old-fashioned and quaint.

Such measures annoy tourists, but unless potential terrorists are as dumb as the underwear bomber, they won't accomplish much against a skilled adversary.

I guess that’s the question, isn’t it?

Are you planning to fight terrorists from the shallow end of the gene pool, or someone a little more challenging?