Norman, World’s first psychopath AI

From the webpage:

…

We present you Norman, world’s first psychopath AI. Norman is born from the fact that the data that is used to teach a machine learning algorithm can significantly influence its behavior. So when people talk about AI algorithms being biased and unfair, the culprit is often not the algorithm itself, but the biased data that was fed to it. The same method can see very different things in an image, even sick things, if trained on the wrong (or, the right!) data set. Norman suffered from extended exposure to the darkest corners of Reddit, and represents a case study on the dangers of Artificial Intelligence gone wrong when biased data is used in machine learning algorithms.

Norman is an AI that is trained to perform image captioning; a popular deep learning method of generating a textual description of an image. We trained Norman on image captions from an infamous subreddit (the name is redacted due to its graphic content) that is dedicated to document and observe the disturbing reality of death. Then, we compared Norman’s responses with a standard image captioning neural network (trained on MSCOCO dataset) on Rorschach inkblots; a test that is used to detect underlying thought disorders.

Note: Due to the ethical concerns, we only introduced bias in terms of image captions from the subreddit which are later matched with randomly generated inkblots (therefore, no image of a real person dying was utilized in this experiment).

…

I have written to the authors to ask for more details about their training process, since the phrase “…which are later matched with randomly generated inkblots…” seems especially opaque to me.

While waiting for that answer, we should ask: is training psychopath AIs cost-effective?

Compare the limited MIT-Norman with the PeopleFuckingDying subreddit and its 690,761 readers.

That’s a single subreddit. Many subreddits count psychopaths among their members, to say nothing of Twitter trolls and the other social media where psychopaths gather.

New psychopaths appear on social media every day, without the ethics-limited training provided to MIT-Norman. Is this really a cost-effective approach to developing psychopaths?

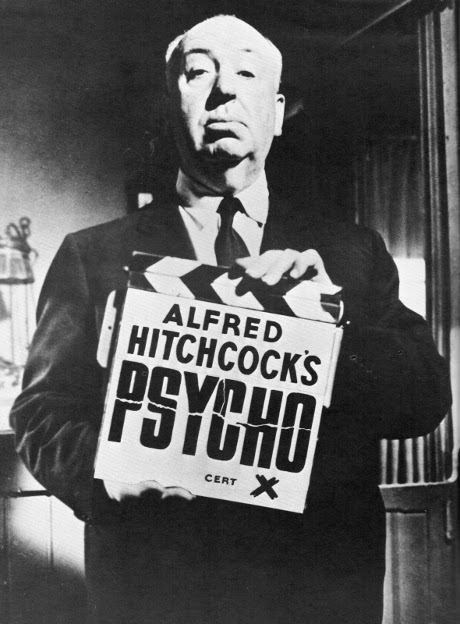

The MIT-Norman project has great graphics, but as Hitchcock demonstrated over and over again, simple scenes can be packed with heart-pounding terror.