I asked on Twitter yesterday:

How can data/computer science disrupt, interfere with, burden, expose elephant hunters and their facilitators? Serious question.

@Pembient pointed to Vulcan’s Domain Awareness System, described in New Tech Gives Rangers Real-Time Tools to Protect Elephants as:

…

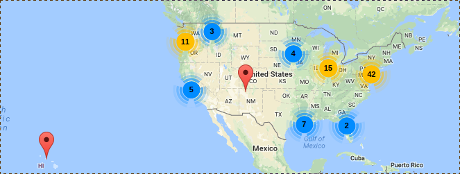

The Domain Awareness System (DAS) is a tool that aggregates the positions of radios, vehicles, aircraft and animal sensors to provide users with a real-time dashboard that depicts the wildlife being protected, the people and resources protecting them, and the potential illegal activity threatening them.

“Accurate data plays a critical role in conservation,” said Paul Allen. “Rangers deserve more than just dedication and good luck. They need to know in real-time what is happening in their parks.”

The visualization and analysis capabilities of DAS allow park managers to make immediate tactical decisions to then efficiently deploy resources for interdiction and active management. “DAS has enabled us to establish a fully integrated approach to our security and anti-poaching work within northern Kenya,” said Mike Watson, chief executive officer of Lewa Conservancy where the first DAS installation was deployed late last year. “This is making us significantly more effective and coordinated and is showing us limitless opportunities for conservation applications.”

The system has been installed at six protected wildlife conservation sites since November 2016. Working with Save the Elephants, African Parks Network, Wildlife Conservation Society, and the Singita Grumeti Fund as well as the Lewa Conservancy and Northern Rangelands Trust, a total of 15 locations are expected to adopt the system this year.

…

Which is great and a project that needs support and expansion.

However, the question remains: having “spotted” poachers, where are the resources to physically safeguard elephants and other targets of poachers?

A second link, also suggested by @Pembient, is Wildlife Works, Wildlife Works Carbon / Kasigau Corridor, Kenya. Another great project, it reminds me of the Shirriffs of the Hobbits, who were distinguished from other Hobbits by a feather they wore in their caps:

…

Physical protection and monitoring – Wildlife Works trained over 120 young people, men and women, from the local communities to be Wildlife Rangers, and they perform daily foot patrols of the forest to ensure that it remains intact. The rangers are unarmed, but have the power of arrest granted by the local community.

…

Environmental monitoring isn’t like confronting poachers, or ordinary elephant hunters for that matter, who travel in packs, armed with automatic weapons, with dubious regard for lives other than their own.

Great programs, having a real impact, that merit your support, but not quite on point to my question of:

How can data/computer science disrupt, interfere with, burden, expose elephant hunters and their facilitators? Serious question.

Poachers must be stopped with police/military force. DAS and similar information systems have the potential to help deploy forces effectively to stop poachers, assuming adequate forces are available. The estimated loss of 100 elephants per day suggests they are not.

Hunters, on the other hand, are protected by law and tradition in their slaughter of adult elephants, who have no natural predators.

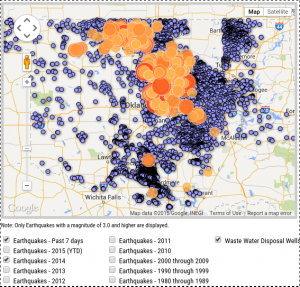

To be clearer, we know the classes of elephant hunters and facilitators exist. How should we go about populating those classes with instances, where each instance has a name, address, employer, website, email, etc.?

And once we have that information, what can be done to acknowledge their past, present or ongoing hunting of elephants? Acknowledge it in such a way as to discourage any further elephant hunting by themselves or anyone who reads about them?

Elephants aren’t killed by anonymous labels such as “elephant hunters,” or “poachers,” but by identifiable, nameable, traceable individuals.

Use data science to identify, name and trace those individuals.