From the webpage:

This gem is a pure Ruby implementation of the HyperLogLog algorithm for estimating cardinalities of sets observed via a stream of events. A Redis instance is used for storing the counters. A minimal example:

    require 'redis'
    require 'hyperloglog-redis'

    counter = HyperLogLog::Counter.new(Redis.new)
    ['john', 'paul', 'george', 'ringo', 'john', 'paul'].each do |beatle|
      counter.add('beatles', beatle)
    end

    puts "There are approximately #{counter.count('beatles')} distinct Beatles"

Each HyperLogLog counter uses a small, fixed amount of space but can estimate the cardinality of any set of up to around a billion values with relative error of 1.04 / Math.sqrt(2 ** b) with high probability, where b is a parameter passed to the HyperLogLog::Counter initializer that defaults to 10. With b = 10, each counter is represented by a 1 KB string in Redis and we get an expected relative error of 3%. Contrast this with the amount of space needed to compute set cardinality exactly, which is over 100 MB even for a bit vector representing a set with a billion values.

The basic idea of HyperLogLog (and its predecessors PCSA, LogLog, and others) is to apply a good hash function to each value observed in the stream and record the longest run of zeros seen as a prefix of any hashed value. If the hash function is good, the bits in any hashed value should be close to statistically independent, so seeing a value that starts with exactly X zeros should happen with probability close to 2 ** -(X + 1). So, if you’ve seen a run of 5 zeros in one of your hash values, you’re likely to have around 2 ** 6 = 64 values in the underlying set. The actual implementation and analysis are much more advanced than this, but that’s the idea.
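To make the error formula and the zero-run intuition above concrete, here is a rough sketch in plain Ruby. It is not taken from the gem: the helper names (relative_error, leading_zeros, crude_estimate) are hypothetical, and using the first 32 bits of an MD5 digest as the hash is an assumption made only because MD5 ships with Ruby.

```ruby
require 'digest'

# Expected relative error for a counter with parameter b,
# using the formula quoted in the text: 1.04 / sqrt(2 ** b).
def relative_error(b)
  1.04 / Math.sqrt(2**b)
end

# Number of leading zero bits in a 32-bit hash of the value.
# Assumption: the hash is the first 32 bits of the MD5 digest.
def leading_zeros(value, bits = 32)
  hash = Digest::MD5.hexdigest(value.to_s)[0, bits / 4].to_i(16)
  bits - hash.bit_length
end

# Crude single-register estimate: if the longest run of leading
# zeros seen is X, guess that about 2 ** (X + 1) distinct values exist.
def crude_estimate(values)
  longest = values.map { |v| leading_zeros(v) }.max
  2**(longest + 1)
end

puts format('b = 10 gives %.2f%% expected relative error', relative_error(10) * 100)
puts "Crude estimate for 1000 distinct values: #{crude_estimate((1..1000).to_a)}"
```

A single register like this is very noisy, since one lucky hash can double or quadruple the estimate. That is why the real algorithm splits the stream across 2 ** b registers and combines them.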

This gem implements a few useful extensions to the basic HyperLogLog algorithm which allow you to estimate unions and intersections of counters as well as counts within specific time ranges. These extensions are described in detail below.

The HyperLogLog algorithm is described and analyzed in the paper “HyperLogLog: the analysis of a near-optimal cardinality estimation algorithm” by Flajolet, Fusy, Gandouet, and Meunier. Our implementation closely follows the program described in Section 4 of that paper.
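For readers who want to see the shape of the algorithm without Redis, the following is a minimal in-memory sketch of the estimator as the paper describes it (register update, harmonic mean, and the small- and large-range corrections). It is an illustration under stated assumptions, not the gem's code: the class name TinyHyperLogLog is hypothetical, the registers live in a Ruby array, and the 32-bit hash is taken from an MD5 digest.

```ruby
require 'digest'

# Hypothetical in-memory counter; not the gem's HyperLogLog::Counter.
class TinyHyperLogLog
  HASH_BITS = 32

  def initialize(b = 10)
    raise ArgumentError, 'b must be between 4 and 16' unless (4..16).cover?(b)

    @b = b
    @m = 2**b                      # number of registers
    @registers = Array.new(@m, 0)
  end

  def add(value)
    # Assumption: first 32 bits of MD5 stand in for a "good hash function".
    hash = Digest::MD5.hexdigest(value.to_s)[0, 8].to_i(16)
    rest_bits = HASH_BITS - @b
    index = hash >> rest_bits                  # first b bits pick the register
    rest = hash & ((1 << rest_bits) - 1)       # remaining bits
    rank = rest_bits - rest.bit_length + 1     # position of the leftmost 1-bit
    @registers[index] = rank if rank > @registers[index]
    self
  end

  def count
    sum = @registers.sum { |r| 2.0**(-r) }
    estimate = alpha * @m * @m / sum
    if estimate <= 2.5 * @m
      # Small-range correction: fall back to linear counting.
      zeros = @registers.count(0)
      estimate = @m * Math.log(@m.to_f / zeros) if zeros > 0
    elsif estimate > (2**32) / 30.0
      # Large-range correction for 32-bit hash collisions.
      estimate = -(2**32) * Math.log(1 - estimate / 2**32)
    end
    estimate.round
  end

  private

  # Bias-correction constant from the paper.
  def alpha
    case @m
    when 16 then 0.673
    when 32 then 0.697
    when 64 then 0.709
    else 0.7213 / (1 + 1.079 / @m)
    end
  end
end

counter = TinyHyperLogLog.new
%w[john paul george ringo john paul].each { |beatle| counter.add(beatle) }
puts "There are approximately #{counter.count} distinct Beatles"
```

Each register here takes a full Ruby integer for simplicity. Packing each register into a single byte would match the 1 KB figure quoted above for b = 10.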

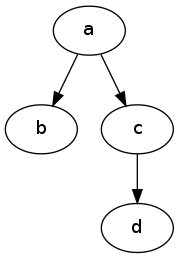

The same paper is mentioned in: Count a billion distinct objects w/ 1.5KB of Memory (Coarsening Graph Traversal). Consult the implementation details there as well.

I first saw this in NoSQL Weekly, Issue 116.