Politics Moves Fast. Peer Review Moves Slow. What’s A Political Scientist To Do? by Maggie Koerth-Baker

From the post:

Politics has a funny way of turning arcane academic debates into something much messier. We’re living in a time when so much in the news cycle feels absurdly urgent and partisan forces are likely to pounce on any piece of empirical data they can find, either to champion it or tear it apart, depending on whether they like the result. That has major implications for many of the ways knowledge enters the public sphere — including how academics publicize their research.

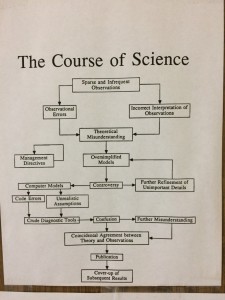

That process has long been dominated by peer review, which is when academic journals put their submissions in front of a panel of researchers to vet the work before publication. But the flaws and limitations of peer review have become more apparent over the past decade or so, and researchers are increasingly publishing their work before other scientists have had a chance to critique it. That’s a shift that matters a lot to scientists, and the public stakes of the debate go way up when the research subject is the 2016 election. There’s a risk, scientists told me, that preliminary research results could end up shaping the very things that research is trying to understand.

…

The legend of peer review catching and correcting flaws has a long history. A legend much tarnished by the Top 10 Retractions of 2017 and similar reports. Retractions are self-admissions of the failure of peer review. By the hundreds.

Withdrawal of papers isn’t the only debunking of peer review. The reports, papers, etc., on the failure of peer review include: “Data fabrication and other reasons for non-random sampling in 5087 randomised, controlled trials in anaesthetic and general medical journals,” Anaesthesia, Carlisle 2017, DOI: 10.1111/anae.13962; “The peer review drugs don’t work” by Richard Smith; “One in 25 papers contains inappropriately duplicated images, screen finds” by Cat Ferguson.

Koerth-Baker’s quoting of Justin Esarey in support of peer review is itself an example of absent or failed peer review at FiveThirtyEight.

…

But, on aggregate, 100 studies that have been peer-reviewed are going to produce higher-quality results than 100 that haven’t been, said Justin Esarey, a political science professor at Rice University who has studied the effects of peer review on social science research. That’s simply because of the standards that are supposed to go along with peer review – clearly reporting a study’s methodology, for instance – and because extra sets of eyes might spot errors the author of a paper overlooked.

…

Koerth-Baker acknowledges the failures of peer review, but since the article is premised upon peer review insulating the public from “bad science,” she brings in Justin Esarey, “…who has studied the effects of peer review on social science research.” One assumes his “studies” are mentioned to imbue his statements with an aura of authority.

Debunking Esarey’s authority to comment on the “…effects of peer review on social science research” doesn’t require much effort. If you scan his list of publications, you will find “Does Peer Review Identify the Best Papers?”, which bears the subtitle “A Simulation Study of Editors, Reviewers, and the Social Science Publication Process.”

Esarey’s comments on the effectiveness of peer review are based not on observed fact but on simulations of peer review systems. Useful work, no doubt, but hardly the confessing witness needed to exonerate peer review in view of its long history of failure.

To save you chasing the Esarey link, the abstract reads:

How does the structure of the peer review process, which can vary from journal to journal, influence the quality of papers published in that journal? In this paper, I study multiple systems of peer review using computational simulation. I find that, under any system I study, a majority of accepted papers will be evaluated by the average reader as not meeting the standards of the journal. Moreover, all systems allow random chance to play a strong role in the acceptance decision. Heterogeneous reviewer and reader standards for scientific quality drive both results. A peer review system with an active editor (who uses desk rejection before review and does not rely strictly on reviewer votes to make decisions) can mitigate some of these effects.

If there were peer reviewers, editors, etc., at FiveThirtyEight, shouldn’t at least one of them have looked beyond the title “Does Peer Review Identify the Best Papers?” to ask Koerth-Baker what evidence Esarey has for his support of peer review? Or is agreement with Koerth-Baker sufficient?

Peer review persists for a number of unsavory reasons: prestige, professional advancement, enforcement of disciplinary ideology, and the pretension of higher-quality publications. Let’s not add a false claim of serving the public.