BehaviorMatrix Issues Groundbreaking Foundational Patent for Advertising Platforms

From the post:

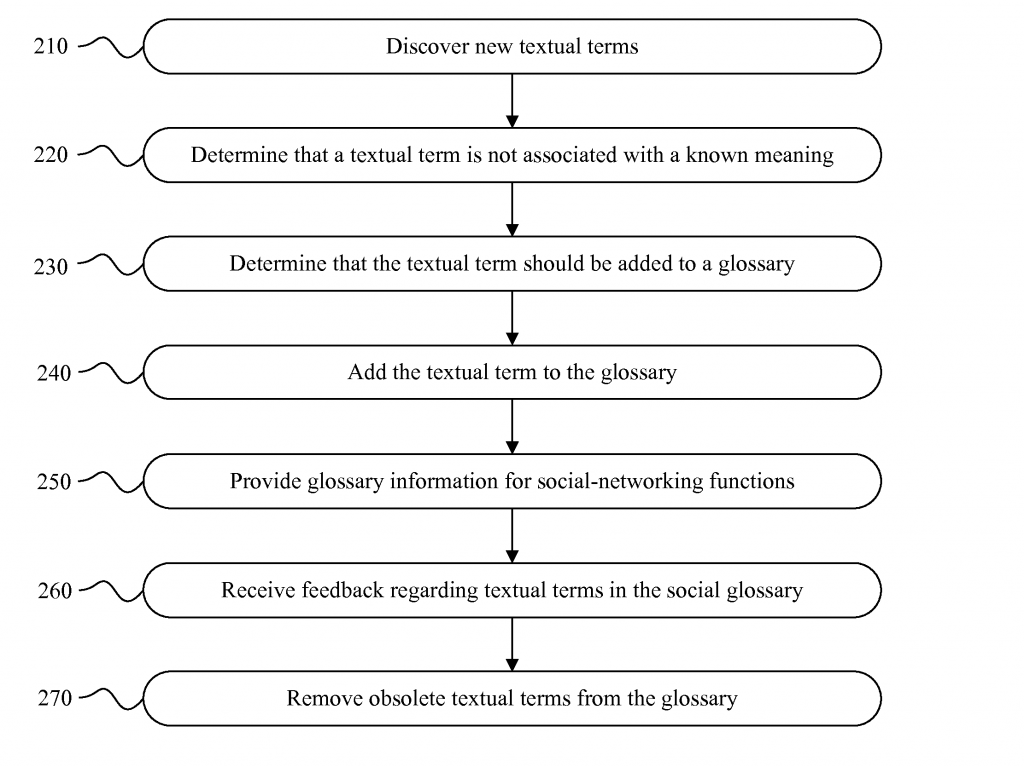

Applied behavior analytics company BehaviorMatrix, LLC, announced today that it was granted a groundbreaking foundational patent by the United States Patent and Trademark Office (USPTO) that establishes a system for classifying, measuring and creating models of the elements that make up human emotions, perceptions and actions leveraged from the Internet and social media. The BehaviorMatrix patent, U.S. patent number 8,639,702, redefines the online advertising platform and social CRM industries by ushering in a new era of assessment and measurement of emotion by digital means. It covers a method for detecting and measuring emotional signals within digital content. Emotion is based on perception – and perception is based on exteroceptive stimuli – what is seen, touched, tasted, heard and smelled.

If you look up U.S. patent number 8,639,702, you will find this background of the invention:

A data element forms the premise on which an inference may be drawn and represents the lowest level of abstraction from which information and then knowledge are derived. In humans, the perception of environment or condition is comprised of data gathered by the senses, i.e., the physiological capacity to provide input for perception. These “senses” are formally referred to as the exteroceptive senses and in humans comprise quantifiable or potential sensory data including, sight, smell, hearing, touch, taste, temperature, pressure, pain, and pleasure, the admixture of which determine the spectrum of human emotion states and resultant behaviors.

Potentials in these senses work independently, or in combination, to produce unique perceptions. For instance, the sense of sight is primarily used to identify a food item, but the flavor of the food item incorporates the senses of both taste and smell.

In biological terms, behavior can generally be regarded as any action of an organism that changes its relationship to its environment. Definable and measurable behaviors are predicated on the association of stimuli within the domain of exteroceptive sensation, to perception, and ultimately, a behavioral outcome.

The ability to determine the exteroceptive association and impact on behavior from data that is not physical but exists only in digital form has profound implications for how data is viewed, both intrinsically and associatively.

An advantage exists, therefore, for a system and method for dynamically associating digital data with values that approximate exteroceptive stimuli potentials, and from those values forecasting probabilistically the likely behavioral response to that data, thereby promoting the ability to design systems and models to predict behavioral outcomes that are inherently more accurate in determining behavioral response. In turn, interfaces and computing devices may be developed that would “expect” certain behaviors, or illicit them through the manipulation of data. Additionally, models could be constructed to classify data not only for the intrinsic value of the data but for the potential behavioral influence inherent in the data as well.

Really? People’s emotions influence their “digital data?” You mean like a person’s emotions influence their speech (language, volume), their body language (tense, pacing), their communications (angry tweets, letters, emails), etc.?

Did you ever imagine such a thing? Emotions being found in digital data?

Have I ever mentioned: Emotional Content of Suicide Notes, Jacob Tuckman; Robert J. Kleiner; Martha Lavell, Am J Psychiatry 1959;116:59-63, to you?

Abstract:

An analysis was made of the emotional content of notes left by 165 suicides in Philadelphia over a 5-year period. Over half the notes showed such positive affect as gratitude, affection, and concern for the welfare of others, while only 24% expressed hostile or negative feelings directed toward themselves or the outside world, and 25% were completely neutral in affect. 2. Persons aged 45 and over showed less affect than those under 45, with a concomitant increase in neutral affect. 3. Persons who were separated or divorced showed more hostility than those single, married, or widowed. 4. It is believed that these findings have certain implications for further understanding of suicide and ultimate steps toward prevention. The recognition that positive or neutral feelings are present in the majority of cases should lead to a more promising outlook in the care and treatment of potential suicides if they can be identified.

That was written in 1959. Do you think it is a non-obvious leap to find emotions in digital data? Just curious.

Of course, that isn’t the only thing claimed for this invention:

capable of detecting one or data elements including, without limitation, temperature, pressure, light, sound, motion, distance and time.

Wow, it can also act as a thermostat.

Feel free to differ, but I think measuring emotion in all forms of communication, including digital data, has been around for a long time.

The filing-fee-motivated U.S. Patent Office is doing a very poor job of keeping the prior art commons free of patents that infringe on it, which costs everyone, except for patent trolls, a lot of time and effort.

Thoughts on a project to defend the prior art commons more generally? How much does the average patent infringement case cost? Win or lose?

Of course, I am thinking about integrating search across the patent database with prior art searches in relevant domains, to load up examiners with detailed reports.
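To make the idea a little more concrete, here is a toy sketch of such a report, not a real system. It assumes you already have a claim's text, a cutoff date, and a pile of candidate documents with publication dates. The `Document` class, the `prior_art_report` function, the cutoff date, the stopword list, and two of the three sample documents are all made up for illustration. Plain term overlap (Jaccard similarity) stands in for whatever real search engine you would use.

```python
import re
from dataclasses import dataclass
from datetime import date

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"a", "an", "and", "for", "in", "of", "the", "to", "within"}


@dataclass
class Document:
    title: str
    published: date
    text: str


def terms(text):
    """Return the set of lowercase words in text, minus stopwords."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def jaccard(a, b):
    """Shared terms divided by all distinct terms in either set."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def prior_art_report(claim, cutoff, corpus, min_score=0.1):
    """Rank documents published before cutoff by term overlap with claim."""
    claim_terms = terms(claim)
    hits = []
    for doc in corpus:
        if doc.published >= cutoff:
            continue  # too late to count as prior art
        score = jaccard(claim_terms, terms(doc.title + " " + doc.text))
        if score >= min_score:
            hits.append((score, doc))
    return sorted(hits, key=lambda pair: pair[0], reverse=True)


# Claim wording taken from the press release quoted above.
claim = "method for detecting and measuring emotional signals within digital content"

corpus = [
    # Only the year 1959 comes from the citation; month and day are placeholders.
    Document("Emotional Content of Suicide Notes", date(1959, 1, 1),
             "analysis of the emotional content of notes"),
    # The next two documents are invented for the example.
    Document("Hypothetical thermostat manual", date(2001, 1, 1),
             "setting temperature and pressure"),
    Document("Hypothetical later paper", date(2020, 1, 1),
             "detecting and measuring emotional signals within digital content"),
]

# Hypothetical cutoff; the patent's actual filing date is not used here.
report = prior_art_report(claim, date(2010, 1, 1), corpus)
for score, doc in report:
    print(f"{score:.2f}  {doc.published.year}  {doc.title}")
```

The date filter is the important part: the later paper matches the claim almost word for word, but it is published after the cutoff, so it never reaches the report. A real version would swap the overlap score for proper full-text search across the patent database and the domain literature.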

Stopping a patent in its tracks avoids more expensive methods later on.

Could lead to a new yearly patent count: Patents Denied.

You know, it’s possible to be so self-centered as to be self-defeating.
