Automata by Jeff Ullman.

From the course description:

Why Study Automata Theory?

This subject is not just for those planning to enter the field of complexity theory, although it is a good place to start if that is your goal. Rather, the course will emphasize those aspects of the theory that people really use in practice. Finite automata, regular expressions, and context-free grammars are ideas that have stood the test of time. They are essential tools for compilers. But more importantly, they are used in many systems that require input that is less general than a full programming language yet more complex than “push this button.”

The concepts of undecidable problems and intractable problems serve a different purpose. Undecidable problems are those for which no computer solution can ever exist, while intractable problems are those for which there is strong evidence that, although they can be solved by a computer, they cannot be solved sufficiently fast that the solution is truly useful in practice. Understanding this theory, and in particular being able to prove that a problem you are facing belongs to one of these classes, allows you to justify taking another approach — simplifying the problem or writing code to approximate the solution, for example.

During the course, I’m going to prove a number of things. The purpose of these proofs is not to torture you or confuse you. Neither are the proofs there because I doubt you would believe me were I merely to state some well-known fact. Rather, understanding how these proofs, especially inductive proofs, work, lets you think more clearly about your own work. I do not advocate proofs that programs are correct, but whenever you attempt something a bit complex, it is good to have in mind the inductive proofs that would be needed to guarantee that what you are doing really works in all cases.

Recommended Background

You should have had a second course in Computer Science — one that covers basic data structures (e.g., lists, trees, hashing), and basic algorithms (e.g., tree traversals, recursive programming, big-oh running time). In addition, a course in discrete mathematics covering propositional logic, graphs, and inductive proofs is valuable background.

If you need to review or learn some of these topics, there is a free on-line textbook Foundations of Computer Science, written by Al Aho and me, available at http://i.stanford.edu/~ullman/focs.html. Recommended chapters include 2 (Recursion and Induction), 3 (Running Time of Programs), 5 (Trees), 6 (Lists), 7 (Sets), 9 (Graphs), and 12 (Propositional Logic). You will also find introductions to finite automata, regular expressions, and context-free grammars in Chapters 10 and 11. Reading Chapter 10 would be good preparation for the first week of the course.

The course includes two programming exercises for which a knowledge of Java is required. However, these exercises are optional. You will receive automated feedback, but the results will not be recorded or used to grade the course. So if you are not familiar with Java, you can still take the course without concern for prerequisites.

All of “Foundations of Computer Science” is worth reading, but these are the chapters relevant to this course:

Chapter 2 Iteration, Induction, and Recursion

Chapter 3 The Running Time of Programs

Chapter 5 The Tree Data Model

Chapter 6 The List Data Model

Chapter 7 The Set Data Model

Chapter 9 The Graph Data Model

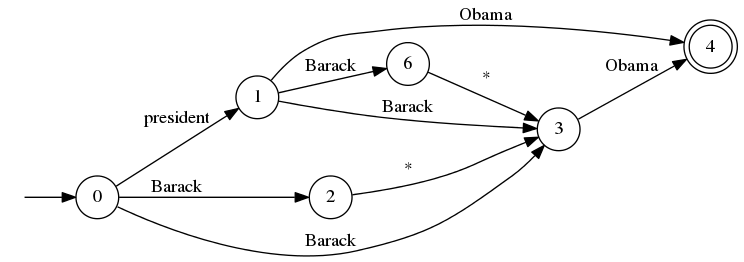

Chapter 10 Patterns, Automata, and Regular Expressions

Chapter 11 Recursive Description of Patterns

Chapter 12 Propositional Logic

Six very intensive weeks, but on the bright side, you will be done before the holiday season.