Paper of the Day (Po’D): Multi-tasking with joint semantic spaces by Bob L. Sturm.

From the post:

Hello, and welcome to the Paper of the Day (Po’D): Multi-tasking with joint semantic spaces edition. Today’s paper is: J. Weston, S. Bengio and P. Hamel, “Multi-tasking with joint semantic spaces for large-scale music annotation and retrieval,” J. New Music Research, vol. 40, no. 4, pp. 337-348, 2011.

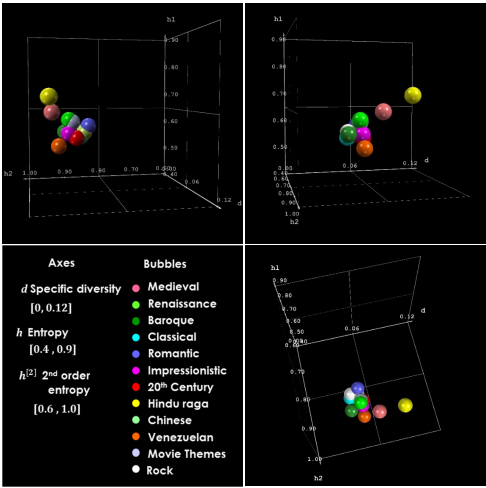

This article proposes and tests a novel approach (pronounced MUSCLES but written MUSLSE) for describing a music signal along multiple directions, including semantically meaningful ones. This work is especially relevant since it applies to problems that remain unsolved, such as artist identification and music recommendation (in fact the first two authors are employees of Google). The method proposed in this article models a song (or a short excerpt of a song) as a triple in three vector spaces learned from a training dataset: one vector space is created from artists, one created from tags, and the last created from features of the audio. The benefit of using vector spaces is that they bring quantitative and well-defined machinery, e.g., projections and distances.

MUSCLES attempts to learn each vector space together so as to preserve (dis)similarity. For instance, vectors mapped from artists that are similar (e.g., Britney Spears and Christina Aguilera) should point in nearly the same direction; while those that are not similar (e.g., Engelbert Humperdinck and The Rubberbandits), should be nearly orthogonal. The same should hold for vectors mapped from tags: those that are semantically close (e.g., “dark” and “moody”) should point in nearly the same direction, and those that are semantically disjoint (e.g., “teenage death song” and “NYC”) should be nearly orthogonal. For features extracted from the audio, one hopes the features themselves are comparable, and are able to reflect some notion of similarity at least at the surface level of the audio. MUSCLES takes this a step further to learn the vector spaces so that one can take inner products between vectors from different spaces, which is definitely a novel concept in music information retrieval.

Bob raises a number of interesting issues but here’s one that bites:

A further problem is that MUSCLES judges similarity by magnitude inner product. In such a case, if “sad” and “happy” point in exact opposite directions, then MUSCLES will say they are highly similar.

Ouch! For all the “precision” of vector spaces, there are non-apparent biases lurking therein.
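To see the objection in miniature, here is a rough Python sketch. The three tags and their 2-D vectors are invented purely for illustration; they are not taken from the paper or from Bob's post, and the helper names (`dot`, `cosine`, `rank`) are my own. It ranks the neighbours of “happy” twice: once by the signed normalized inner product, and once by its magnitude.

```python
import math

def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    """Inner product normalized by the vector lengths (signed, in [-1, 1])."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

# Hypothetical tag embeddings, made up for this example only:
# "sad" points exactly opposite to "happy"; "NYC" is orthogonal to both.
tags = {
    "happy": [1.0, 0.0],
    "sad": [-1.0, 0.0],
    "NYC": [0.0, 1.0],
}

def rank(query, score):
    """Order every other tag from most to least similar to `query`."""
    others = [t for t in tags if t != query]
    return sorted(others, key=lambda t: score(tags[query], tags[t]), reverse=True)

# Signed score: the opposite vector is the LEAST similar.
signed = rank("happy", cosine)

# Magnitude score: the sign is thrown away, so the opposite vector wins.
by_magnitude = rank("happy", lambda u, v: abs(cosine(u, v)))

print("signed:   ", signed)
print("magnitude:", by_magnitude)
```

With the signed score, “sad” lands at the bottom of the list for “happy”; once only the magnitude is kept, it jumps to the top, ahead of the unrelated “NYC”. Whether the actual system behaves this way depends on how it scores matches, so treat this as an illustration of the concern rather than a reproduction of the method.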

For your convenience:

Multi-tasking with joint semantic spaces for large-scale music annotation and retrieval (full text)

Abstract:

Music prediction tasks range from predicting tags given a song or clip of audio, predicting the name of the artist, or predicting related songs given a song, clip, artist name or tag. That is, we are interested in every semantic relationship between the different musical concepts in our database. In realistically sized databases, the number of songs is measured in the hundreds of thousands or more, and the number of artists in the tens of thousands or more, providing a considerable challenge to standard machine learning techniques. In this work, we propose a method that scales to such datasets which attempts to capture the semantic similarities between the database items by modelling audio, artist names, and tags in a single low-dimensional semantic embedding space. This choice of space is learnt by optimizing the set of prediction tasks of interest jointly using multi-task learning. Our single model learnt by training on the joint objective function is shown experimentally to have improved accuracy over training on each task alone. Our method also outperforms the baseline methods tried and, in comparison to them, is faster and consumes less memory. We also demonstrate how our method learns an interpretable model, where the semantic space captures well the similarities of interest.

Just to tempt you into reading the article, consider the following passage:

Artist and song similarity is at the core of most music recommendation or playlist generation systems. However, music similarity measures are subjective, which makes it difficult to rely on ground truth. This makes the evaluation of such systems more complex. This issue is addressed in Berenzweig (2004) and Ellis, Whitman, Berenzweig, and Lawrence (2002). These tasks can be tackled using content-based features or meta-data from human sources. Features commonly used to predict music similarity include audio features, tags and collaborative filtering information.

Meta-data such as tags and collaborative filtering data have the advantage of considering human perception and opinions. These concepts are important to consider when building a music similarity space. However, meta-data suffers from a popularity bias, because a lot of data is available for popular music, but very little information can be found on new or less known artists. In consequence, in systems that rely solely upon meta-data, everything tends to be similar to popular artists. Another problem, known as the cold-start problem, arises with new artists or songs for which no human annotation exists yet. It is then impossible to get a reliable similarity measure, and is thus difficult to correctly recommend new or less known artists.

“…[H]uman perception[?]…” Is there some other form I am unaware of? Some other measure of similarity than our own? Recall that vector spaces are a pale mockery of our more subtle judgments.

Suggestions?