Relational inductive biases, deep learning, and graph networks by Peter W. Battaglia, et al.

Abstract:

Artificial intelligence (AI) has undergone a renaissance recently, making major progress in key domains such as vision, language, control, and decision-making. This has been due, in part, to cheap data and cheap compute resources, which have fit the natural strengths of deep learning. However, many defining characteristics of human intelligence, which developed under much different pressures, remain out of reach for current approaches. In particular, generalizing beyond one’s experiences–a hallmark of human intelligence from infancy–remains a formidable challenge for modern AI.

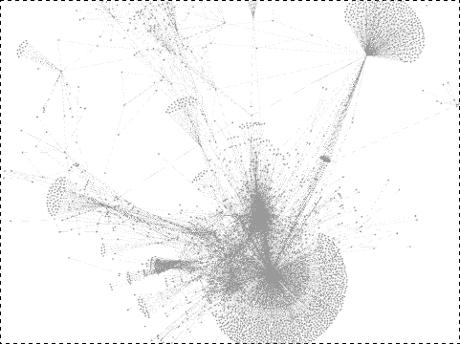

The following is part position paper, part review, and part unification. We argue that combinatorial generalization must be a top priority for AI to achieve human-like abilities, and that structured representations and computations are key to realizing this objective. Just as biology uses nature and nurture cooperatively, we reject the false choice between “hand-engineering” and “end-to-end” learning, and instead advocate for an approach which benefits from their complementary strengths. We explore how using relational inductive biases within deep learning architectures can facilitate learning about entities, relations, and rules for composing them. We present a new building block for the AI toolkit with a strong relational inductive bias–the graph network–which generalizes and extends various approaches for neural networks that operate on graphs, and provides a straightforward interface for manipulating structured knowledge and producing structured behaviors. We discuss how graph networks can support relational reasoning and combinatorial generalization, laying the foundation for more sophisticated, interpretable, and flexible patterns of reasoning. As a companion to this paper, we have released an open-source software library for building graph networks, with demonstrations of how to use them in practice.

Forty pages of very deep sledding.

On a quick scan, I take encouragement from this line:

An entity is an element with attributes, such as a physical object with a size and mass. (page 4)

Could it be that entities have identities defined by their attributes? Are the attributes and their values recursive subjects?
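To make those two questions concrete, here is a minimal Python sketch of my own. It is not taken from the paper or from the authors' library; the `Entity` class, its `of` and `get` helpers, and the example attribute names are all hypothetical. It assumes that an entity's identity is nothing more than its set of attributes, and that an attribute value may itself be an entity.

```python
from dataclasses import dataclass
from typing import Tuple, Union

# Assumption for illustration: a value is a plain scalar or another Entity.
Value = Union[int, float, str, "Entity"]


@dataclass(frozen=True)
class Entity:
    """Hypothetical entity whose identity is defined only by its attributes."""

    # Stored as a sorted tuple of (name, value) pairs so the entity is hashable.
    attributes: Tuple[Tuple[str, Value], ...]

    @classmethod
    def of(cls, **attrs: Value) -> "Entity":
        # Sort by attribute name so keyword order does not affect identity.
        return cls(tuple(sorted(attrs.items(), key=lambda pair: pair[0])))

    def get(self, name: str) -> Value:
        # Look up a single attribute value by name.
        return dict(self.attributes)[name]


# The value of "unit" is itself an entity, which is the recursive case.
kilogram = Entity.of(symbol="kg")
two_kg = Entity.of(magnitude=2.0, unit=kilogram)

# Same attributes given in a different order yield the same identity.
ball_a = Entity.of(kind="ball", mass=two_kg)
ball_b = Entity.of(mass=two_kg, kind="ball")
heavier_ball = Entity.of(kind="ball", mass=Entity.of(magnitude=3.0, unit=kilogram))
```

Under these assumptions `ball_a == ball_b` holds while `ball_a == heavier_ball` does not, and `ball_a.get("mass").get("unit")` returns another `Entity`. Whether the paper intends identity to work this way is exactly the open question.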

Only a close read of the paper will tell, but I wanted to share it today.

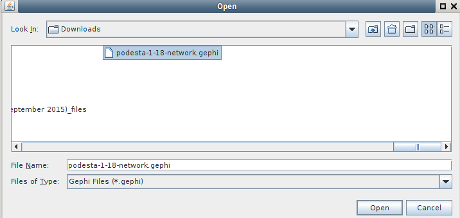

Oh, the authors have released a library for building graph networks: https://github.com/deepmind/graph_nets.