Microsoft Defies Court Order, Will Not Give Emails to US Government by Paul Thurrott.

From the post:

Despite a federal court order directing Microsoft to turn over overseas-held email data to federal authorities, the software giant said Friday it will continue to withhold that information as it waits for the case to wind through the appeals process. The judge has now ordered both Microsoft and federal prosecutors to advise her how to proceed by next Friday, September 5.

Let there be no doubt that Microsoft’s actions in this controversial case are customer-centric. The firm isn’t just standing up to the US government on moral principles. It’s now defying a federal court order.

“Microsoft will not be turning over the email and plans to appeal,” a Microsoft statement notes. “Everyone agrees this case can and will proceed to the appeals court. This is simply about finding the appropriate procedure for that to happen.”

…

Hooray for Microsoft! So say we all!

Unlike when it victimized an individual like Aaron Swartz, the United States government now faces an opponent with no fear of personal injury and with phalanxes of lawyers to defend it. An opponent that is heavily wired into the halls of government itself.

Why should other corporations join Microsoft in this fight against government overreaching?

Thurrott’s post captures the answer in a single phrase: “customer-centric.”

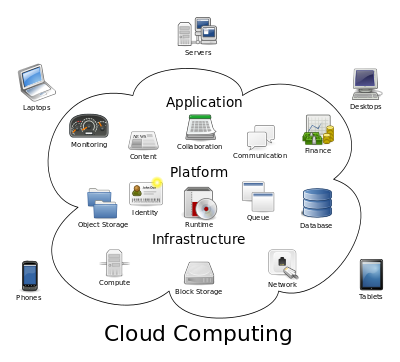

If you want to protect your part of a projected market of $235.1 billion in 2017, being customer-centric is a necessity.

That’s it. Bottom line: it is a question of cold, hard economics and profit. Lose potential customers because of government overreaching and your bottom line shrinks.

Unless a shrinking bottom line is your corporate strategy for the future (you need to tell your shareholders about that) the time to start fighting government overreaching is now.

For example, all orders for customer data or data about customers should be subject to appellate review up to and including the United States Supreme Court.

Congress has established lower courts and even secret courts, so it can damned well make their orders subject to appeal up to the Supreme Court.

If IT businesses and their customers want a “customer-centric” Cloud, the time has come to call on their elected representatives to make it so.

Possible slogan? Customer-Centric-Cloud! CCC!