Big Data Counting: How to count a billion distinct objects using only 1.5KB of Memory

From the post:

This is a guest post by Matt Abrams (@abramsm), from Clearspring, discussing how they are able to accurately estimate the cardinality of sets with billions of distinct elements using surprisingly small data structures. Their servers receive well over 100 billion events per month.

At Clearspring we like to count things. Counting the number of distinct elements (the cardinality) of a set is a challenge when the cardinality of the set is large.

…

Cardinality estimation algorithms trade space for accuracy. To illustrate this point we counted the number of distinct words in all of Shakespeare’s works using three different counting techniques. Note that our input dataset has extra data in it so the cardinality is higher than the standard reference answer to this question. The three techniques we used were a Java HashSet, a Linear Probabilistic Counter, and a HyperLogLog Counter. Here are the results:

| Counter | Bytes Used | Count | Error |
|---|---|---|---|
| HashSet | 10447016 | 67801 | 0% |
| Linear | 3384 | 67080 | 1% |
| HyperLogLog | 512 | 70002 | 3% |

The table shows that we can count the words with a 3% error rate using only 512 bytes of space. Compare that to a perfect count using a HashSet that requires nearly 10 megabytes of space and you can easily see why cardinality estimators are useful. In applications where accuracy is not paramount, which is true for most web scale and network counting scenarios, using a probabilistic counter can result in tremendous space savings.
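To make the 512-byte row more concrete, here is a minimal HyperLogLog sketch in Python. It is not the stream-lib implementation and not the code behind the table. The class name `HyperLogLog`, the SHA-1 based 64-bit hash, and the layout of 512 one-byte registers are assumptions for illustration; the post does not say how its 512 bytes were organized.

```python
import hashlib
import math


class HyperLogLog:
    """Illustrative HyperLogLog with m = 2**p one-byte registers (assumed layout)."""

    def __init__(self, p=9):
        self.p = p                      # number of hash bits used to pick a register
        self.m = 1 << p                 # p=9 gives 512 registers, i.e. 512 bytes
        self.registers = bytearray(self.m)

    def _hash64(self, item):
        # Assumption: any well-mixed 64-bit hash works; SHA-1 is used here
        # only because it is in the standard library and is deterministic.
        digest = hashlib.sha1(str(item).encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add(self, item):
        x = self._hash64(item)
        width = 64 - self.p
        index = x >> width                      # first p bits choose the register
        rest = x & ((1 << width) - 1)           # remaining bits feed the rank
        rank = width - rest.bit_length() + 1    # 1-based position of the leftmost 1-bit
        if rank > self.registers[index]:
            self.registers[index] = rank        # keep the maximum rank seen

    def estimate(self):
        m = self.m
        alpha = 0.7213 / (1 + 1.079 / m)        # bias constant, valid for m >= 128
        raw = alpha * m * m / sum(2.0 ** -r for r in self.registers)
        if raw <= 2.5 * m:
            zeros = self.registers.count(0)
            if zeros:
                # Small-range correction: fall back to linear counting.
                return m * math.log(m / zeros)
        return raw
```

Adding the same element twice never changes a register, which is why memory stays fixed no matter how many events arrive. With m registers the expected standard error is roughly 1.04/√m, about 4.6% for m = 512, which is in line with the 3% reported in the table.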

The post goes on to describe merging counters from distributed machines and choosing an acceptable error rate for probabilistic counting.
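As a rough picture of what merging can look like, here is a minimal linear probabilistic counter in Python whose bitmaps are combined with a bitwise OR. This is a sketch under stated assumptions, not the approach the post or stream-lib necessarily takes: the class name `LinearCounter`, the SHA-1 based hash, and the default of 27072 bits (3384 bytes, chosen only to match the table row) are all hypothetical.

```python
import hashlib
import math


class LinearCounter:
    """Illustrative linear probabilistic counter backed by a fixed-size bitmap."""

    def __init__(self, num_bits=27072):
        self.num_bits = num_bits
        self.bitmap = bytearray((num_bits + 7) // 8)

    def add(self, item):
        # Assumption: a deterministic hash shared by every machine.
        digest = hashlib.sha1(str(item).encode("utf-8")).digest()
        bit = int.from_bytes(digest[:8], "big") % self.num_bits
        self.bitmap[bit >> 3] |= 1 << (bit & 7)

    def merge(self, other):
        # Counters are only mergeable if they share size and hash function.
        if other.num_bits != self.num_bits:
            raise ValueError("counters must have the same number of bits")
        merged = LinearCounter(self.num_bits)
        merged.bitmap = bytearray(x | y for x, y in zip(self.bitmap, other.bitmap))
        return merged

    def estimate(self):
        ones = sum(bin(byte).count("1") for byte in self.bitmap)
        zeros = self.num_bits - ones
        if zeros == 0:
            raise ValueError("bitmap is saturated; use more bits")
        # Linear counting estimate: n is approximately -m * ln(fraction of zero bits).
        return -self.num_bits * math.log(zeros / self.num_bits)
```

Because a bit is set whenever any machine saw an element that hashes to it, the OR of two bitmaps is identical to the bitmap a single machine would have built from the union of both streams. Elements seen on both machines are therefore not double counted.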

Question: Can we make graph traversal resemble probabilistic counting?

I will have to work on a graphic but see if this word picture works for the moment.

Assume we have a 3-D graph and the top layer of nodes is composed of basketballs, the basketballs are sitting on a layer of baseballs, and the baseballs are sitting on top of marbles. Each layer represents the nodes and edges below it, except that the representation is coarser at the baseball level and coarser still at the level of basketballs.

Traversal at the “level” of basketballs may be sufficient until we reach a point of interest and then we traverse into greater detail levels of the graph.

Another illustration.

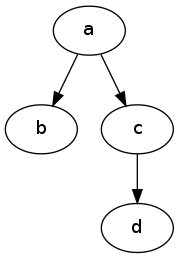

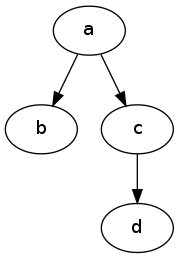

You draw and traverse from node a to node d the following graph:

Easy enough.

Now, same traversal but choose a molecule located in a to traverse to d and travel along edges between molecules.

Or, same traversal but choose an atom located in a to traverse to d and travel along edges between atoms.

In some sense the “same” path but substantially higher traversal cost at the level of greater detail.
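One way to picture the idea is a two-level search: find a route between coarse nodes first, then search the detailed graph only inside the coarse nodes on that route. The Python sketch below is purely illustrative. The function names, the `cluster_of` mapping from detailed nodes to coarse nodes, and the use of breadth-first search are all assumptions, and the graph is treated as undirected.

```python
from collections import deque


def build_undirected(edges):
    """Build an adjacency dict of sets from a list of (u, v) pairs."""
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)
    return neighbors


def bfs_path(neighbors, start, goal, allowed=None):
    """Breadth-first search; returns (path or None, number of nodes discovered)."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1], len(parents)
        for nxt in neighbors[node]:
            if nxt not in parents and (allowed is None or nxt in allowed):
                parents[nxt] = node
                queue.append(nxt)
    return None, len(parents)


def coarsen(neighbors, cluster_of):
    """Collapse detailed nodes into clusters; keep an edge if any detailed edge crosses."""
    coarse = {cluster: set() for cluster in set(cluster_of.values())}
    for node, nbrs in neighbors.items():
        for nxt in nbrs:
            a, b = cluster_of[node], cluster_of[nxt]
            if a != b:
                coarse[a].add(b)
                coarse[b].add(a)
    return coarse


def two_level_path(neighbors, cluster_of, start, goal):
    """Route at the coarse level, then search detail only inside that corridor."""
    coarse = coarsen(neighbors, cluster_of)
    coarse_path, _ = bfs_path(coarse, cluster_of[start], cluster_of[goal])
    if coarse_path is None:
        return None, 0
    corridor = set(coarse_path)
    allowed = {node for node, cluster in cluster_of.items() if cluster in corridor}
    return bfs_path(neighbors, start, goal, allowed)
```

The detailed search discovers fewer nodes because side branches outside the corridor are never entered. The trade-off is that the corridor can miss a route: two coarse nodes may be adjacent while the detailed nodes inside them are not connected in the way the coarse path suggests, so a real design would need a fallback to a wider search.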

Has someone suggested coarsening graph traversal (or having multiple levels of traversal)? Sure it has happened. Would appreciate a pointer.

The authors cite: HyperLogLog: the analysis of a near-optimal cardinality estimation algorithm (2007) by Philippe Flajolet, Éric Fusy, Olivier Gandouet, et al.

And, stream-lib, a project with many useful implementations of the strategies in the post.