DataGraft: Initial Public Release

As a former resident of Louisiana and given my views on the endemic corruption in government contracts, putting “graft” in the title of anything is like waving a red flag at a bull!

From the webpage:

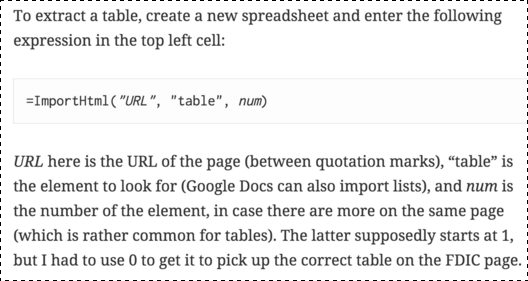

We are pleased to announce the initial public release of DataGraft – a cloud-based service for data transformation and data access. DataGraft is aimed at data workers and data developers interested in simplified and cost-effective solutions for managing their data. This initial release provides capabilities to:

- Transform tabular data and share transformations: Interactively edit, host, execute, and share data transformations

- Publish, share, and access RDF data: Data hosting and reliable RDF data access / data querying

Sign up for an account and try DataGraft now!

You may want to check out our FAQ, documentation, and the APIs. We’d be glad to hear from you – don’t hesitate to get in touch with us!

I recently followed a tweet from Kirk Borne to a Pentaho demo on data integration. I mention that because Pentaho is a good representative of the commercial end of data integration products.

Oh, the demo was impressive: a visual interface for selecting nicely styled icons for different data sources, integration, visualization, etc.

But the one characteristic it shares with DataGraft is that I would be hard pressed to follow or verify your reasoning for integrating that particular data.

If both files happen to have customerID, and by some chance it carries the same semantics in both, then you can glibly talk about integrating data from diverse resources. If not, well, then your mileage will vary a great deal.
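To make that risk concrete, here is a minimal sketch in Python using invented data. The file contents, the names, and the `join_on` helper are all hypothetical: two sources expose a `customerID` column, but in one it identifies a person and in the other a billing account. The join on the shared name runs without complaint and pairs the wrong records.

```python
# Hypothetical data: both sources use the column name "customerID",
# but the column means something different in each.
crm_rows = [  # here customerID identifies a person
    {"customerID": 101, "name": "Alice"},
    {"customerID": 102, "name": "Bob"},
]
billing_rows = [  # here customerID identifies a billing account
    # Assume account 101 actually belongs to Bob, not Alice.
    {"customerID": 101, "balance": 250.0},
]


def join_on(left, right, key):
    """Naive inner join on a shared column name (illustrative helper)."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    return [{**l, **r} for l in left for r in index.get(l[key], [])]


joined = join_on(crm_rows, billing_rows, "customerID")
print(joined)
```

Nothing in the result signals a problem: Alice is simply shown with a balance that, under the stated assumption, is not hers.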

The important point that is dropped by both Pentaho and DataGraft is that data integration isn’t just an issue for today. That same data integration must be robust long after I have moved on to another position.

As with spreadsheets, the next person in my position could just run the process blindly and hope that no one ever asks for a substantive change, but that sounds terribly inefficient.

Why not provide users with the ability to disclose the properties they “see” in the data sources and to indicate why they made the mappings they did?

That is, make the mapping process more transparent.
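As a rough illustration of what such disclosure could look like, here is a small Python sketch. It does not reflect anything DataGraft or Pentaho offers; the class names, property keys, file names, and author are invented for the example. Each side of a mapping records the properties its author saw in the field, and the mapping itself carries a written rationale that a successor can read and challenge.

```python
from dataclasses import dataclass


@dataclass
class FieldDescription:
    """What the author 'sees' in one field of one source (hypothetical schema)."""
    source: str
    column: str
    properties: dict


@dataclass
class Mapping:
    """A proposed mapping between two fields, with the reasoning behind it."""
    left: FieldDescription
    right: FieldDescription
    rationale: str
    author: str

    def shared(self):
        # Properties on which both descriptions agree.
        return {k: v for k, v in self.left.properties.items()
                if self.right.properties.get(k) == v}

    def conflicts(self):
        # Properties described on both sides but with different values.
        return {k: (v, self.right.properties[k])
                for k, v in self.left.properties.items()
                if k in self.right.properties and self.right.properties[k] != v}


crm_id = FieldDescription(
    "crm.csv", "customerID",
    {"identifies": "person", "issued_by": "sales", "type": "integer"},
)
billing_id = FieldDescription(
    "billing.csv", "customerID",
    {"identifies": "billing account", "issued_by": "finance", "type": "integer"},
)

mapping = Mapping(
    crm_id, billing_id,
    rationale="Same column name, but the subjects differ; "
              "do not join without a person-to-account lookup.",
    author="previous analyst",
)

print(mapping.shared())
print(mapping.conflicts())
```

The point of the design is that the rationale and the observed properties travel with the mapping, so whoever inherits the process can see why two fields were (or were not) treated as the same thing.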