Encyclopedia of Distances (4th edition) by Michel Marie Deza and Elena Deza.

Springer description:

This 4-th edition of the leading reference volume on distance metrics is characterized by updated and rewritten sections on some items suggested by experts and readers, as well as a general streamlining of content and the addition of essential new topics. Though the structure remains unchanged, the new edition also explores recent advances in the use of distances and metrics for e.g. generalized distances, probability theory, graph theory, coding theory, data analysis.

New topics in the purely mathematical sections include e.g. the Vitanyi multiset-metric, algebraic point-conic distance, triangular ratio metric, Rossi-Hamming metric, Taneja distance, spectral semimetric between graphs, channel metrization, and Maryland bridge distance. The multidisciplinary sections have also been supplemented with new topics, including: dynamic time wrapping distance, memory distance, allometry, atmospheric depth, elliptic orbit distance, VLBI distance measurements, the astronomical system of units, and walkability distance.

Leaving aside the practical questions that arise during the selection of a ‘good’ distance function, this work focuses on providing the research community with an invaluable comprehensive listing of the main available distances.

As well as providing standalone introductions and definitions, the encyclopedia facilitates swift cross-referencing with easily navigable bold-faced textual links to core entries. In addition to distances themselves, the authors have collated numerous fascinating curiosities in their Who’s Who of metrics, including distance-related notions and paradigms that enable applied mathematicians in other sectors to deploy research tools that non-specialists justly view as arcane. In expanding access to these techniques, and in many cases enriching the context of distances themselves, this peerless volume is certain to stimulate fresh research.

Ransomed for $149 (US) per digital copy, this remarkable work should have a broad readership.

From the introduction to the 2009 edition:

…

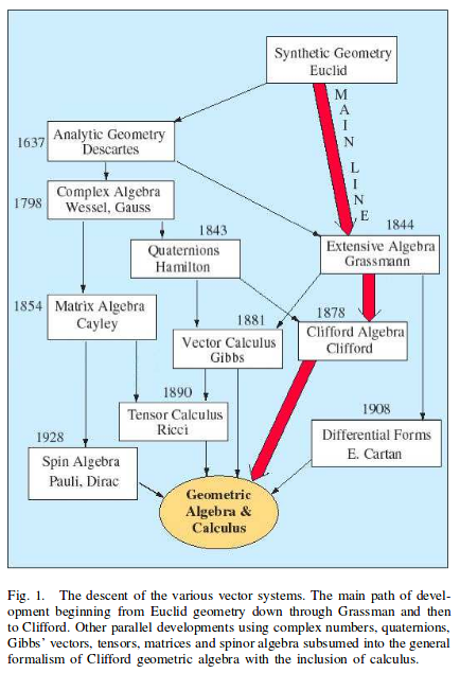

Distance metrics and distances have now become an essential tool in many areas of Mathematics and its applications including Geometry, Probability, Statistics, Coding/Graph Theory, Clustering, Data Analysis, Pattern Recognition, Networks, Engineering, Computer Graphics/Vision, Astronomy, Cosmology, Molecular Biology, and many other areas of science. Devising the most suitable distance metrics and similarities, to quantify the proximity between objects, has become a standard task for many researchers. Especially intense ongoing search for such distances occurs, for example, in Computational Biology, Image Analysis, Speech Recognition, and Information Retrieval.

Often the same distance metric appears independently in several different areas; for example, the edit distance between words, the evolutionary distance in Biology, the Levenstein distance in Coding Theory, and the Hamming+Gap or shuffle-Hamming distance.

(emphasis added)

…

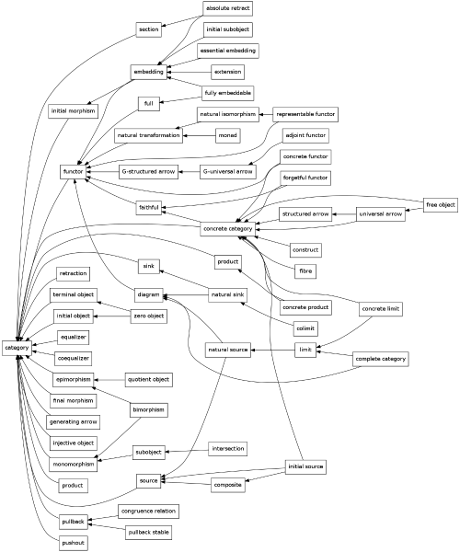

I highlighted that last sentence to emphasize that Encyclopedia of Distances is a static and undisclosed topic map.

While readers familiar with the concepts:

edit distance between words, the evolutionary distance in Biology, the Levenstein distance in Coding Theory, and the Hamming+Gap or shuffle-Hamming distance.

could enumerate why those merit being called “the same distance metric,” no indexing program can accomplish the same feat.

If each of those concepts had enumerated properties that an indexing program could compare, readers could not only find those “same distance metrics” but also detect new rediscoveries of that same metric.
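To make that concrete, here is a minimal sketch of what "enumerated properties" might look like to a program. The property names (`domain`, `operations`, `cost`) and the entries themselves are my own illustrative assumptions, not anything disclosed in the Encyclopedia; a real property set would have to come from the authors or a subject expert.

```python
from collections import defaultdict

# Hypothetical property records. The keys and values are illustrative
# assumptions for this sketch, not data taken from the Encyclopedia.
ENTRIES = {
    "edit distance (words)": {
        "domain": "finite strings",
        "operations": frozenset({"insert", "delete", "substitute"}),
        "cost": "unit",
    },
    "Levenstein distance (Coding Theory)": {
        "domain": "finite strings",
        "operations": frozenset({"insert", "delete", "substitute"}),
        "cost": "unit",
    },
    "Hamming distance": {
        "domain": "equal-length strings",
        "operations": frozenset({"substitute"}),
        "cost": "unit",
    },
}


def group_by_properties(entries):
    """Return lists of entry names whose property records are identical."""
    groups = defaultdict(list)
    for name, props in entries.items():
        # A frozenset of (key, value) pairs is hashable and order-independent.
        groups[frozenset(props.items())].append(name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


for names in group_by_properties(ENTRIES):
    print("same metric:", ", ".join(names))
```

Exact matching on every property is the crudest possible rule. The point is only that once the basis for "sameness" is written down, a newly described metric can be added to `ENTRIES` and checked against every existing entry without consulting the original editors.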

As it stands, readers must rely upon the undisclosed judgments of the Dezas and hope they continue to revise and extend this work.

When they cease to do so, subsequent editors will be forced to reconstruct the basis for adding new or rediscovered metrics to it.

PS: Suggestions of similar titles that deal with non-metric distances? I’m familiar with works that impose metrics on non-metric distances but that’s not what I have in mind. That’s an arbitrary and opaque mapping from non-metric to metric.