From Records to a Web of Library Data – Pt3 Beacons of Availability by Richard Wallis.

Beacons of Availability

As I indicated in the first of this series, there are descriptions of a broader collection of entities, than just books, articles and other creative works, locked up in the Marc and other records that populate our current library systems. By mining those records it is possible to identify those entities, such as people, places, organisations, formats and locations, and model & describe them independently of their source records.

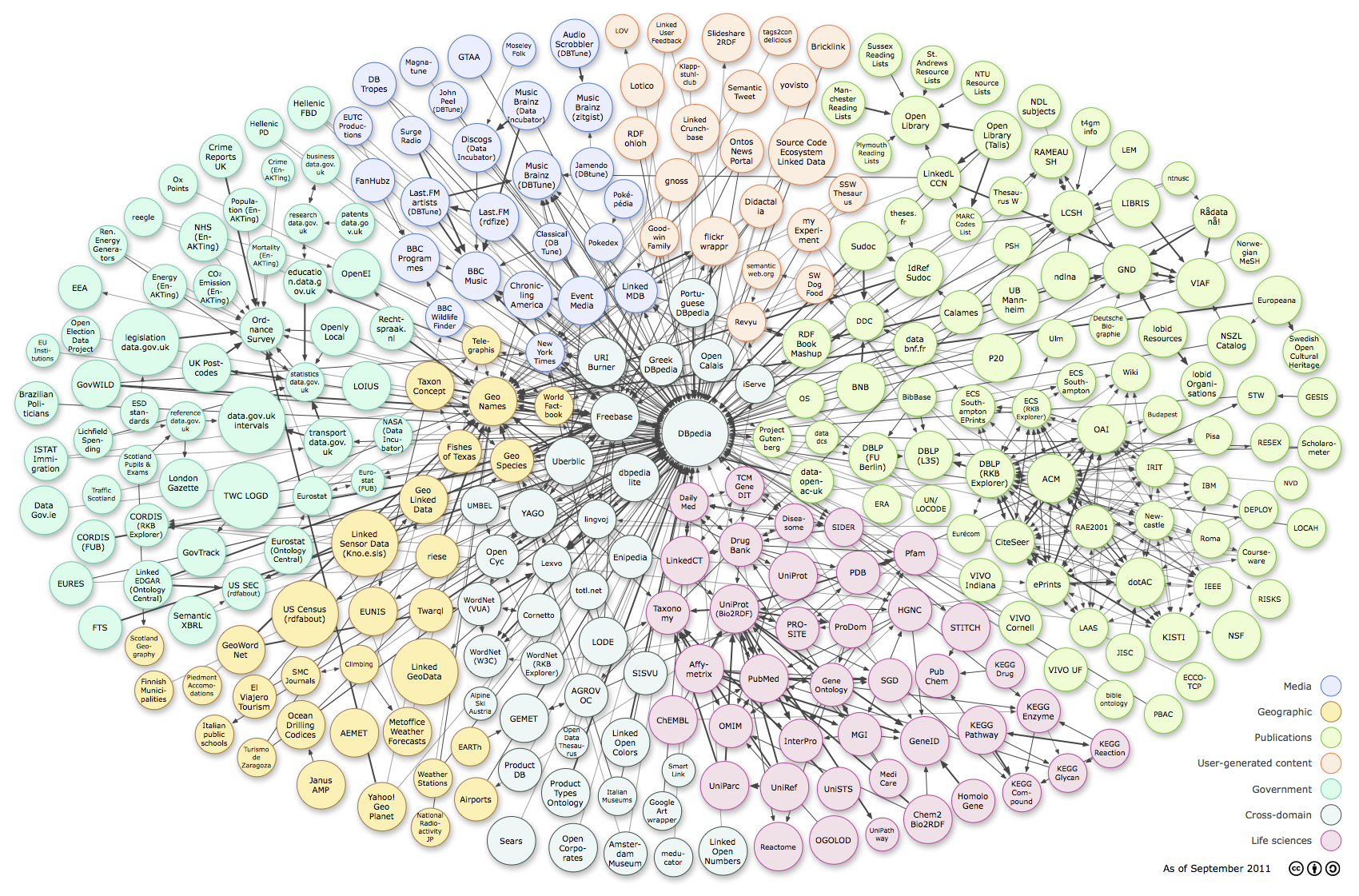

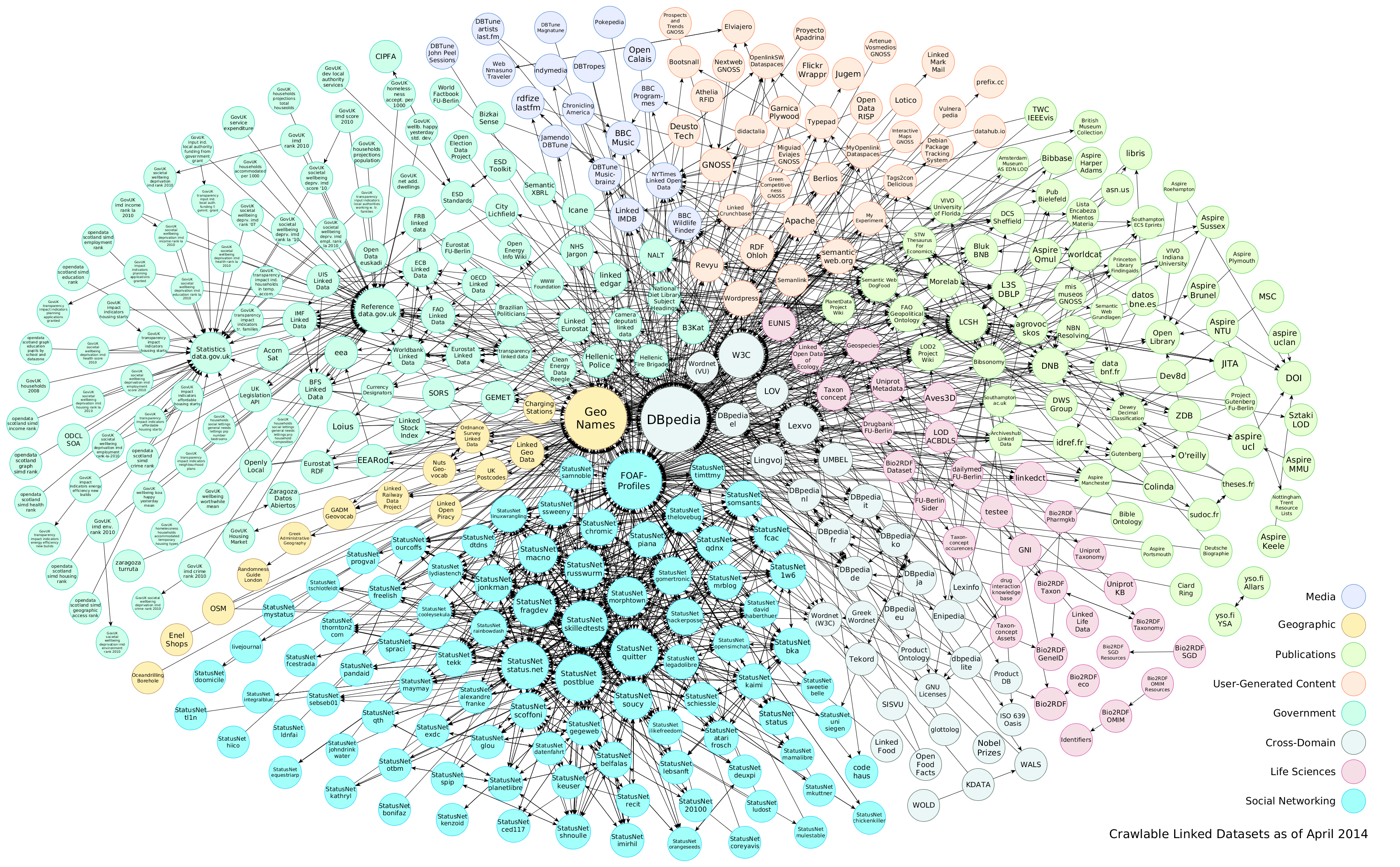

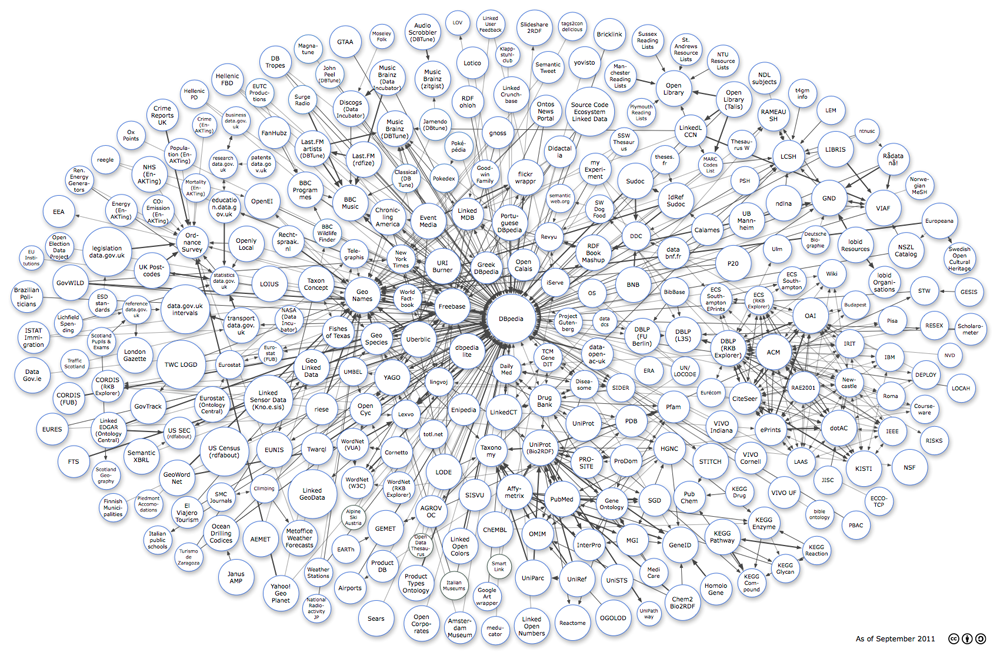

As I discussed in the post that followed, the library domain has often led in the creation and sharing of authoritative datasets for the description of many of these entity types. Bringing these two together, using URIs published by the Hubs of Authority, to identify individual relationships within bibliographic metadata published as RDF by individual library collections (for example the British National Bibliography, and WorldCat) is creating Library Linked Data openly available on the Web.

Why do we catalogue? is a question, I often ask, with an obvious answer – so that people can find our stuff. How does this entification, sharing of authorities, and creation of a web of library linked data help us in that goal? In simple terms, the more libraries can understand what resources each other hold, describe, and reference, the more able they are to guide people to those resources. Sounds like a great benefit and mission statement for libraries of the world but unfortunately not one that will nudge the needle on making library resources more discoverable for the vast majority of those that can benefit from them.

I have lost count of the number of presentations and reports I have seen telling us that upwards of 80% of visitors to library search interfaces start in Google. A similar weight of opinion can be found that complains how bad Google, and the other search engines, are at representing library resources. You will get some balancing opinion, supporting how good Google Book Search and Google Scholar are at directing students and others to our resources. Yet I am willing to bet that again we have another 80-20 equation or worse about how few, of the users that libraries want to reach, even know those specialist Google services exist. A bit of a sorry state of affairs when the major source of searching for our target audience, is also acknowledged to be one of the least capable at describing and linking to the resources we want them to find!

Library linked data helps solve both the problem of better description and findability of library resources in the major search engines. Plus it can help with the problem of identifying where a user can gain access to that resource to loan, download, view via a suitable license, or purchase, etc.

I am an ardent sympathizer with helping people to find “our stuff.”

I don’t disagree with the description of Google as: “…the major source of searching for our target audience, is also acknowledged to be one of the least capable at describing and linking to the resources we want them to find!”

But in all fairness to Google, I would remind you of Drabenstott’s research that found for the Library of Congress subject headings:

Overall percentages of correct meanings for subject headings in the original order of subdivisions were as follows:

| Group | Correct meanings |
| --- | --- |
| children | 32% |
| adults | 40% |
| reference | 53% |
| technical services librarians | 56% |

The Library of Congress subject classification has been around for more than a century and just over half of the librarians can use it correctly.

Let’s not wait more than a century to test the claim:*

“Library linked data helps solve both the problem of better description and findability of library resources in the major search engines.”

* By “test” I don’t mean the sort of study, “…we recruited twelve LIS students but one had to leave before the study was complete….”

I am using “test” in the sense of a well-designed and organized social science project with professional assistance from social scientists, UI test designers and the like.

I think OCLC is quite sincere in its promotion of linked data, but effectiveness is an empirical question, not one of sincerity.