Interpretable Machine Learning: A Guide for Making Black Box Models Explainable by Christoph Molnar.

From the introduction:

Machine learning has great potential for improving products, processes and research. But computers usually do not explain their predictions, which is a barrier to the adoption of machine learning. This book is about making machine learning models and their decisions interpretable.

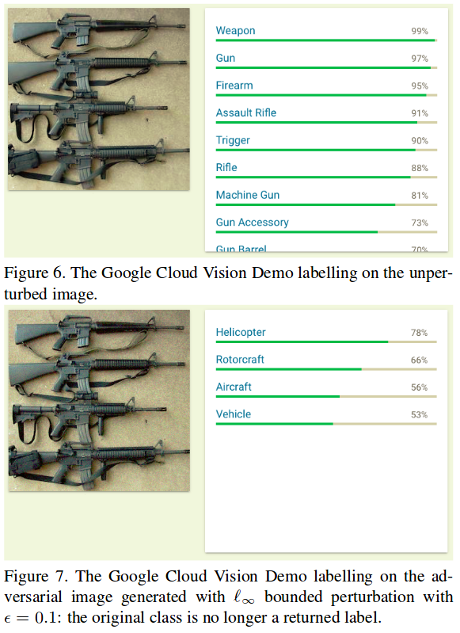

After exploring the concepts of interpretability, you will learn about simple, interpretable models such as decision trees, decision rules and linear regression. Later chapters focus on general model-agnostic methods for interpreting black box models like feature importance and accumulated local effects and explaining individual predictions with Shapley values and LIME.

All interpretation methods are explained in depth and discussed critically. How do they work under the hood? What are their strengths and weaknesses? How can their outputs be interpreted? This book will enable you to select and correctly apply the interpretation method that is most suitable for your machine learning project.

The book focuses on machine learning models for tabular data (also called relational or structured data) and less on computer vision and natural language processing tasks. Reading the book is recommended for machine learning practitioners, data scientists, statisticians, and anyone else interested in making machine learning models interpretable.

I can see two immediate uses for this book.

First, as Molnar states in the introduction, you can pierce the veil around machine learning and explain why your model reached a particular result. Think of it as transparency in machine learning.

Second, after piercing the veil around machine learning, you can choose a model, or nudge one, in the direction of a result specified by management. Or, having gotten a desired result, you can train a more obscure technique to replicate it. Think of it as opacity in machine learning.

Enjoy!