Congressional PageRank – Analyzing US Congress With Neo4j and Apache Spark by William Lyon.

From the post:

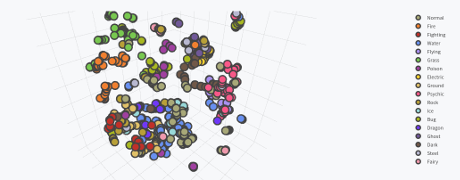

As we saw previously, legis-graph is an open source software project that imports US Congressional data from Govtrack into the Neo4j graph database. This post shows how we can apply graph analytics to US Congressional data to find influential legislators in Congress. Using the Mazerunner open source graph analytics project we are able to use Apache Spark GraphX alongside Neo4j to run the PageRank algorithm on a collaboration graph of US Congress.

While Neo4j is a powerful graph database that allows for efficient OLTP queries and graph traversals using the Cypher query language, it is not optimized for global graph algorithms, such as PageRank. Apache Spark is a distributed in-memory large-scale data processing engine with a graph processing framework called GraphX. GraphX with Apache Spark is very efficient at performing global graph operations, like the PageRank algorithm. By using Spark alongside Neo4j we can enhance our analysis of US Congress using legis-graph.

…

Excellent walk-through to get you started on analyzing influence in Congress with modern data analysis tools. Getting a good grip on all these tools will be valuable.
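For a feel of what PageRank computes on a collaboration graph, here is a minimal sketch in plain Python. It is not the Mazerunner or GraphX code from the post. The legislator names, the edge list, and the convention that an edge points from a cosponsor to a bill's sponsor are all assumptions made for illustration, and the damping factor of 0.85 is simply the conventional default.

```python
def pagerank(edges, damping=0.85, iterations=50):
    """Power-iteration PageRank over a list of directed (source, target) edges."""
    nodes = sorted({node for edge in edges for node in edge})
    out_links = {node: [] for node in nodes}
    for source, target in edges:
        out_links[source].append(target)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes with no outgoing edges is spread evenly over all nodes.
        dangling = sum(rank[node] for node in nodes if not out_links[node])
        new_rank = {node: (1.0 - damping) / n + damping * dangling / n for node in nodes}
        # Every node passes its rank in equal shares along its outgoing edges.
        for source in nodes:
            for target in out_links[source]:
                new_rank[target] += damping * rank[source] / len(out_links[source])
        rank = new_rank
    return rank


# Hypothetical data: an edge (a, b) means legislator a cosponsored a bill sponsored by b.
cosponsorships = [
    ("Legislator A", "Legislator C"),
    ("Legislator B", "Legislator C"),
    ("Legislator C", "Legislator A"),
    ("Legislator D", "Legislator C"),
]

ranks = pagerank(cosponsorships)
for name, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.3f}")
```

In this toy graph the legislator who attracts the most cosponsors ends up with the highest score, which is the sense of "influential" that PageRank captures. Whether that matches influence in any political sense is a separate question.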

Political scientists, among others, have studied the question of influence in Congress for decades, so if you don’t want to repeat the results of others, begin by consulting the American Political Science Review for prior work in this area.

An article that reports counter-intuitive results is: The Influence of Campaign Contributions on the Legislative Process by Lynda W. Powell.

From the introduction:

Do campaign donors gain disproportionate influence in the legislative process? Perhaps surprisingly, political scientists have struggled to answer this question. Much of the research has not identified an effect of contributions on policy; some political scientists have concluded that money does not matter; and this bottom line has been picked up by reporters and public intellectuals. It is essential to answer this question correctly because the result is of great normative importance in a democracy.

It is important to understand why so many studies find no causal link between contributions and policy outcomes. (emphasis added)

Lynda cites much of the existing work on the influence of donations on the legislative process, so her work makes a great starting point for further research.

As for the lack of a “causal link between contributions and policy outcomes,” I think the answer is far simpler than Lynda suspects.

The existence of a quid pro quo, the exchange of value for a vote on a particular bill, is the essence of the crime of public bribery. For the details (in the United States), see: 18 U.S. Code § 201 – Bribery of public officials and witnesses.

What isn’t public bribery is to donate funds to an office holder on a regular basis, unrelated to any particular vote or act on the part of that official. Think of it as bribery on an installment plan.

When U.S. officials, such as former Secretary of State Hillary Clinton, complain of corruption in other governments, they are criticizing quid-pro-quo bribery and not installment plan bribery as it is practiced in the United States.

Regular contributions gain ready access to legislators and, not surprisingly, more votes will go in your favor than random chance would allow.

Regular contributions are more expensive than direct bribes, but avoiding the “causal link” is essential for all involved.