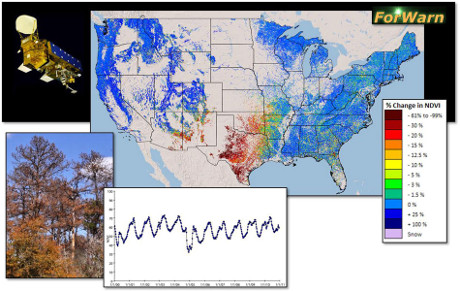

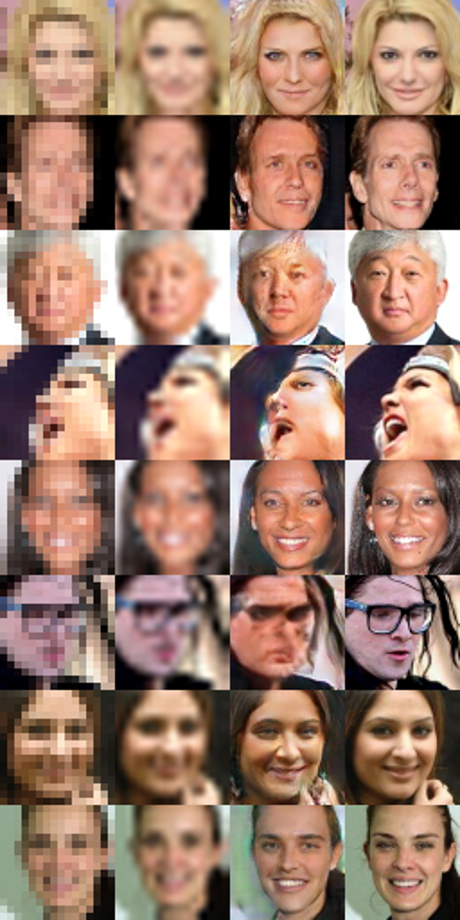

IBM Research Releases ‘Diversity in Faces’ Dataset to Advance Study of Fairness in Facial Recognition Systems by John R. Smith.

From the post:

Have you ever been treated unfairly? How did it make you feel? Probably not too good. Most people generally agree that a fairer world is a better world, and our AI researchers couldn’t agree more. That’s why we are harnessing the power of science to create AI systems that are more fair and accurate.

Many of our recent advances in AI have produced remarkable capabilities for computers to accomplish increasingly sophisticated and important tasks, like translating speech across languages to bridge communications across cultures, improving complex interactions between people and machines, and automatically recognizing contents of video to assist in safety applications.

Much of the power of AI today comes from the use of data-driven deep learning to train increasingly accurate models by using growing amounts of data. However, the strength of these techniques can also be a weakness. The AI systems learn what they’re taught, and if they are not taught with robust and diverse datasets, accuracy and fairness could be at risk. For that reason, IBM, along with AI developers and the research community, need to be thoughtful about what data we use for training. IBM remains committed to developing AI systems to make the world more fair.

…

To request access to the DiF dataset, visit our webpage. To learn more about DiF, read our paper, “Diversity in Faces.”

Nice of Smith to ask if we have “ever been treated unfairly?”

Because if not before, then certainly now, given the limitations on access to the “Diversity in Faces” dataset.

Step 1

Review the DiF Terms of Use and Privacy Notice.

DOCUMENTS

Step 2

Download and complete the questionnaire.

DOCUMENT

Step 3

Email completed questionnaire to IBM Research.

APPLICATION CONTACT

Michele Merler | mimerler@us.ibm.com

Step 4

Further instructions will be provided from IBM Research via email once application is approved.

Check out Terms of Use, 3. IP Rights, 3.2 #5:

…

Licensee grants to IBM a non-exclusive, irrevocable, unrestricted, worldwide and paid-up right, license and sublicense to: a) include in any product or service any idea, know-how, feedback, concept, technique, invention, discovery or improvement, whether or not patentable, that Licensee provides to IBM, b) use, manufacture and market any such product or service, and c) allow others to do any of the foregoing. (emphasis added)

…

Treated unfairly? There’s the grasping claw of IBM so familiar across the decades. I suppose we should be thankful it doesn’t include any ideas, concepts, patents, etc., that you develop while in possession of the dataset. From that perspective, the terms of use are downright liberal.