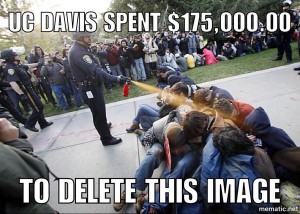

Safiya Noble’s Algorithms of Oppression highlights the necessity of asking members of marginalized communities about their experiences with algorithms. I can read the terms that Noble uses in her Google searches and her analysis of the results. What I can’t do, as an older white male, is authentically originate the queries of a Black woman scholar or estimate her reaction to search results.

That inability to assume a role in a marginalized community extends across all marginalized communities and in between them. To understand the impact of oppressive algorithms, such as Google’s search algorithms, we must:

- Empower everyone who can use a web browser with the ability to black box Google’s algorithm of oppression, and

- Listen to their reports of queries and experiences with results of queries.

Empowering everyone to participate in testing Google’s algorithms avoids relying on secondhand reports about the experiences of marginalized communities. We will be listening to members of those communities directly.

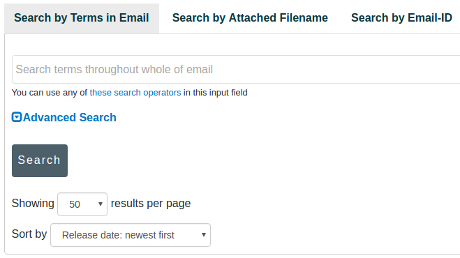

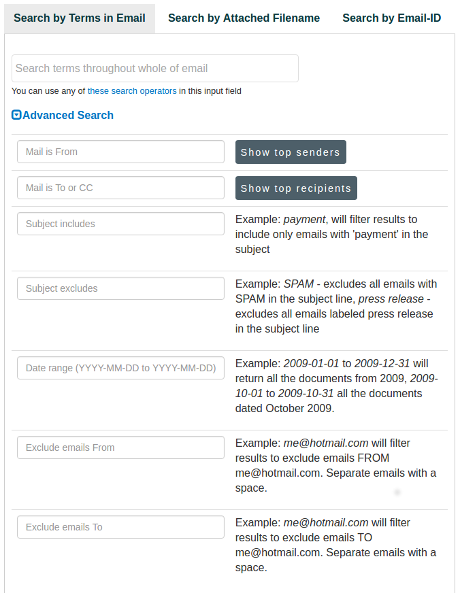

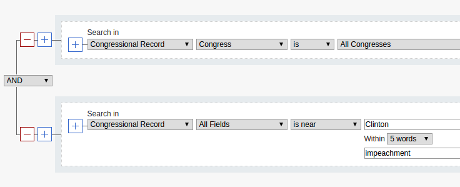

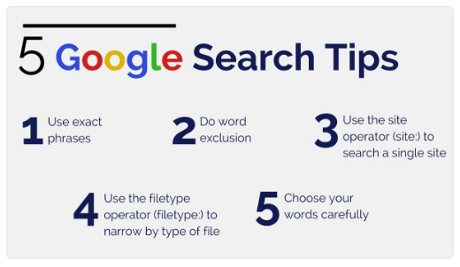

In its simplest form, your black boxing of Google starts with a Google search box, then:

your search terms site:website OR site:website

That search string states your search terms and is then followed by an OR list of websites you want searched. The results are Google’s ranking of your search against specified websites.
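If you would rather not type long OR lists by hand, the pattern above can be assembled with a few lines of Python. This is only a sketch: the function names `build_site_query` and `google_search_url` are made up for illustration, and the URL assumes Google's usual `q` query parameter on `https://www.google.com/search`.

```python
from urllib.parse import urlencode


def build_site_query(terms, sites):
    """Return the search terms followed by an OR list of site: filters."""
    site_filter = " OR ".join(f"site:{site}" for site in sites)
    return f"{terms} {site_filter}"


def google_search_url(query):
    """Return a URL you can paste into a browser (assumes the q parameter)."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = build_site_query("terrorism trump IS", ["nytimes.com", "fox.com", "wsj.com"])
print(query)
print(google_search_url(query))
```

The first line printed is the search string itself, ready to paste into the search box. The second is the same search as a link you can share with someone else who wants to repeat it.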

Here’s an example I ran while working on this post:

terrorism trump IS site:nytimes.com OR site:fox.com OR site:wsj.com

Without running the search yourself, what distribution of articles do you expect to see? (I also tested this using Tor to make sure my search history wasn’t creating an issue.)

By count of the results: nytimes.com 87, fox.com 0, wsj.com 18.
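Counting results per site by hand gets tedious. One way to tally them, assuming you have copied the result URLs from the results page into a list yourself, is sketched below. The function name `count_by_site` is hypothetical, and the URLs in the usage lines are made-up placeholders, not real search results.

```python
from urllib.parse import urlparse


def count_by_site(result_urls, sites):
    """Count how many result URLs belong to each site (subdomains included)."""
    counts = {site: 0 for site in sites}
    for url in result_urls:
        host = (urlparse(url).hostname or "").lower()
        for site in sites:
            # Match the bare domain or any subdomain such as www.nytimes.com
            if host == site or host.endswith("." + site):
                counts[site] += 1
                break
    return counts


# Placeholder URLs for illustration only; paste in the results you collected.
pasted = [
    "https://www.nytimes.com/placeholder-1",
    "https://www.wsj.com/placeholder-2",
    "https://www.nytimes.com/placeholder-3",
]
print(count_by_site(pasted, ["nytimes.com", "fox.com", "wsj.com"]))
```

Sites with no results stay in the tally with a count of zero, which matters here: a zero is as much a finding as a large number.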

Surprised? I was. How does the Washington Post stack up against the New York Times? Same terms: nytimes.com 49, washingtonpost.com 52.

Do you think those differences are accidental? (I don’t.)

I’m not competent to create a list of Black websites for testing Google’s algorithm of oppression, but the African American Literature Book Club has a list of the top 50 Black-Owned Websites. In addition, they offer a list of 300 Black-owned websites and host the search engine Huria Search, which only searches Black-owned websites.

To save you the extraction work, here are the top 50 Black-owned websites ready for testing against each other and other sites in the bowels of Google:

essence.com OR howard.edu OR blackenterprise.com OR thesource.com OR ebony.com OR blackplanet.com OR sohh.com OR blackamericaweb.com OR hellobeautiful.com OR allhiphop.com OR worldstarhiphop.com OR eurweb.com OR rollingout.com OR thegrio.com OR atlantablackstar.com OR bossip.com OR blackdoctor.org OR blackpast.org OR lipstickalley.com OR newsone.com OR madamenoire.com OR morehouse.edu OR diversityinc.com OR spelman.edu OR theybf.com OR hiphopwired.com OR aalbc.com OR stlamerican.com OR afro.com OR phillytrib.com OR finalcall.com OR mediatakeout.com OR lasentinel.net OR blacknews.com OR blavity.com OR cassiuslife.com OR jetmag.com OR blacklivesmatter.com OR amsterdamnews.com OR diverseeducation.com OR deltasigmatheta.org OR curlynikki.com OR atlantadailyworld.com OR apa1906.net OR theshaderoom.com OR notjustok.com OR travelnoire.com OR thecurvyfashionista.com OR dallasblack.com OR forharriet.com
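The list above gives bare domains, so each one still needs a site: prefix before it works in the search pattern described earlier. The sketch below adds the prefix and splits the list into smaller batches, on the assumption that a single query with all 50 sites may be too long for Google to accept in full. The function name `site_queries` and the default batch size of 10 are my own choices for illustration, not tested limits.

```python
def site_queries(terms, or_list, batch_size=10):
    """Turn 'a.com OR b.com ...' into one site: query per batch of domains."""
    domains = [d.strip() for d in or_list.split(" OR ") if d.strip()]
    queries = []
    for start in range(0, len(domains), batch_size):
        batch = domains[start:start + batch_size]
        site_filter = " OR ".join(f"site:{d}" for d in batch)
        queries.append(f"{terms} {site_filter}")
    return queries


# First five domains from the list above; paste the full list to use all 50.
excerpt = "essence.com OR howard.edu OR blackenterprise.com OR thesource.com OR ebony.com"
for q in site_queries("your search terms", excerpt, batch_size=2):
    print(q)
```

Each printed line is a complete search string. Mixing in other sites, such as the newspapers tested earlier, is a matter of adding their domains to the OR list before batching.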

Please spread the word to “young Black girls,” to use Noble’s phrase, to Black women in general, and to all marginalized communities: they need not wait for experts with programming staffs to detect marginalization at Google. Experts have agendas; discover your own and tell the rest of us about it.