The New York Times built a robot to help make article tagging easier by Justin Ellis.

From the post:

If you write online, you know that a final, tedious part of the process is adding tags to your story before sending it out to the wider world.

Tags and keywords in articles help readers dig deeper into related stories and topics, and give search audiences another way to discover stories. A Nieman Lab reader could go down a rabbit hole of tags, finding all our stories mentioning Snapchat, Nick Denton, or Mystery Science Theater 3000.

Those tags can also help newsrooms create new products and find inventive ways of collecting content. That’s one reason The New York Times Research and Development lab is experimenting with a new tool that automates the tagging process using machine learning — and does it in real time.

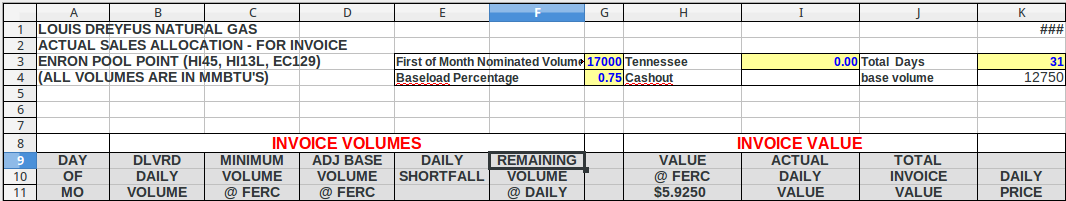

The Times R&D Editor tool analyzes text as it’s written and suggests tags along the way, in much the way that spell-check tools highlight misspelled words:

…

Great post, but why not take the “…in much the way that spell-check tools highlight misspelled words” idea just a step further?

Apache OpenOffice already has spell-checking, so why not improve it to have automatic tagging?

You may or may not know that Open Document Format (ODF) 1.2, the format used by Apache OpenOffice, was just published as an ISO standard!

Open Document Format (ODF) 1.2 supports RDFa for inline metadata.

Now, imagine for a moment using standard office suite software (Apache OpenOffice) to choose a metadata dictionary and have your content automatically tagged as you type, or to insert an existing document and have tags automatically added to its text.
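To make the idea concrete, here is a minimal sketch of dictionary-driven tagging in Python. Everything in it is an assumption for illustration: the `TAG_DICTIONARY` entries, the `example.org` IRIs, and the `tag_text` helper are hypothetical, and the emitted `text:meta` markup is only RDFa-style output that has not been validated against the ODF 1.2 schema. It is not how the Times tool or OpenOffice works.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical metadata dictionary: surface term -> (subject IRI, RDF property).
# The IRIs and property names are placeholders, not a real vocabulary.
TAG_DICTIONARY = {
    "Snapchat": ("http://example.org/topic/snapchat", "dc:subject"),
    "Nick Denton": ("http://example.org/person/nick-denton", "dc:subject"),
}


def tag_text(text, dictionary=TAG_DICTIONARY):
    """Wrap each dictionary term found in `text` in an RDFa-style element."""
    if not dictionary:
        return escape(text)
    # Try longer terms first so multi-word terms win over shorter overlaps.
    terms = sorted(dictionary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b")

    pieces = []
    pos = 0
    for match in pattern.finditer(text):
        term = match.group(1)
        about, prop = dictionary[term]
        # Untagged text before the match is XML-escaped and copied through.
        pieces.append(escape(text[pos:match.start()]))
        # Illustrative markup only; not checked against the ODF schema.
        pieces.append(
            "<text:meta xhtml:about=%s xhtml:property=%s>%s</text:meta>"
            % (quoteattr(about), quoteattr(prop), escape(term))
        )
        pos = match.end()
    pieces.append(escape(text[pos:]))
    return "".join(pieces)
```

Calling `tag_text("I use Snapchat daily.")` wraps only the word "Snapchat" and leaves the rest of the sentence alone. A real implementation would hook into the editor's event loop the way a spell-checker does, rather than reprocessing a whole string.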

Does that sound like a killer application for your corner of the woods?

A universal dictionary of RDFa tags might be a real memory hog, but how many different tags would you need day to day? That is an empirical question, and one that could be answered by indexing your documents from the past six (6) months.
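As a rough sketch of how that question could be answered, the Python below counts how many documents mention each candidate term. The `count_tag_usage` helper and its inputs are hypothetical; it assumes the documents have already been extracted to plain-text strings and that the candidate terms come from whatever dictionary you are considering.

```python
import re
from collections import Counter


def count_tag_usage(documents, terms):
    """Return a Counter mapping each term to the number of documents mentioning it."""
    counts = Counter()
    if not terms:
        return counts
    # Longer terms first so multi-word terms are preferred over shorter overlaps.
    ordered = sorted(terms, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(t) for t in ordered) + r")\b")
    for doc in documents:
        # Use a set so a term is counted once per document, not once per mention.
        counts.update(set(pattern.findall(doc)))
    return counts
```

The number of keys in the returned counter is the number of distinct tags actually used in the sample, which gives a first estimate of how large a working dictionary needs to be.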

With very little effort on the part of users, you can transform your documents from unstructured text to tagged (and proofed) text.

Assemble at the Apache OpenOffice (or LibreOffice) projects if an easy-to-use, easy-to-modify tagging system for office suite software appeals to you.

For other software projects supporting ODF, see: OpenDocument software.

PS: Work is currently underway at the ODF TC (OASIS) on robust change tracking support. All we are missing is you.