Musical Genres Classified Using the Entropy of MIDI Files (Emerging Technology from the arXiv, October 15, 2015)

Communication is the process of reproducing a message created at one point in space at another point in space. It has been studied in depth by numerous scientists and engineers, but it is the mathematical treatment of communication that has had the most profound influence.

To mathematicians, the details of a message are of no concern. All that matters is that the message can be thought of as an ordered set of symbols. Mathematicians have long known that this set is governed by fundamental laws first outlined by Claude Shannon in his mathematical theory of communication.

Shannon’s work revolutionized the way engineers think about communication but it has far-reaching consequences in other areas, too. Language involves the transmission of information from one individual to another and information theory provides a window through which to study and understand its nature. In computing, data is transmitted from one location to another and information theory provides the theoretical bedrock that allows this to be done most efficiently. And in biology, reproduction can be thought of as the transmission of genetic information from one generation to the next.

Music too can be thought of as the transmission of information from one location to another, but scientists have had much less success in using information theory to characterize music and study its nature.

Today, that changes thanks to the work of Gerardo Febres and Klaus Jaffé at Simon Bolivar University in Venezuela. These guys have found a way to use information theory to tease apart the nature of certain types of music and to automatically classify different musical genres, a famously difficult task in computer science.

One reason why music is so hard to study is that it does not easily translate into an ordered set of symbols. Music often consists of many instruments playing different notes at the same time. Each of these can have various qualities of timbre, loudness, and so on.

…

Music viewed by its Entropy content: A novel window for comparative analysis by Gerardo Febres and Klaus Jaffe.

Abstract:

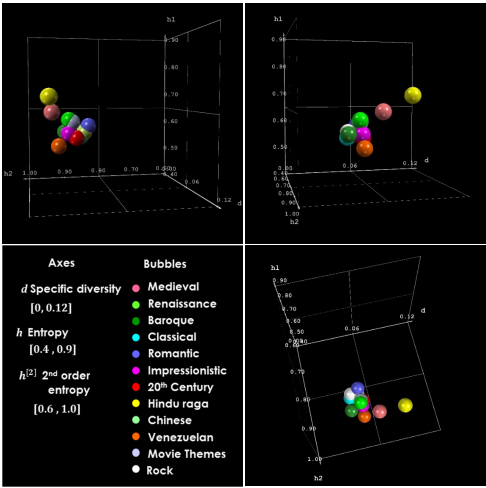

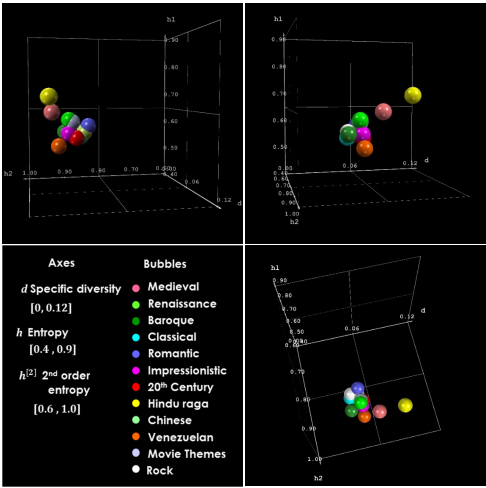

Texts of polyphonic music MIDI files were analyzed using the set of symbols that produced the Fundamental Scale (a set of symbols leading to the Minimal Entropy Description). We created a space to represent music pieces by developing: (a) a method to adjust a description from its original scale of observation to a general scale, (b) the concept of higher order entropy as the entropy associated to the deviations of a frequency ranked symbol profile from a perfect Zipf profile. We called this diversity index the “2nd Order Entropy”. Applying these methods to a variety of musical pieces showed how the space “symbolic specific diversity-entropy – 2nd order entropy” captures some of the essence of music types, styles, composers and genres. Some clustering around each musical category is shown. We also observed the historic trajectory of music across this space, from medieval to contemporary academic music. We show that description of musical structures using entropy allows to characterize traditional and popular expressions of music. These classification techniques promise to be useful in other disciplines for pattern recognition, machine learning, and automated experimental design for example.

Their process simplifies the data stream, much as you choose which subjects you want to talk about in a topic map.

Purists will object, but realize that the objection arises because they have chosen a different (and much more complex) set of subjects to talk about in the analysis of music.

The important point is to realize we are always choosing different degrees of granularity of subjects and their identifications, for some specific purpose. Change that purpose and the degree of granularity will change.
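
To make the granularity point concrete, here is a minimal Python sketch of how the choice of symbols changes the entropy you measure. It is not the authors' method. The toy melody, the function names, and the `zipf_deviation` measure are all my own assumptions for illustration. In particular, `zipf_deviation` is only a simple stand-in for the idea of measuring how far a frequency-ranked profile sits from a perfect Zipf profile (assumed here to be f(1)/rank); it is not the paper's "2nd Order Entropy".

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Shannon entropy, in bits per symbol, of a sequence of hashable symbols."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def zipf_deviation(symbols):
    """Mean absolute log2 gap between the ranked symbol frequencies and an
    idealized Zipf profile f(1)/rank.

    Illustrative stand-in only; NOT the paper's 2nd Order Entropy.
    """
    ranked = sorted(Counter(symbols).values(), reverse=True)
    top = ranked[0]
    gaps = [
        abs(math.log2(freq) - math.log2(top / rank))
        for rank, freq in enumerate(ranked, start=1)
    ]
    return sum(gaps) / len(gaps)


# Hypothetical toy melody as (pitch, duration) pairs; not taken from any MIDI file.
melody = [
    ("C4", 1), ("C4", 2), ("E4", 1), ("G4", 1),
    ("C4", 1), ("E4", 2), ("C4", 1), ("G4", 2),
]

fine = melody                            # subjects: pitch AND duration
coarse = [pitch for pitch, _ in melody]  # subjects: pitch only

print(f"fine:   {shannon_entropy(fine):.2f} bits/symbol")    # 2.41
print(f"coarse: {shannon_entropy(coarse):.2f} bits/symbol")  # 1.50
print(f"coarse Zipf deviation: {zipf_deviation(coarse):.3f}")
```

Same melody, two sets of subjects, two different entropies. Neither number is "the" entropy of the piece; each is the entropy of the description you chose to make.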