The 50 Most Innovative Computer Science Departments in the U.S. by Yusuf Laher.

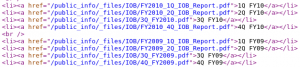

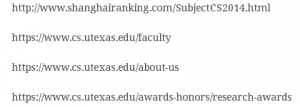

A great resource, but the additional links were written as plain text rather than as hyperlinks. Thus, for the University of Texas at Austin:

Compare my listing (the original included links buried in prose):

11. Department of Computer Science, University of Texas at Austin – Austin, Texas

Department of Computer Science

https://www.cs.utexas.edu/faculty

https://www.cs.utexas.edu/about-us

I deleted the prose descriptions, changed all the links into HTML links, and swept out vanity links, such as prior rankings. What’s left should be links to the departments, major projects, and faculty. It’s a rough cut but suitable for spidering and similar uses.
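For anyone repeating the cleanup, here is a minimal sketch of the text-to-hyperlink step. It assumes each link sits alone on its own line, as in the listing below. The names `URL_LINE`, `linkify_line`, and `linkify` are made up for this illustration and are not the tooling actually used for this post.

```python
import html
import re

# Assumption: a "bare link" is a line consisting solely of one http(s) URL.
URL_LINE = re.compile(r"^https?://\S+$")


def linkify_line(line: str) -> str:
    """Return an HTML anchor if the line is a bare URL, else the line unchanged."""
    stripped = line.strip()
    if URL_LINE.match(stripped):
        # Escape the URL so characters such as & are safe inside the attribute.
        href = html.escape(stripped, quote=True)
        return f'<a href="{href}">{href}</a>'
    return line


def linkify(text: str) -> str:
    """Apply linkify_line to every line of a multi-line listing."""
    return "\n".join(linkify_line(line) for line in text.splitlines())
```

Calling `linkify_line("https://www.cs.utexas.edu/faculty")` wraps the URL in an anchor tag, while a label such as "Department of Computer Science" passes through untouched.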

50. Department of Computer Science, University of Arkansas at Little Rock – Little Rock, Arkansas

Department of Computer Science

http://ualr.edu/eit/

http://ualr.edu/computerscience/prospective-students/facilities/

49. School of Informatics and Computing, Indiana University – Bloomington, Indiana

School of Informatics and Computing

Student Technology Centers

http://www.soic.indiana.edu/faculty-research/

http://www.soic.indiana.edu/about/

48. Department of Computer Science & Engineering, Texas A&M University – College Station, Texas

Department of Computer Science & Engineering

the High Performance Computing Laboratory

http://engineering.tamu.edu/cse/

47. School of Computing, Informatics and Decision Systems Engineering, Arizona State University – Tempe, Arizona

School of Computing, Informatics and Decision Systems Engineering

Center for Excellence in Logistics and Distribution

http://cidse.engineering.asu.edu/facultyandresearc/research-centers/

46. Department of Computer Science, The University of North Carolina at Chapel Hill – Chapel Hill, North Carolina

Department of Computer Science

https://www.cs.unc.edu/cms/research/research-laboratories

45. Department of Computer Science, Rutgers University – Piscataway, New Jersey

Department of Computer Science

Hack R Space

44. Department of Computer Science, Stony Brook University – Stony Brook, New York

Stony Brook University

Center of Excellence in Wireless and Information Technology

https://www.cs.stonybrook.edu/research

43. Department of Computer Science & Engineering, Washington University in St. Louis – St. Louis, Missouri

Department of Computer Science & Engineering

Cyber-Physical Systems Lab

Stream-based Supercomputing Lab

http://cse.wustl.edu/aboutthedepartment/Pages/history.aspx

http://cse.wustl.edu/Research/

42. Department of Computer Science, Purdue University – West Lafayette, Indiana

Department of Computer Science

Center for Integrated Systems in Aerospace

https://www.cs.purdue.edu/research/centers.html

http://www.purdue.edu/discoverypark/cri/research/projects.php

41. Computer Science Department, New York University – New York City, New York

Computer Science Department

40. Electrical Engineering and Computer Science, Northwestern University – Evanston, Illinois

Electrical Engineering and Computer Science department

http://eecs.northwestern.edu/2013-09-03-20-01-56/researchgroupsandlabs/

http://www.eecs.northwestern.edu/graduate-study

39. School of Computing, The University of Utah – Salt Lake City, Utah

School of Computing

Scientific Computing and Imaging Institute

http://www.cs.utah.edu/research/

http://www.cs.utah.edu/about/history/

38. Department of Computer Science, University of California, Santa Barbara – Santa Barbara, California

Department of Computer Science

Four Eyes Lab

https://www.cs.ucsb.edu/research

37. Department of Computer Science and Engineering, University of Minnesota – Minneapolis, Minnesota

Department of Computer Science and Engineering

Laboratory for Computational Science and Engineering

http://www.cs.umn.edu/department/excellence.php

http://www.cs.umn.edu/research/

36. Department of Computer Science, Dartmouth College – Hanover, New Hampshire

Department of Computer Science

Visual Learning Group

computational biology-focused Grigoryan Lab

http://web.cs.dartmouth.edu/research/projects

35. Department of Computer Science and Engineering, The Ohio State University – Columbus, Ohio

computer science programs

https://cse.osu.edu/research

34. Department of Computer Science, North Carolina State University – Raleigh, North Carolina

Department of Computer Science

Visual Experiences Lab

http://www.csc.ncsu.edu/news/

33. Computer Science Department, Boston University – Boston, Massachusetts

Computer Science Department

http://www.bu.edu/hic/about-hic/

32. Department of Computer Science, University of Pittsburgh – Pittsburgh, Pennsylvania

Department of Computer Science

Pittsburgh Supercomputing Center

http://www.cs.pitt.edu/research/

31. Department of Computer Science, Virginia Polytechnic Institute and State University – Blacksburg, Virginia

computer science programs

30. Department of Computer Science, University of California, Davis – Davis, California

Department of Computer Science

http://www.cs.ucdavis.edu/index.html

http://www.cs.ucdavis.edu/research/index.html

http://www.cs.ucdavis.edu/iap/index.html

29. Department of Computer Science and Engineering, Pennsylvania State University – University Park, Pennsylvania

Department of Computer Science and Engineering

Institute for CyberScience

https://www.cse.psu.edu/research

http://ics.psu.edu/what-we-do/

28. Department of Computer Science, Johns Hopkins University – Baltimore, Maryland

Department of Computer Science

boundary-crossing areas

Center for Encrypted Functionalities

http://www.cs.jhu.edu/research/

27. School of Computer Science, University of Massachusetts Amherst – Amherst, Massachusetts

School of Computer Science

https://www.cs.umass.edu/faculty/

26. Department of Computer Science, University of Illinois at Chicago – Chicago, Illinois

Department of Computer Science

25. Rice University Computer Science, Rice University – Houston, Texas

computer science department

24. Department of Computer Science, Donald Bren School of Information and Computer Sciences, University of California, Irvine – Irvine, California

Donald Bren School of Information and Computer Sciences

Center for Emergency Response Technologies

Center for Machine Learning and Intelligent Systems

Institute for Virtual Environments and Computer Games

http://uci.edu/academics/ics.php

http://www.ics.uci.edu/faculty/

23. Department of Computer Science, University of Maryland – College Park, Maryland

Department of Computer Science

Institute for Advanced Computer Studies

https://www.cs.umd.edu/research

22. Brown Computer Science, Brown University – Providence, Rhode Island

computer science department

Center for Computational Molecular Biology

Center for Vision Research

http://cs.brown.edu/research/

21. Department of Computer Science, University of Chicago – Chicago, Illinois

Department of Computer Science

http://www.cs.uchicago.edu/research/labs

20. Department of Computer and Information Science, University of Pennsylvania – Philadelphia, Pennsylvania

Department of Computer and Information Science

Electronic Numerical Integrator and Computer

http://www.seas.upenn.edu/about-seas/eniac/

19. Department of Computer Science and Engineering, University of California, San Diego – La Jolla, California

Department of Computer Science and Engineering

18. Department of Computer Science, University of Southern California – Los Angeles, California

Department of Computer Science

http://www.cs.usc.edu/research/centers-and-institutes.htm

17. Department of Electrical Engineering and Computer Science, University of Michigan – Ann Arbor, Michigan

Department of Electrical Engineering and Computer Science

http://www.eecs.umich.edu/eecs/research/reslabs.html

16. Department of Computer Sciences, University of Wisconsin-Madison – Madison, Wisconsin

Department of Computer Sciences

https://www.cs.wisc.edu/people/emeritus-faculty

15. College of Computing, Georgia Institute of Technology – Atlanta, Georgia

College of Computing

Center for Robotics and Intelligent Machines

Georgia Tech Information Security Center

http://www.cse.gatech.edu/research

14. Department of Computer Science, Yale University – New Haven, Connecticut

Department of Computer Science

http://cpsc.yale.edu/our-research

13. Department of Computing + Mathematical Sciences, California Institute of Technology – Pasadena, California

Department of Computing + Mathematical Sciences

Annenberg Center

http://www.cms.caltech.edu/research/

12. Department of Computer Science, University of Illinois at Urbana-Champaign – Urbana, Illinois

Department of Computer Science

http://cs.illinois.edu/research/research-centers

11. Department of Computer Science, University of Texas at Austin – Austin, Texas

Department of Computer Science

https://www.cs.utexas.edu/faculty

10. Department of Computer Science, Cornell University – Ithaca, New York

Department of Computer Science

Juris Hartmanis

http://www.cs.cornell.edu/research

http://www.cs.cornell.edu/people/faculty

9. UCLA Computer Science Department, University of California, Los Angeles – Los Angeles, California

Computer Science Department

http://www.cs.ucla.edu/people/faculty

http://www.cs.ucla.edu/research/research-labs

8. Department of Computer Science, Princeton University – Princeton, New Jersey

computer science department

http://www.cs.princeton.edu/research/areas

7. Computer Science Division, University of California, Berkeley – Berkeley, California

Computer Science Division

6. Harvard School of Engineering and Applied Sciences, Harvard University – Cambridge, Massachusetts

School of Engineering and Applied Sciences

http://www.seas.harvard.edu/faculty-research/

5. Carnegie Mellon School of Computer Science, Carnegie Mellon University – Pittsburgh, Pennsylvania

School of Computer Science

http://www.cs.cmu.edu/directory/

4. Computer Science & Engineering, University of Washington – Seattle, Washington

Computer Science & Engineering

Paul G. Allen Center for Computer Science & Engineering

https://www.cs.washington.edu/research/

3. Department of Computer Science, Columbia University – New York City, New York

The Fu Foundation School of Engineering and Applied Science

http://engineering.columbia.edu/graduateprograms/

http://www.cs.columbia.edu/people/faculty

2. Computer Science Department, Stanford University – Stanford, California

Computer Science Department

http://www-cs.stanford.edu/research/

1. MIT Electrical Engineering & Computer Science, Massachusetts Institute of Technology – Cambridge, Massachusetts

Electrical Engineering & Computer Science

Computer Science and Artificial Intelligence Laboratory

Suggestions for further uses of this listing welcome! (Or grab a copy and use it yourself. Please send a link to what you make out of it. Thanks!)
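As one possible starting point for a spider, the sketch below groups the URLs in a plain-text copy of the listing under their ranked entries. It assumes each entry starts with a line of the form "NN. Name" and each URL sits on its own line. The names `ENTRY`, `URL`, and `group_urls` are hypothetical, chosen only for this example.

```python
import re

# Assumption: entries start with "<rank>. <name>"; URLs occupy whole lines.
ENTRY = re.compile(r"^(\d+)\.\s+(.*)$")
URL = re.compile(r"^https?://\S+$")


def group_urls(text: str) -> dict:
    """Map each rank to its entry name and the bare URLs listed under it."""
    groups = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        entry = ENTRY.match(line)
        if entry:
            current = int(entry.group(1))
            groups[current] = {"name": entry.group(2), "urls": []}
        elif current is not None and URL.match(line):
            groups[current]["urls"].append(line)
    return groups
```

The resulting dictionary can seed a crawl queue, one department at a time. Lines that are neither entry headings nor URLs (lab names, for instance) are simply skipped.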
