Awesome Deep Learning by Christos Christofidis.

Tweeted by Gregory Piatetsky as:

Awesome Curated #DeepLearning resources on #GitHub: books, courses, lectures, researchers…

What will you find there? (As of 28 December 2015):

- Courses – 15

- Datasets – 114

- Free Online Books – 8

- Frameworks – 35

- Miscellaneous – 26

- Papers – 32

- Researchers – 96

- Tutorials – 13

- Videos and Lectures – 16

- Websites – 24

By my count, that’s 359 resources.

We know from detailed analysis of PubMed search logs, that 80% of searchers choose a link from the first twenty “hits” returned for a search.

You could assume that out of “23 million user sessions and more than 58 million user queries” PubMed searchers and/or PubMed itself or both transcend the accuracy of searching observed in other contexts. That seems rather unlikely.

The authors note:

…

Two interesting phenomena are observed: first, the number of clicks for the documents in the later pages degrades exponentially (Figure 8). Second, PubMed users are more likely to click the first and last returned citation of each result page (Figure 9). This suggests that rather than simply following the retrieval order of PubMed, users are influenced by the results page format when selecting returned citations.

…

Result page format seems like a poor basis for choosing search results, in addition to being in the top twenty (20) results.

Eliminating all the cruft from search results to give you 359 resources is a value-add, but what value-add should added to this list of resources?

What are the top five (5) value-adds on your list?

Serious question because we have tools far beyond what were available to curators in the 1960’s but there is little (if any) curation to match of the Reader’s Guide to Periodical Literature.

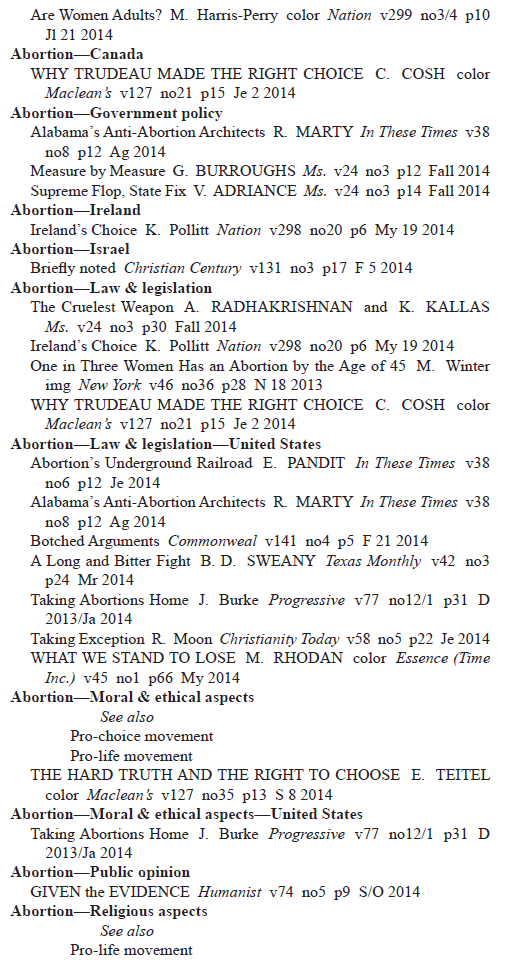

There are sample pages from the 2014 Reader’s Guide to Periodical Literature online.

Here is a screen-shot of some of its contents:

If you can, tell me what search you would use to return that sort of result for “abortion” as a subject.

Nothing come to mind?

Just to get you started, would pointing to algorithms across these 359 resources be helpful? Would you want to know more than algorithm N occurs in resource Y? Some of the more popular ones may occur in every resource. How helpful is that?

So I repeat my earlier question:

What are the top five (5) value-adds on your list?

Please forward, repost, reblog, tweet. Thanks!