In its mindless pursuit of the marginal and irrelevant, the EU is ramping up pressure on tech companies to censor more speech.

Security Union: Commission steps up efforts to tackle illegal content online

Brussels, 28 September 2017

The Commission is presenting today guidelines and principles for online platforms to increase the proactive prevention, detection and removal of illegal content inciting hatred, violence and terrorism online.

…

As a first step to effectively fight illegal content online, the Commission is proposing common tools to swiftly and proactively detect, remove and prevent the reappearance of such content:

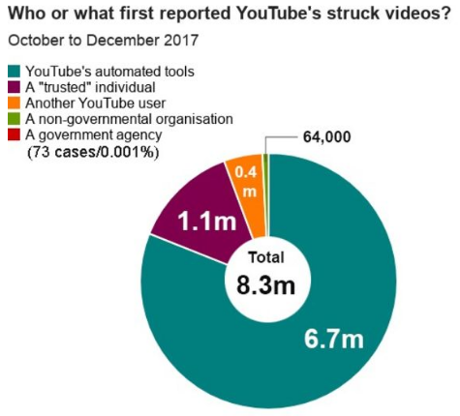

- Detection and notification: Online platforms should cooperate more closely with competent national authorities, by appointing points of contact to ensure they can be contacted rapidly to remove illegal content. To speed up detection, online platforms are encouraged to work closely with trusted flaggers, i.e. specialised entities with expert knowledge on what constitutes illegal content. Additionally, they should establish easily accessible mechanisms to allow users to flag illegal content and to invest in automatic detection technologies.

- Effective removal: Illegal content should be removed as fast as possible, and can be subject to specific timeframes, where serious harm is at stake, for instance in cases of incitement to terrorist acts. The issue of fixed timeframes will be further analysed by the Commission. Platforms should clearly explain to their users their content policy and issue transparency reports detailing the number and types of notices received. Internet companies should also introduce safeguards to prevent the risk of over-removal.

- Prevention of re-appearance: Platforms should take measures to dissuade users from repeatedly uploading illegal content. The Commission strongly encourages the further use and development of automatic tools to prevent the re-appearance of previously removed content.

… (emphasis in original)

Taking Twitter as an example, the most generous description of the EU's terrorism concerns is coke-fueled fantasies.

Twitter Terrorism By The Numbers

Don’t take my claims about Twitter as true without evidence, such as statistics gathered on Twitter and Twitter’s own reports!

Twitter Statistics:

Total Number of Monthly Active Twitter Users: 328 million (as of 8/12/17)

Total Number of Tweets sent per Day: 500 million (as of 1/24/17)

Number of Twitter Daily Active Users: 100 million (as of 1/24/17)

Government terms of service (TOS) reports, Jan 1 – Jun 30, 2017

338 reports, covering 1200 accounts, for promotion of terrorism.

Got that? From Twitter’s official report, 1200 accounts suspended for promotion of terrorism.

I read that as 1200 accounts out of 328 million monthly users.

Aren’t you just shaking in your boots?

But it gets better. Twitter has a note on promotion of terrorism:

During the reporting period of January 1, 2017 through June 30, 2017, a total of 299,649 accounts were suspended for violations related to promotion of terrorism, which is down 20% from the volume shared in the previous reporting period. Of those suspensions, 95% consisted of accounts flagged by internal, proprietary spam-fighting tools, while 75% of those accounts were suspended before their first tweet. The Government TOS reports included in the table above represent less than 1% of all suspensions in the reported time period and reflect an 80% reduction in accounts reported compared to the previous reporting period.

We have suspended a total of 935,897 accounts in the period of August 1, 2015 through June 30, 2017.

That’s more than the 1200 reported by governments, but compare the 935,897 total against 328 million monthly users: even assuming all those suspensions were warranted (more on that in a minute), “terrorism” accounts were less than 1/3 of 1% of Twitter’s monthly active users.
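For anyone who wants to check the arithmetic, here is a quick sketch using only the figures quoted above. One caveat: the 935,897 figure is cumulative (August 2015 through June 2017) while 328 million is a monthly snapshot, so these ratios are rough order-of-magnitude comparisons, not precise rates. The constant and function names are my own, purely for illustration.

```python
# Figures as quoted above from Twitter's statistics and transparency report.
MONTHLY_ACTIVE_USERS = 328_000_000  # as of 8/12/17
GOV_TOS_ACCOUNTS = 1_200            # government TOS reports, Jan-Jun 2017
SUSPENDED_H1_2017 = 299_649         # all terrorism suspensions, Jan-Jun 2017
SUSPENDED_TOTAL = 935_897           # Aug 1, 2015 through Jun 30, 2017

# "1/3 of 1%" expressed in percent units.
ONE_THIRD_OF_ONE_PERCENT = 1 / 3


def percent_of_users(accounts: int, users: int = MONTHLY_ACTIVE_USERS) -> float:
    """Return accounts as a percentage of users.

    Rough comparison only: the suspension counts cover different
    periods than the single monthly user snapshot.
    """
    return 100 * accounts / users


for label, count in [
    ("government TOS reports", GOV_TOS_ACCOUNTS),
    ("suspensions, Jan-Jun 2017", SUSPENDED_H1_2017),
    ("suspensions, Aug 2015-Jun 2017", SUSPENDED_TOTAL),
]:
    print(f"{label}: {percent_of_users(count):.4f}% of monthly active users")

# Government reports as a share of all Jan-Jun 2017 suspensions
# (Twitter says "less than 1%").
print(f"gov share of suspensions: {100 * GOV_TOS_ACCOUNTS / SUSPENDED_H1_2017:.2f}%")

# Is the cumulative total under 1/3 of 1% of monthly users?
print(percent_of_users(SUSPENDED_TOTAL) < ONE_THIRD_OF_ONE_PERCENT)
```

Even the cumulative, nearly two-year total works out to roughly 0.29% of a single month's active users.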

The EU is urging more pro-active censorship over less than 1/3 of 1% of all Twitter accounts.

Please help the EU find something more trivial and less dangerous to harp on.

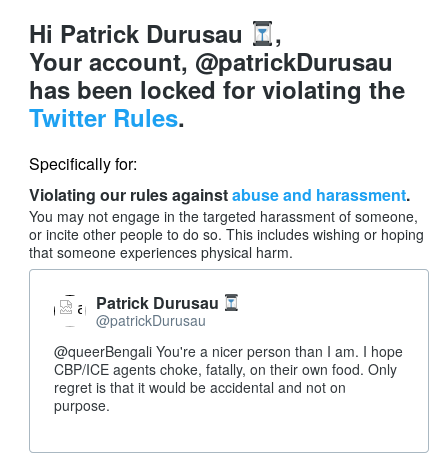

The Dangers of Twitter Censorship

Known Unknowns: An Analysis of Twitter Censorship in Turkey by Rima S. Tanash et al. studies Twitter censorship in Turkey:

Twitter, widely used around the world, has a standard interface for government agencies to request that individual tweets or even whole accounts be censored. Twitter, in turn, discloses country-by-country statistics about this censorship in its transparency reports as well as reporting specific incidents of censorship to the Chilling Effects web site. Twitter identifies Turkey as the country issuing the largest number of censorship requests, so we focused our attention there. Collecting over 20 million Turkish tweets from late 2014 to early 2015, we discovered over a quarter million censored tweets—two orders of magnitude larger than what Twitter itself reports. We applied standard machine learning / clustering techniques, and found the vast bulk of censored tweets contained political content, often critical of the Turkish government. Our work establishes that Twitter radically under-reports censored tweets in Turkey, raising the possibility that similar trends hold for censored tweets from other countries as well. We also discuss the relative ease of working around Twitter’s censorship mechanisms, although we can not easily measure how many users take such steps.

Are you surprised that:

- Censors lie about the amount of censoring done, or

- Censors censor material critical of governments?

It’s not only users in Turkey who have been victimized by Twitter censorship. Alfons López Tena gives great examples of unacceptable Twitter censorship in his piece Twitter has gone from bastion of free speech to global censor.

You won’t notice Twitter censorship if you don’t care about Arab world news or Catalan independence. And, after all, you really weren’t interested in those topics anyway. (sarcasm)

Next Steps

The EU wants an opaque, private party to play censor for content on a worldwide basis, all in pursuit of a gnat in the flood of social media content.

What could possibly go wrong? Nothing, as long as you don’t care about the Arab world, Catalan independence, or, well, criticism of government in general. You don’t care about those things, right? Otherwise you might be a terrorist in the eyes of the EU and Twitter.

The EU needs to be distracted from humping its own leg and promoting censorship of social media.

Suggestions?

PS: Other examples of inappropriate Twitter censorship abound, but the answer to all forms of censorship is NO. Clear, clean, easy to implement. Don’t want to see content? Filter your own feed, not mine.