TrackML Particle Tracking Challenge

Cutting to the chase:

… can machine learning assist high energy physics in discovering and characterizing new particles?

Details follow:

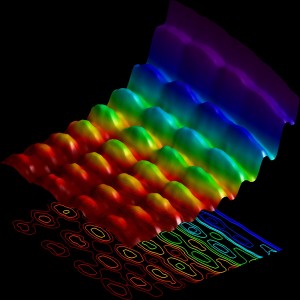

To explore what our universe is made of, scientists at CERN are colliding protons, essentially recreating mini big bangs, and meticulously observing these collisions with intricate silicon detectors.

While orchestrating the collisions and observations is already a massive scientific accomplishment, analyzing the enormous amounts of data produced by the experiments is becoming an overwhelming challenge.

Event rates have already reached hundreds of millions of collisions per second, meaning physicists must sift through tens of petabytes of data per year. And as the resolution of detectors improves, producing even more data, ever better software is needed for real-time pre-processing and filtering of the most promising events.

To help address this problem, a team of Machine Learning experts and physicists working at CERN (the world's largest high energy physics laboratory) has partnered with Kaggle and prestigious sponsors to answer the question: can machine learning assist high energy physics in discovering and characterizing new particles?

Specifically, in this competition, you’re challenged to build an algorithm that quickly reconstructs particle tracks from 3D points left in the silicon detectors. This challenge consists of two phases:

- The Accuracy phase will run on Kaggle from May to July 2018. Here we’ll be focusing on the highest score, irrespective of the evaluation time. This phase is an official IEEE WCCI competition (Rio de Janeiro, Jul 2018).

- The Throughput phase will run on Codalab from July to October 2018. Participants will submit their software, which is evaluated by the platform. The incentive is on the throughput (or speed) of the evaluation while still reaching a good score. This phase is an official NIPS competition (Montreal, Dec 2018).

All the necessary information for the Accuracy phase is available here on the Kaggle site. The overall TrackML challenge web site is there.

I know you breathed a sigh of relief upon reading [Non-Twitter Big Data].

There’s nothing wrong with using Twitter to practice big data techniques, but at the end of the day, at best some advertiser can micro-tweak an advertisement for a loser (pronounced “user”). There’s no real bang from that “achievement.”

Unlike tweaking ad targeting, a viable solution to this challenge may make a fundamental difference in high energy physics.

Would you rather be known as an ad tweaker or for advancing ML in high energy physics?

Your call.