OpenGamma updates its open source financial analytics platform

From the post:

OpenGamma has released version 1.2 of its open source financial analytics and risk management platform. Released as Apache 2.0 licensed open source in April, the Java-based platform offers an architecture for delivering real-time available trading and risk analytics for front-office traders, quants, and risk managers.

Version 1.2 includes a newly rebuilt beta of a new web GUI offering multi-pane analytics views with drag and drop panels, independent pop-out panels, multi-curve and surface viewers, and intelligent tab handling. Copy and paste is now more extensive and is capable of handling complex structures.

Underneath, the Analytics Library has been expanded to include support for Credit Default Swaps, Extended Futures, Commodity Futures and Options databases, and equity volatility surfaces. Data Management has improved robustness with schema checking on production systems and an auto-upgrade tool being added to handle restructuring of the futures/forwards database. The market and reference data’s live system now uses OpenGamma’s own component system. The Excel Integration module has also been enhanced and thanks to a backport now works with Excel 2003. A video shows the Excel module in action:

OpenGamma bills integration with its platform as follows:

While true green-field development does exist in financial services, it’s exceptionally rare. Firms already have a variety of trade processing, analytics, and risk systems in place. They may not support your current requirements, or may be lacking in capabilities/flexibility; but no firm can or should simply throw them all away and start from scratch.

We think risk technology architecture should be designed to use and complement systems already supporting traders and risk managers. Whether proprietary or vendor solutions, considerable investments have been made in terms of time and money. Discarding them and starting from scratch risks losing valuable data and insight, and adds to the cost of rebuilding.

That being said, a primary goal of any project rethinking analytics or risk computation needs to be the elimination of all the problems siloed, legacy systems have: duplication of technology, lack of transparency, reconciliation difficulties, inefficient IT resourcing, etc.

The OpenGamma Platform was built from scratch specifically to integrate with any legacy data source, analytics library, trading system, or market data feed. Once that integration is done against our rich set of APIs and network endpoints, you can make use of it across any project based on the OpenGamma Platform.

Being able to access legacy or even current data sources is a very valuable approach to integration.

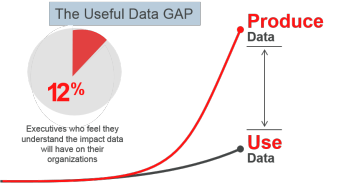

But that leaves the undocumented semantics of data from those feeds on the cutting room floor.

The unspoken semantics of data from integrated feeds is like dry rot just waiting to make its presence known.

Suddenly and at the worst possible moment.

Compare that to documented data identity and semantics, which enables reliable re-use/merging of data from multiple sources.
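To make the contrast concrete, here is a minimal sketch of what merging on documented identity might look like. It is not OpenGamma code and uses no OpenGamma API; the feed names, local keys, subject identifiers, and the `merge_by_identity` helper are all hypothetical, invented only to illustrate the idea.

```python
from collections import defaultdict

# Hypothetical documented identity rules:
# (feed name, feed-local key) -> shared subject identifier.
# Every name and identifier here is invented for illustration.
IDENTITY_MAP = {
    ("feed_a", "ACME.X"): "subject:acme-ordinary",
    ("feed_b", "ACME XX"): "subject:acme-ordinary",
    ("feed_a", "GLOBEX.X"): "subject:globex-ordinary",
}


def merge_by_identity(records):
    """Group records from several feeds under their documented subject.

    records: iterable of (feed, local_key, fields) tuples, where fields
    is a dict of field name -> value.
    Returns (merged, unresolved). merged maps each subject identifier to
    a dict of field name -> list of (feed, value) pairs, so the source of
    every value stays visible. unresolved lists the (feed, local_key)
    pairs that have no documented identity.
    """
    merged = defaultdict(dict)
    unresolved = []
    for feed, local_key, fields in records:
        subject = IDENTITY_MAP.get((feed, local_key))
        if subject is None:
            # No documented identity: report it rather than guess.
            unresolved.append((feed, local_key))
            continue
        for name, value in fields.items():
            merged[subject].setdefault(name, []).append((feed, value))
    return dict(merged), unresolved


sample = [
    ("feed_a", "ACME.X", {"last_price": 10.5}),
    ("feed_b", "ACME XX", {"last_price": 10.6, "currency": "USD"}),
    ("feed_b", "MYSTERY", {"last_price": 1.0}),
]
merged, unresolved = merge_by_identity(sample)
```

The point of the sketch is the explicit `IDENTITY_MAP`: the two feeds disagree on what to call the same instrument, and the merge is only reliable because that equivalence is written down. Records with no documented identity are reported rather than silently matched.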

So we are clear, I am not suggesting a topic maps platform with financial analytics capabilities.

I am suggesting incorporation of topic map capabilities into existing applications, such as OpenGamma.

That would take data integration to a whole new level.