Version 2 of the Hubble Source Catalog

From the post:

The Hubble Source Catalog (HSC) is designed to optimize science from the Hubble Space Telescope by combining the tens of thousands of visit-based source lists in the Hubble Legacy Archive (HLA) into a single master catalog.

Version 2 includes:

- Four additional years of ACS source lists (i.e., through June 9, 2015). All ACS source lists go deeper than in version 1. See current HLA holdings for details.

- One additional year of WFC3 source lists (i.e., through June 9, 2015).

- Cross-matching between HSC sources and spectroscopic COS, FOS, and GHRS observations.

- Availability of magauto values through the MAST Discovery Portal. The maximum number of sources displayed has increased from 10,000 to 50,000.

The HSC v2 contains members of the WFPC2, ACS/WFC, WFC3/UVIS and WFC3/IR Source Extractor source lists from HLA version DR9.1 (data release 9.1). The crossmatching process involves adjusting the relative astrometry of overlapping images so as to minimize positional offsets between closely aligned sources in different images. After correction, the astrometric residuals of crossmatched sources are significantly reduced, to typically less than 10 mas. The relative astrometry is supported by using Pan-STARRS, SDSS, and 2MASS as the astrometric backbone for initial corrections. In addition, the catalog includes source nondetections. The crossmatching algorithms and the properties of the initial (Beta 0.1) catalog are described in Budavari & Lubow (2012).
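To make the idea of "adjusting the relative astrometry" concrete, here is a minimal sketch, not the HSC algorithm itself (that is described in Budavari & Lubow (2012)). It assumes the simplest possible case: one image differs from a reference list by a constant shift, which is estimated as the median offset between nearest-neighbour pairs. The function names, the 500 mas matching radius, and the brute-force matching are all illustrative assumptions.

```python
import math
from statistics import median

MAS_PER_DEG = 3.6e6  # milliarcseconds per degree


def estimate_offset(ref, img, max_sep_mas=500.0):
    """Estimate the (east, north) shift in mas that moves `img` onto `ref`.

    `ref` and `img` are lists of (ra_deg, dec_deg) tuples. Hypothetical
    helper: assumes a pure translation and a small field of view.
    """
    east, north = [], []
    for ra, dec in img:
        cos_dec = math.cos(math.radians(dec))
        best = None
        # Brute-force nearest neighbour within the matching radius.
        for ref_ra, ref_dec in ref:
            dx = (ref_ra - ra) * cos_dec * MAS_PER_DEG
            dy = (ref_dec - dec) * MAS_PER_DEG
            sep = math.hypot(dx, dy)
            if sep <= max_sep_mas and (best is None or sep < best[0]):
                best = (sep, dx, dy)
        if best is not None:
            east.append(best[1])
            north.append(best[2])
    if not east:
        return 0.0, 0.0  # nothing matched, so apply no correction
    # The median is robust against a few wrong pairings.
    return median(east), median(north)


def apply_offset(img, dx_mas, dy_mas):
    """Shift every (ra, dec) in `img` by the given offsets in mas."""
    shifted = []
    for ra, dec in img:
        cos_dec = math.cos(math.radians(dec))
        shifted.append((ra + dx_mas / (MAS_PER_DEG * cos_dec),
                        dec + dy_mas / MAS_PER_DEG))
    return shifted
```

Calling `estimate_offset(ref, img)` and passing the result to `apply_offset` pulls the image's sources onto the reference positions. A real pipeline would also have to deal with rotation, scale, and many overlapping images at once.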

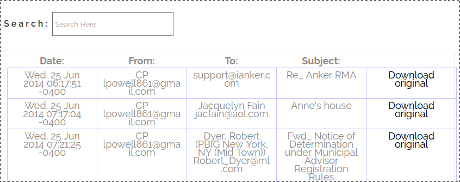

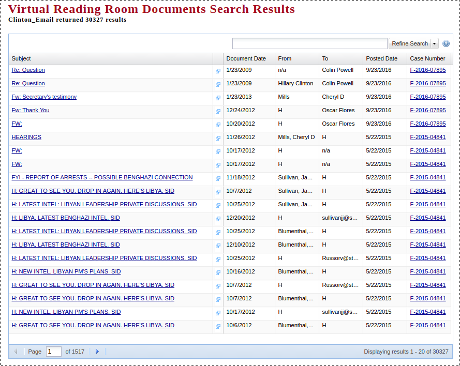

Interfaces

There are currently three ways to access the HSC as described below. We are working towards having these interfaces consolidated into one primary interface, the MAST Discovery Portal.

- The MAST Discovery Portal provides one-stop web access to a wide variety of astronomical data. To access the Hubble Source Catalog v2 through this interface, select Hubble Source Catalog v2 in the Select Collection dropdown, enter your search target, click search, and you are on your way. Please try the use case "Using the Discovery Portal to Query the HSC."

- The HSC CasJobs interface permits you to run large and complex queries, phrased in the Structured Query Language (SQL).

- HSC Home Page

  - The HSC Summary Search Form displays a single row entry for each object, as defined by a set of detections that have been cross-matched and hence are believed to be a single object. Averaged values for magnitudes and other relevant parameters are provided.

  - The HSC Detailed Search Form displays an entry for each separate detection (or nondetection if nothing is found at that position) using all the relevant Hubble observations for a given object (i.e., different filters, detectors, separate visits).…
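The difference between the two search forms comes down to one row per detection versus one row per matched object. As a rough sketch of that relationship (the field names `match_id`, `filter`, and `mag` are hypothetical, not the catalog's actual column names, and a plain mean stands in for whatever averaging the HSC really uses):

```python
from collections import defaultdict
from statistics import mean


def summarize(detections):
    """Collapse detection-level rows into one summary row per object.

    Each detection is a dict with hypothetical keys 'match_id', 'filter',
    and 'mag'; a nondetection is represented here by mag=None.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for det in detections:
        per_filter = grouped[det["match_id"]]  # ensures the object is listed
        if det["mag"] is not None:
            per_filter[det["filter"]].append(det["mag"])
    # One entry per object, holding the mean magnitude in each filter.
    return {
        match_id: {flt: mean(mags) for flt, mags in per_filter.items()}
        for match_id, per_filter in grouped.items()
    }


detailed_rows = [
    {"match_id": 1, "filter": "F606W", "mag": 21.30},
    {"match_id": 1, "filter": "F606W", "mag": 21.34},
    {"match_id": 1, "filter": "F814W", "mag": None},  # nondetection
    {"match_id": 2, "filter": "F814W", "mag": 19.80},
]
summary_rows = summarize(detailed_rows)
```

Here `detailed_rows` plays the role of the detailed view and `summary_rows` the summary view: object 1 ends up with a single averaged F606W magnitude, and its F814W nondetection contributes no value.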

Amazing, isn’t it?

The astronomy community long ago vanquished data hoarding and built tools that avoid moving very large data sets across the network, all while enabling more, not less, access to and research with the data.

Contrast that with the sorry state of security research, where example code is condemned, if not actually prohibited by law.

Yet, if you believe current news reports (always an iffy proposition), cybercrime is growing by leaps and bounds. (PwC Study: Biggest Increase in Cyberattacks in Over 10 Years)

How successful is the “data hoarding” strategy of the security research community?