"Should Everybody Learn to Code?" by Esther Shein.

Interesting essay, but most of the suggestions read like this one:

Just as students are taught reading, writing, and the fundamentals of math and the sciences, computer science may one day become a standard part of a K–12 school curriculum. If that happens, there will be significant benefits, observers say. As the kinds of problems we will face in the future will continue to increase in complexity, the systems being built to deal with that complexity will require increasingly sophisticated computational thinking skills, such as abstraction, decomposition, and composition, says Wing.

“If I had a magic wand, we would have some programming in every science, mathematics, and arts class, maybe even in English classes, too,” says Guzdial. “I definitely do not want to see computer science on the side … I would have computer science in every high school available to students as one of their required science or mathematics classes.”

But university CS programs, for the most part, don't teach people to code. Rather, they teach computer science in the abstract.

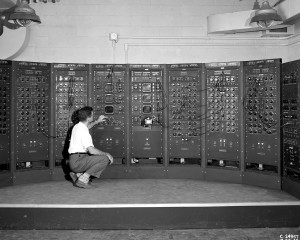

Moreover, coding practice isn’t necessary to contribute to computer science, as illustrated in “History of GIS and Early Computer Cartography Project,” by John Hessler, Cartographic Specialist, Geography and Map Division, Library of Congress.

As part of a project to collect early GIS materials, the following was discovered in an archive:

One set of papers in particular, which deserves much more attention from today’s mapmakers, historians, and those interested in the foundations of current geographic thought, is the Harvard Papers in Theoretical Geography. These papers, subtitled, “Geography and the properties of surfaces,” detail the lab’s early experiments in the computer analysis of cartographic problems. They also give insight into the theoretical thinking of many early researchers as they experimented with theorems from algebraic topology, complex spatial analysis algorithms, and various forms of abstract algebras to redefine the map as a mathematical tool for geographic analysis. Reading some of the titles in the series today, for example, “Hyper-surfaces and Geodesic Lines in 4-D Euclidean Space and The Sandwich Theorem: A Basic One for Geography,” gives one a sense of the experimentation and imaginative thinking that surrounded the breakthroughs necessary for the development of our modern computer mapping systems.

And the inspiration for this work?

Aside from the technical aspects that archives like this reveal, they also show deeper connections with cultural and intellectual history. They demonstrate how the practitioners and developers of GIS found themselves compelled to draw both distinctions and parallels with ideas that were appearing in the contemporary scholarly literature on spatial and temporal reasoning. Their explorations into this literature was not limited to geographic ideas on lived human space but also drew on philosophy, cognitive science, pure mathematics, and fields like modal logic—all somehow to come to terms with the diverse phenomena that have spatiotemporal extent and that might be mapped and analyzed.

Coding is a measurable activity, but being measurable doesn't make it the only way to teach abstract thinking skills.

The early days of computer science, including compiler research, suggest that coding isn't required to learn abstract thinking skills.

Coding is a useful skill, but let's not confuse a skill, or even computer science, with abstract thinking skills. Abstract thinking is needed in many domains, and we will all profit from not defining it too narrowly.

I first saw this in a tweet from Tim O’Reilly, who credits Simon St. Laurent with the discovery.