Measuring the Complexity of the Law: The United States Code by Daniel Martin Katz and Michael James Bommarito II.

Abstract:

Einstein’s razor, a corollary of Ockham’s razor, is often paraphrased as follows: make everything as simple as possible, but not simpler. This rule of thumb describes the challenge that designers of a legal system face — to craft simple laws that produce desired ends, but not to pursue simplicity so far as to undermine those ends. Complexity, simplicity’s inverse, taxes cognition and increases the likelihood of suboptimal decisions. In addition, unnecessary legal complexity can drive a misallocation of human capital toward comprehending and complying with legal rules and away from other productive ends.

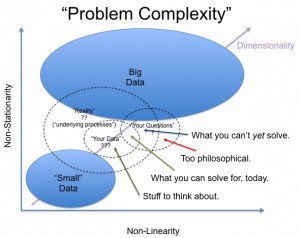

While many scholars have offered descriptive accounts or theoretical models of legal complexity, empirical research to date has been limited to simple measures of size, such as the number of pages in a bill. No extant research rigorously applies a meaningful model to real data. As a consequence, we have no reliable means to determine whether a new bill, regulation, order, or precedent substantially affects legal complexity.

In this paper, we address this need by developing a proposed empirical framework for measuring relative legal complexity. This framework is based on “knowledge acquisition,” an approach at the intersection of psychology and computer science, which can take into account the structure, language, and interdependence of law. We then demonstrate the descriptive value of this framework by applying it to the U.S. Code’s Titles, scoring and ranking them by their relative complexity. Our framework is flexible, intuitive, and transparent, and we offer this approach as a first step in developing a practical methodology for assessing legal complexity.

What do you make of this article’s treatment of the complexity of legal language?

The authors compute the number of words and the average word length in each title of the United States Code. They also calculate the Shannon entropy of each title. Those results figure in the authors’ determination of the complexity of each title.
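To make the point concrete, here is a minimal sketch of the three language measures. It assumes a "word" is a lowercased run of letters, digits, or apostrophes, and that entropy is computed over the word-frequency distribution; the paper's own tokenization and entropy definition may differ, and the function names are hypothetical. Note that nothing in it can register how a court has construed a word such as "person."

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Assumption: a word is a maximal run of letters, digits, or apostrophes,
    # lowercased. This is an illustrative choice, not the authors' tokenizer.
    return re.findall(r"[a-z0-9']+", text.lower())


def language_measures(text: str) -> dict:
    """Word count, average word length, and word-level Shannon entropy (bits)."""
    words = tokenize(text)
    n = len(words)
    if n == 0:
        return {"word_count": 0, "avg_word_length": 0.0, "entropy_bits": 0.0}
    counts = Counter(words)
    # Shannon entropy H = -sum_w p(w) * log2 p(w), where p(w) = count(w) / n.
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {
        "word_count": n,
        "avg_word_length": sum(len(w) for w in words) / n,
        "entropy_bits": entropy,
    }


if __name__ == "__main__":
    # Opening clause of 42 U.S.C. §1983, used only as sample input.
    sample = (
        "Every person who, under color of any statute, ordinance, regulation, "
        "custom, or usage, of any State or Territory or the District of "
        "Columbia, subjects, or causes to be subjected, any citizen of the "
        "United States or other person within the jurisdiction thereof to the "
        "deprivation of any rights, privileges, or immunities secured by the "
        "Constitution and laws, shall be liable to the party injured"
    )
    print(language_measures(sample))
```

Because the measures depend only on the text, anyone running the same code on the same title gets the same numbers; they are equally blind to Monroe and Monell.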

To be sure, those are all measurable aspects of each title, so in that sense the results, and the process used to reach them, can be duplicated and verified by others.

The authors are using a “knowledge acquisition model,” that is, a model of the difficulty a reader would experience in reading and acquiring knowledge about any part of the United States Code.

But reading the bare words of the U.S. Code is not a reliable way to acquire legal knowledge. Courts have debated and decided (sometimes differently) what the words in the U.S. Code mean. A reader cannot understand the U.S. Code without knowing how courts have interpreted its text.

Let me give you a short example:

42 U.S.C. §1983 reads:

Every **person** who, under color of any statute, ordinance, regulation, custom, or usage, of any State or Territory or the District of Columbia, subjects, or causes to be subjected, any citizen of the United States or other person within the jurisdiction thereof to the deprivation of any rights, privileges, or immunities secured by the Constitution and laws, shall be liable to the party injured in an action at law, suit in equity, or other proper proceeding for redress, except that in any action brought against a judicial officer for an act or omission taken in such officer’s judicial capacity, injunctive relief shall not be granted unless a declaratory decree was violated or declaratory relief was unavailable. For the purposes of this section, any Act of Congress applicable exclusively to the District of Columbia shall be considered to be a statute of the District of Columbia. (emphasis added)

Before reading the rest of this post, answer this question: Is a municipality a person for purposes of 42 U.S.C. §1983?

That is, if city employees violate your civil rights, can you sue not only them but also the city they work for?

That seems like a straightforward question. Yes?

In Monroe v. Pape, 365 U.S. 167 (1961), the Supreme Court held that the answer was no: municipalities were not “persons” for purposes of 42 U.S.C. §1983.

But a reader who knew only that decision would misread the statute today.

In Monell v. New York City Dept. of Social Services, 436 U.S. 658 (1978), the Supreme Court concluded that it had been mistaken in Monroe v. Pape and held that the answer was yes. Municipalities could be “persons” for purposes of 42 U.S.C. §1983, at least where the deprivation resulted from an official policy or custom.

The language of 42 U.S.C. §1983 did not change between 1961 and 1978. Nor did the circumstances under which section 1983 was passed (Reconstruction after the Civil War).

But the meaning of that one word changed significantly.

Many other words in the U.S. Code have had a similar experience.

If you need assistance with 42 U.S.C. §1983 or any other part of the U.S. Code or other laws, seek legal counsel.