Incredible New View of the Milky Way Is the Largest Star Map Ever

From the webpage:

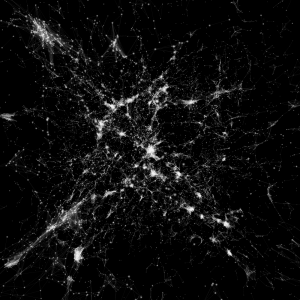

The European Space Agency’s Gaia spacecraft team has dropped its long-awaited trove of data about 1.7 billion stars. You can see a new visualization of all those stars in the Milky Way and nearby galaxies above, but you really need to zoom in to appreciate just how much stuff there is in the map. Yes, the specks are stars.

In addition to the 1.7 billion stars, this second release of data from Gaia contains the motions and color of 1.3 billion stars relative to the Sun, as well as how the stars relate to things in the distant background based on the Earth’s position. It also features radial velocities, amount of dust, and surface temperatures of lots of stars, and a catalogue of over 14,000 Solar System objects, including asteroids. There is a shitload of data in this release.
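The quoted phrase about how stars "relate to things in the distant background based on the Earth's position" describes parallax: the small apparent shift of a nearby star as the Earth orbits the Sun. As a minimal sketch of why that measurement matters, a parallax in milliarcseconds (mas) converts to a distance in parsecs by simple inversion. The function names and the example parallax of roughly 768.5 mas for Proxima Centauri are illustrative assumptions, not taken from the quoted article, and plain inversion is only reasonable when the parallax is positive and well measured.

```python
# Naive parallax-to-distance conversion: a sketch, not the Gaia team's method.
# The function names here are hypothetical, chosen only for illustration.

PARSEC_IN_LIGHT_YEARS = 3.26156  # one parsec expressed in light-years


def parallax_to_distance_pc(parallax_mas: float) -> float:
    """Return distance in parsecs for a parallax given in milliarcseconds."""
    if parallax_mas <= 0:
        # Noisy catalogue entries can have zero or negative parallaxes;
        # simple inversion is meaningless for those.
        raise ValueError("parallax must be positive for naive inversion")
    return 1000.0 / parallax_mas


def parallax_to_distance_ly(parallax_mas: float) -> float:
    """Return distance in light-years for a parallax in milliarcseconds."""
    return parallax_to_distance_pc(parallax_mas) * PARSEC_IN_LIGHT_YEARS


if __name__ == "__main__":
    # Assumed example value: Proxima Centauri, roughly 768.5 mas.
    proxima_mas = 768.5
    print(f"{parallax_to_distance_pc(proxima_mas):.3f} pc")
    print(f"{parallax_to_distance_ly(proxima_mas):.2f} ly")
```

For faint or distant stars the measurement error can be comparable to the parallax itself, so treat this as a classroom approximation, not a substitute for a proper distance estimate.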

…

I won’t mangle the images in the original post by reproducing them here.

More links:

Gaia Data Release 2: A Guide for Scientists

From the webpage:

“Gaia Data Release 2: A guide for scientists” consists of a series of videos from scientists to scientists. They are meant to give some overview of Gaia Data Release 2 and give help or guidance on the usage of the data. Also given are warnings and explanations on the limitations of the data. These videos are based on Skype interviews with the scientists.

Gaia Archive (here be big data)

Gaia is an ambitious mission to chart a three-dimensional map of our Galaxy, the Milky Way, in the process revealing the composition, formation and evolution of the Galaxy. Gaia will provide unprecedented positional and radial velocity measurements with the accuracies needed to produce a stereoscopic and kinematic census of about one billion stars in our Galaxy and throughout the Local Group. This amounts to about 1 per cent of the Galactic stellar population.
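For students who want to poke at the archive, here is a hedged sketch of how a small query might be assembled. It assumes the archive exposes a standard TAP service that accepts ADQL, that the DR2 source table is named `gaiadr2.gaia_source`, and that the synchronous endpoint lives at the URL shown. Check all three against the archive's own documentation before relying on them. The helper names are hypothetical, and the code only builds the request URL; it does not contact the server.

```python
# Sketch: build (but do not send) an ADQL query URL for the Gaia archive.
# The endpoint URL and the helper names are assumptions for illustration.
from urllib.parse import urlencode

TAP_SYNC_URL = "https://gea.esac.esa.int/tap-server/tap/sync"  # assumed endpoint


def build_adql(limit: int = 10, min_parallax_mas: float = 100.0) -> str:
    """Return an ADQL query for the nearest stars (largest parallaxes)."""
    return (
        f"SELECT TOP {int(limit)} source_id, ra, dec, parallax, phot_g_mean_mag "
        "FROM gaiadr2.gaia_source "
        f"WHERE parallax > {float(min_parallax_mas)} "
        "ORDER BY parallax DESC"
    )


def build_request_url(adql: str) -> str:
    """Return a URL carrying the standard TAP synchronous query parameters."""
    params = {
        "REQUEST": "doQuery",
        "LANG": "ADQL",
        "FORMAT": "csv",
        "QUERY": adql,
    }
    return f"{TAP_SYNC_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_request_url(build_adql()))
```

Keeping `TOP` small matters here: with more than a billion rows in the catalogue, an unbounded query is a good way to learn what "here be big data" means.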

Astronomy, data science, and computers: this sounds like a natural way to introduce all three to students!