Ontology work at the Royal Society of Chemistry by Antony Williams.

From the description:

We provide an overview of the use we make of ontologies at the Royal Society of Chemistry. Our engagement with the ontology community began in 2006 with preparations for Project Prospect, which used ChEBI and other Open Biomedical Ontologies to mark up journal articles. Subsequently Project Prospect has evolved into DERA (Digitally Enhancing the RSC Archive) and we have developed further ontologies for text markup, covering analytical methods and name reactions. Most recently we have been contributing to CHEMINF, an open-source cheminformatics ontology, as part of our work on disseminating calculated physicochemical properties of molecules via the Open PHACTS. We show how we represent these properties and how it can serve as a template for disseminating different sorts of chemical information.

A bit wordy for my taste, but it has numerous references and links to resources. Top stuff!

I had to laugh when I read slide #20:

Why a named reaction ontology?

Despite attempts to introduce systematic nomenclature for organic reactions, lots of chemists still prefer to attach human names.

Those nasty humans! Always wanting “human” names. Grrr!

Afraid so. That is going to continue in a number of disciplines.

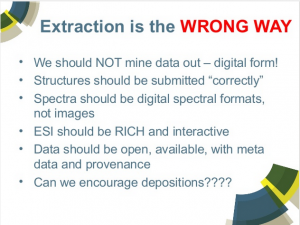

When I got to slide #29:

Ontologies as synonym sets for text-mining

it occurred to me that terms in an ontology are like base names in a topic map: the names of topics that have associations with other topics, which in turn have base names of their own.
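To make the comparison concrete, here is a minimal sketch of an ontology used as a synonym set for text-mining. It assumes a tiny hand-built vocabulary; the identifiers (`ex:acetylsalicylic-acid`, `ex:diels-alder`) and the helper names are hypothetical, not taken from ChEBI or the RSC ontologies. Each identifier carries several names, much as a topic carries several base names, and every name found in the text is resolved back to the one identifier.

```python
import re

# Hypothetical synonym sets: each term identifier carries several names,
# much as a topic carries several base names.
SYNONYMS: dict[str, list[str]] = {
    "ex:acetylsalicylic-acid": ["acetylsalicylic acid", "aspirin", "ASA"],
    "ex:diels-alder": ["Diels-Alder reaction", "Diels-Alder cycloaddition"],
}


def build_index(synonyms: dict[str, list[str]]) -> dict[str, str]:
    """Map each lower-cased name to the term identifier it belongs to."""
    index: dict[str, str] = {}
    for term_id, names in synonyms.items():
        for name in names:
            index[name.lower()] = term_id
    return index


def tag_text(text: str, index: dict[str, str]) -> list[tuple[str, str]]:
    """Return (matched string, term id) pairs found in the text."""
    # Try longer names first so "Diels-Alder cycloaddition" is matched whole.
    names = sorted(index, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(n) for n in names) + r")\b", re.IGNORECASE
    )
    return [(m.group(0), index[m.group(0).lower()]) for m in pattern.finditer(text)]
```

Calling `tag_text("Aspirin was prepared before the Diels-Alder cycloaddition.", build_index(SYNONYMS))` tags "Aspirin" and "Diels-Alder cycloaddition" with their identifiers. Note what the sketch lacks: nothing in it says *why* two names belong to the same term.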

The big difference is that ontologies are mono-views: they don’t include mapping instructions based on properties in the starting ontology, or in any other ontology to which you might map.

That is, the ontologies I have seen can only report the properties of their terms, not which properties must match for two terms to represent the same subject.

Nor do such ontologies report the properties of the subjects that serve as their properties, much less any mappings from bundles of properties to bundles of properties in other ontologies.
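As an illustration of what such mapping instructions could look like, here is a minimal sketch, not any topic map standard's API. It assumes two vocabularies that name the same property differently; the property names (`inchikey`, `std_inchi_key`), the key values, and the helper functions are all hypothetical. The mapping says which property in vocabulary B corresponds to which in vocabulary A, and the identity rule says which properties must match before two topics are treated as the same subject. Only the properties named in the mapping are translated; everything else passes through untouched.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    names: set[str]
    properties: dict[str, str] = field(default_factory=dict)


# Hypothetical mapping: property name in vocabulary B -> name in vocabulary A.
B_TO_A = {"std_inchi_key": "inchikey", "label": "name"}

# Hypothetical identity rule: these (vocabulary A) properties must all
# match for two topics to represent the same subject.
IDENTITY_PROPERTIES = ("inchikey",)


def translate(topic: Topic, mapping: dict[str, str]) -> Topic:
    """Rename properties via the mapping; unmapped properties are kept as-is."""
    return Topic(set(topic.names),
                 {mapping.get(k, k): v for k, v in topic.properties.items()})


def same_subject(a: Topic, b: Topic, keys=IDENTITY_PROPERTIES) -> bool:
    """True if every identity property is present in both topics and equal."""
    return all(k in a.properties and a.properties[k] == b.properties.get(k)
               for k in keys)


def merge(a: Topic, b: Topic) -> Topic:
    """Union the names; on conflicting property values, keep a's value."""
    return Topic(a.names | b.names, {**b.properties, **a.properties})
```

With `a = Topic({"aspirin"}, {"inchikey": "KEY-0001"})` and `b = translate(Topic({"acetylsalicylic acid"}, {"std_inchi_key": "KEY-0001"}), B_TO_A)`, `same_subject(a, b)` is true and `merge(a, b)` carries both names. The point is that the identity rule and the mapping are written down, where anyone can inspect or change them.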

I know the usual argument about the combinatorial explosion of mappings, etc., which leaves ontologists with too few arms and legs to point in all the various directions.

That argument fails to point out that, to have an “uber” ontology, someone has to do the (undisclosed) mapping from the variants to the new master ontology. And they don’t write that mapping down.

So the combinatorial explosion was present; it just didn’t get written down. Can you guess who is empowered as an expert in the new master ontology with undocumented mappings?

The other fallacy in that argument is the assumption that every possible mapping must be made. Topic maps, for example, are always partial world views. I can map as much or as little between ontologies, taxonomies, vocabularies, folksonomies, etc. as I care to do.

If I don’t want to bother mapping “thing” as the root of my topic map, I am free to omit it. All the superstructure clutter goes away, and I can focus on immediate ROI concerns.

If you want to pay for the superstructure clutter, then by all means, I’m interested!

If you have an ontology, by all means use it as a starting point for your topic map. Or if someone is willing to pay to create yet another ontology, do it. But if they need results before years of travel, debate and bad coffee, give topic maps a try!

PS: The travel, debate and bad coffee never go away for ontologies, even successful ones. Despite the desires of many, the world keeps changing, and our views of it change along with it. A static ontology is a dead ontology. The same is true for a topic map, save that agreement on its content is required only as far as it is used, and no further.