From the ANTLR about page:

ANTLR is a powerful parser generator that you can use to read, process, execute, or translate structured text or binary files. It’s widely used in academia and industry to build all sorts of languages, tools, and frameworks. Twitter search uses ANTLR for query parsing, with over 2 billion queries a day. The languages for Hive and Pig, the data warehouse and analysis systems for Hadoop, both use ANTLR. Lex Machina uses ANTLR for information extraction from legal texts. Oracle uses ANTLR within SQL Developer IDE and their migration tools. NetBeans IDE parses C++ with ANTLR. The HQL language in the Hibernate object-relational mapping framework is built with ANTLR.

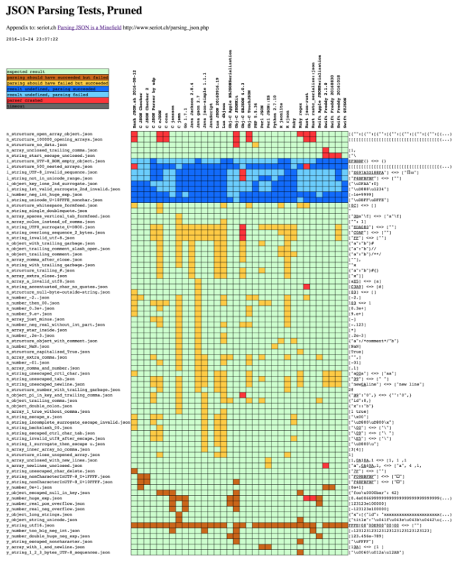

Aside from these big-name, high-profile projects, you can build all sorts of useful tools like configuration file readers, legacy code converters, wiki markup renderers, and JSON parsers. I’ve built little tools for object-relational database mappings, describing 3D visualizations, injecting profiling code into Java source code, and have even done a simple DNA pattern matching example for a lecture.

From a formal language description called a grammar, ANTLR generates a parser for that language that can automatically build parse trees, which are data structures representing how a grammar matches the input. ANTLR also automatically generates tree walkers that you can use to visit the nodes of those trees to execute application-specific code.

There are thousands of ANTLR downloads a month and it is included on all Linux and OS X distributions. ANTLR is widely used because it’s easy to understand, powerful, flexible, generates human-readable output, comes with complete source under the BSD license, and is actively supported.

… (emphasis in original)
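To make the grammar, parse tree, and tree walker idea from the quote a little more concrete, here is a minimal hand-written sketch in Python. It is not ANTLR output and does not use the ANTLR runtime. The two-rule grammar (`expr : term ('+' term)* ;` and `term : INT ;`) and every name in it (`Node`, `tokenize`, `parse_expr`, `parse_term`, `walk`, `evaluate`) are made up for illustration. It only shows the general shape: a parser builds a tree that records how the grammar matched the input, and a walker visits the nodes to run application-specific code.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Node:
    rule: str                                      # grammar rule or token type
    text: str = ""                                 # matched text (leaf nodes only)
    children: list = field(default_factory=list)   # sub-trees, in input order


def tokenize(source):
    # Pull out integers and '+' signs; anything else (such as whitespace)
    # is simply ignored in this sketch.
    return re.findall(r"\d+|\+", source)


def parse_term(tokens, pos):
    # term : INT ;
    if pos >= len(tokens) or not tokens[pos].isdigit():
        raise SyntaxError(f"expected INT at token {pos}")
    return Node("term", children=[Node("INT", tokens[pos])]), pos + 1


def parse_expr(tokens):
    # expr : term ('+' term)* ;
    node = Node("expr")
    term, pos = parse_term(tokens, 0)
    node.children.append(term)
    while pos < len(tokens) and tokens[pos] == "+":
        node.children.append(Node("PLUS", "+"))
        term, pos = parse_term(tokens, pos + 1)
        node.children.append(term)
    if pos != len(tokens):
        raise SyntaxError(f"unexpected token {tokens[pos]!r}")
    return node


def walk(node, listener):
    # Depth-first walk that hands every node to the listener callback.
    listener(node)
    for child in node.children:
        walk(child, listener)


def evaluate(tree):
    # Application-specific code: sum every INT leaf found during the walk.
    total = 0

    def on_node(node):
        nonlocal total
        if node.rule == "INT":
            total += int(node.text)

    walk(tree, on_node)
    return total


tree = parse_expr(tokenize("1 + 2 + 39"))
print(evaluate(tree))  # prints 42
```

With ANTLR itself you would write only the grammar; the parser and the walking machinery are generated for you, which is the point of the quoted passage.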

A friend wants to explore the OpenOffice schema by visualizing a parse from the Multi-Schema Validator.

ANTLR is probably more firepower than the job needs, but the extra power may encourage creative thinking. Maybe.

Enjoy!