Leaked BLM Draft May Hinder Public Access to Chemical Information

From the post:

On Feb. 8, EnergyWire released a leaked draft proposal from the U.S. Department of the Interior’s Bureau of Land Management on natural gas drilling and extraction on federal public lands. If finalized, the proposal could greatly reduce the public’s ability to protect our resources and communities. The new draft indicates a disappointing capitulation to industry recommendations.

…

The draft rule affects oil and natural gas drilling operations on the 700 million acres of public land administered by BLM, plus 56 million acres of Indian lands. This includes national forests, which are the sources of drinking water for tens of millions of Americans, national wildlife refuges, and national parks, which are widely used for recreation.

The Department of the Interior estimates that 90 percent of the 3,400 wells drilled each year on public and Indian lands use natural gas fracking, a process that pumps large amounts of water, sand, and toxic chemicals into gas wells at very high pressure to cause fissures in shale rock that contains methane gas. Fracking fluid is known to contain benzene (which causes cancer), toluene, and other harmful chemicals. Studies link fracking-related activities to contaminated groundwater, air pollution, and health problems in animals and humans.

…

If the leaked draft is finalized, the changes in chemical disclosure requirements would represent a major concession to the oil and gas industry. The rule would allow drilling companies to report the chemicals used in fracking to an industry-funded website, called FracFocus.org. Though the move by the federal government to require online disclosure is encouraging, the choice of FracFocus as the vehicle is problematic for many reasons.

First, the site is not subject to federal laws or oversight. The site is managed by the Ground Water Protection Council (GWPC) and the Interstate Oil and Gas Compact Commission (IOGCC), nonprofit intergovernmental organizations comprised of state agencies that promote oil and gas development. However, the site is paid for by the American Petroleum Institute and America’s Natural Gas Alliance, industry associations that represent the interests of member companies.

BLM would have little to no authority to ensure the quality and accuracy of the data reported directly to such a third-party website. Additionally, the data will not be accessible through the Freedom of Information Act since BLM is not collecting the information. The IOGCC has already declared that it is not subject to federal or state open records laws, despite its role in collecting government-mandated data.

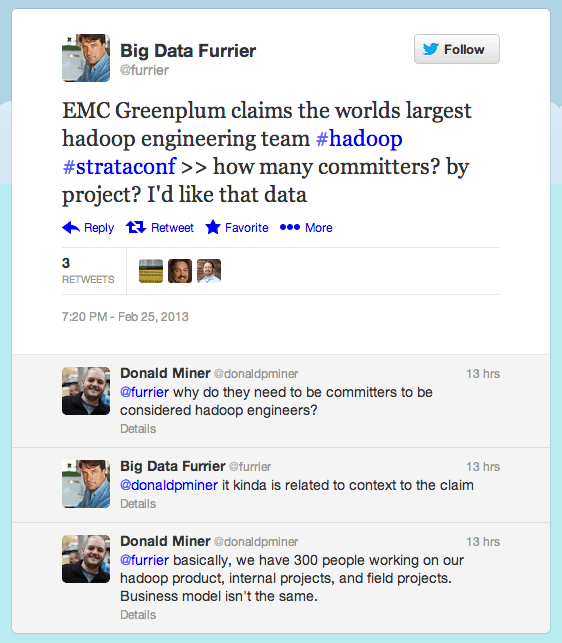

Second, FracFocus.org makes it difficult for the public to use the data on wells and chemicals. The leaked BLM proposal fails to include any provisions to ensure minimum functionality on searching, sorting, downloading, or other mechanisms to make complex data more usable. Currently, the site only allows users to download PDF files of reports on fracked wells, which makes it very difficult to analyze data in a region or track chemical use. Despite some plans to improve searching on FracFocus.org, the oil and gas industry opposes making chemical data easier to download or evaluate for fear that the public “might misinterpret it or use it for political purposes.”

Don’t you feel safer? Knowing the oil and gas industry is working so hard to protect you from misinterpreting data?

Why the government is helping the oil and gas industry protect us from data I cannot say.

I mention this as an example of testing for “transparency.”

Anything the government freely makes available with spreadsheet capabilities isn’t transparency. It’s distraction.

Any data that the government tries to hide has potential value.

The Center for Effective Government points out that these are draft rules and that, once they are published, you need to comment.

Not a bad plan but not very reassuring given the current record of President Obama, the Opaque.

Alternatives? Suggestions for how data mining could expose those who own floors of the BLM, who drill the wells, etc.?
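
As a starting point, here is a minimal sketch of the kind of tally that becomes possible once well reports are out of PDF and into rows. Everything in it is an assumption for illustration: the field names (`operator`, `county`, `chemical`), the placeholder records, and the helper functions are hypothetical and do not reflect FracFocus's actual format or any real disclosure.

```python
from collections import Counter, defaultdict

# Hypothetical records, as they might look after transcribing PDF reports.
# Field names and values are illustrative placeholders, not real data.
records = [
    {"operator": "Operator A", "county": "County X", "chemical": "benzene"},
    {"operator": "Operator A", "county": "County X", "chemical": "toluene"},
    {"operator": "Operator B", "county": "County X", "chemical": "benzene"},
    {"operator": "Operator B", "county": "County Y", "chemical": "benzene"},
]


def chemicals_by_operator(rows):
    """Count how often each operator reports each chemical."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[row["operator"]][row["chemical"]] += 1
    return counts


def operators_using(rows, chemical):
    """Return the sorted names of operators that reported a given chemical."""
    return sorted({row["operator"] for row in rows if row["chemical"] == chemical})


if __name__ == "__main__":
    # Print one line per operator with its chemical tally.
    for operator, tally in sorted(chemicals_by_operator(records).items()):
        print(operator, dict(tally))
    # List every operator that reported benzene.
    print(operators_using(records, "benzene"))
```

The hard part is not the counting. It is getting the rows in the first place, which is exactly what PDF-only downloads prevent.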

(I have XP/Office on a separate box that uses the same monitors/keyboard but sharing data is problematic.)
