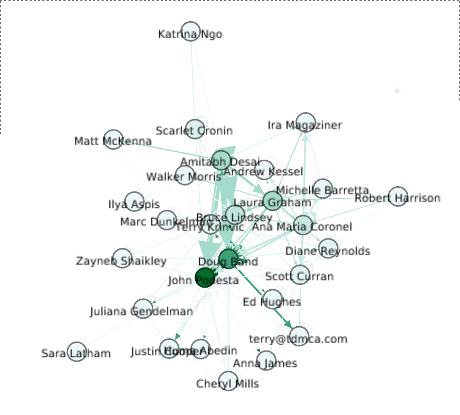

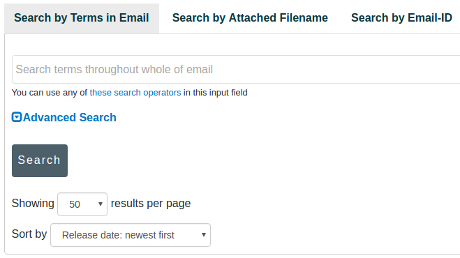

Just in time for Christmas, CrowdStrike published:

Use of Fancy Bear Android Malware in Tracking of Ukrainian Field Artillery Units

Anti-Russian Propaganda

The cover art reminds me of the “Better Dead than Red” propaganda from deep inside the Cold War.

Compare that with an anti-communist poster from 1953:

Anonymous, [ANTI-COMMUNIST POSTER SHOWING RUSSIAN SOLDIER AND JOSEPH STALIN STANDING OVER GRAVES IN FOREGROUND; CANNONS AND PEOPLE MARCHING TO SIBERIA IN BACKGROUND] (1953) courtesy of Library of Congress [LC-USZ62-117876].

Notice any similarities? Sixty-three years separate those two images and yet the person who produced the second one would immediately recognize the first one. And vice versa.

Apparently, Judy Woodruff, who interviewed Dmitri Alperovitch, co-founder of CrowdStrike, and Thomas Rid, a professor at King’s College London, for “Security company releases new evidence of Russian role in DNC hack” (PBS Fake News Hour), didn’t bother to look at the cover of the report her “interview” covered.

I’m not commenting on Judy’s age, but the resemblance to 1950s and 1960s anti-communist propaganda would be obvious to anyone in her graduating class.

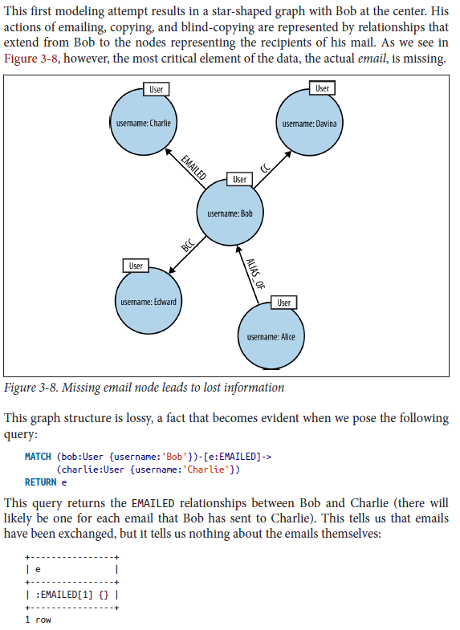

Evidence or Rather the Lack of Evidence

Leaving aside Judy’s complete failure to recognize the cover as anti-Russian propaganda, let’s compare the “evidence” Judy discusses with Alperovitch:

JUDY WOODRUFF: Dmitri Alperovitch, let me start with you. What is this new information?

DMITRI ALPEROVITCH, CrowdStrike: Well, this is an interesting case we’ve uncovered actually all the way in Ukraine where Ukraine artillerymen were targeted by the same hackers who were called Fancy Bear, that targeted the DNC, but this time, they were targeting their cell phones to understand their location so that the Russian military and Russian artillery forces can actually target them in the open battle.

JUDY WOODRUFF: So, this is Russian military intelligence who got hold of information about the weapons, in essence, that the Ukrainian military was using, and was able to change it through malware?

DMITRI ALPEROVITCH: Yes, essentially, one Ukraine officer built this app for his Android phone that he gave out to his fellow officers to control the settings for the artillery pieces that they were using, and the Russians actually hacked that application, put their malware in it and that malware reported back the location of the person using the phone.

JUDY WOODRUFF: And so, what’s the connection between that and what happened to the Democratic National Committee?

DMITRI ALPEROVITCH: Well, the interesting is that it was the same variant of the same malicious code that we have seen at the DNC. This was a phone version. What we saw at the DNC was personal computers, but essentially, it was the same source used by this actor that we call Fancy Bear.

And when you think about, well, who would be interested in targeting Ukraine artillerymen in eastern Ukraine who has interest in hacking the Democratic Party, Russia government comes to find, but specifically, Russian military that would have operational over forces in the Ukraine and would target these artillerymen.

JUDY WOODRUFF: So, just quickly, in the sense, these are like cyber fingerprints? Is that what we’re talking about?

DMITRI ALPEROVITCH: Essentially the DNA of this malicious code that matches to the DNA that we saw at the DNC.

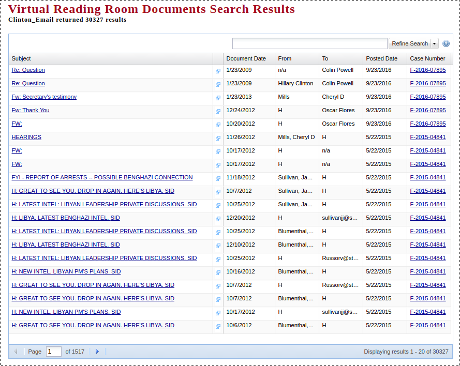

That may sound compelling, at least until you read the CrowdStrike report. Unlike Judy/PBS, I include a link so you can review it for yourself: Use of Fancy Bear Android Malware in Tracking of Ukrainian Field Artillery Units.

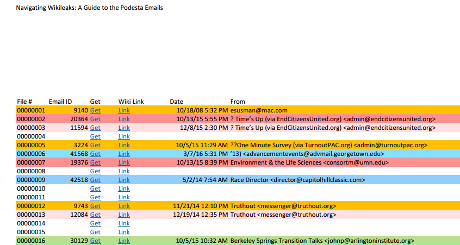

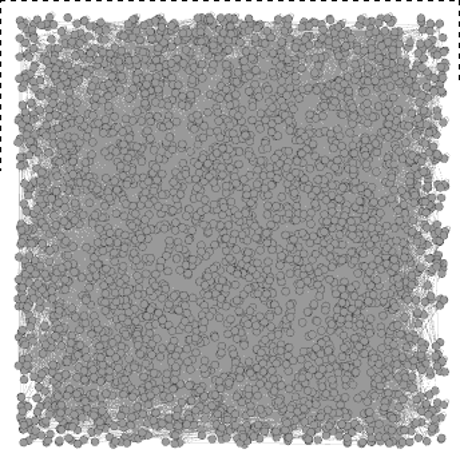

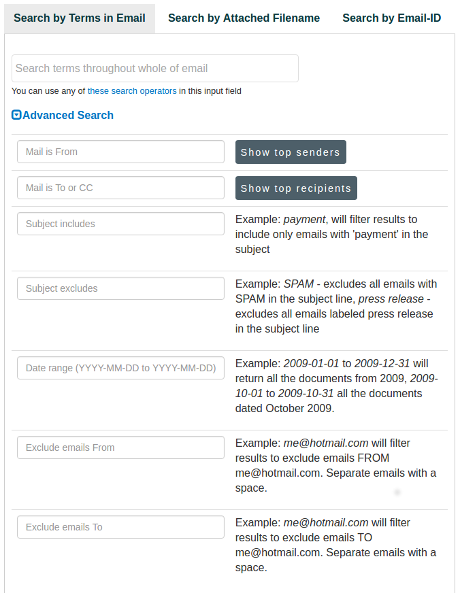

The report consists of a series of unnumbered pages. In order:

- Cover page: the anti-Russian artwork
- Key Points: conclusions without evidence (1 page)
- Background: repetition of conclusions (1 page)
- Timelines: no real relationship to the question of malware (2 pages)
- Timeline of Events: start of prose that might contain “evidence” (6 pages)

OK, let’s take:

the Russians actually hacked that application, put their malware in it and that malware reported back the location of the person using the phone.

as an example.

Contrary to his confidence in the interview, page 7 of the report says:

…

Crowdstrike has discovered indications that as early as 2015 FANCY BEAR likely developed X-Agent applications for the iOS environment, targeting “jailbroken” Apple mobile devices. The use of the X-Agent implant in the original Попр-Д30.apk application appears to be the first observed case of FANCY BEAR malware developed for the Android mobile platform. On 21 December 2014 the malicious variant of the Android application was first observed in limited public distribution on a Russian language, Ukrainian military forum. A late 2014 public release would place the development timeframe for this implant sometime between late-April 2013 and early December 2014.

…

I’m sorry, but do you see any evidence in “…indications…” and/or “likely developed…”?

It restates, in different words, what you saw in the Key Points and Background; otherwise, it is simply a repetition of CrowdStrike’s conclusions.

That’s OK if you already agree with CrowdStrike’s conclusions, I suppose, but it should be deeply unsatisfying for a news reporter.

Judy Woodruff should have asked something like:

I understand your report says Fancy Bear is connected with this malware but you don’t state any facts on which you base that conclusion. Is there another report our listeners can review for those details?

If you see that question in the transcript, ping me. I missed it.

What About Calling the NSA?

If Woodruff had even a passing acquaintance with Clifford Stoll’s The Cuckoo’s Egg (tracing a hacker from a Berkeley computer to a home in Germany), she could have asked:

Thirty years ago, Clifford Stoll wrote in The Cuckoo’s Egg about tracking a hacker from a computer in Berkeley to his home in Germany. CrowdStrike claims to have caught the hackers “red-handed.”

The internet has grown more complicated in thirty years, and tracking has become more difficult. Why didn’t CrowdStrike ask the NSA for help in tracking those hackers?

I didn’t see that question being asked. Did you?

Tracking internet traffic is beyond the means of CrowdStrike, but several nation-states are rumored to sift backbone traffic every day.

Factual Confusion and Catastrophe at CrowdStrike

The most appalling part of the CrowdStrike report is its admixture of alleged fact, speculation, and wishful thinking.

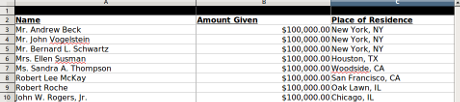

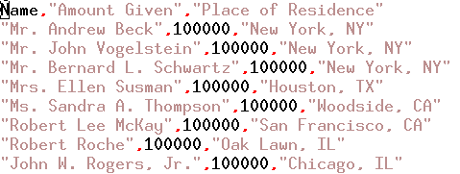

Consider its assessment of the spread and effectiveness of the alleged malware (without more evidence, I would not even concede that it exists):

1. CrowdStrike assesses that Попр-Д30.apk was potentially used through 2016 by at least one artillery unit operating in eastern Ukraine. (page 6)
2. Open-source reporting indicates losses of almost 50% of equipment in the last 2 years of conflict amongst Ukrainian artillery forces and over 80% of D-30 howitzers were lost, far more than any other piece of Ukrainian artillery (page 8)
3. A malware-infected Попр-Д30.apk application probably could not have provided all the necessary data required to directly facilitate the types of tactical strikes that occurred between July and August 2014. (page 8)
4. The X-Agent Android variant does not exhibit a destructive function and does not interfere with the function of the original Попр-Д30.apk application. Therefore, CrowdStrike Intelligence has assessed that the likely role of this malware is strategic in nature. (page 9)
5. Additionally, a study provided by the International Institute of Strategic Studies determined that the weapons platform bearing the highest losses between 2013 and 2016 was the D-30 towed howitzer.[11] It is possible that the deployment of this malware infected application may have contributed to the high-loss nature of this platform. (page 9)

Judy Woodruff and her listeners don’t have to be military experts to realize that the claim of “one artillery unit” (#1) is hard to reconcile with the loss of “over 80% of D-30 howitzers” (#2). Nor do the claims that the malware was of “strategic” value (#3, #4) sit well with the “high-loss” nature described in #5.

The so-called “report” by CrowdStrike is a repetition of conclusions drawn from evidence (alleged to exist) whose nature and scope are concealed from the reader.

Conclusion

However badly Clinton supporters want to believe in Russian hacking of the DNC, this report offers nothing of the kind. It creates the illusion of evidence that deceives only those already primed to accept its conclusions.

Unless and until CrowdStrike releases real evidence, logs, malware (including prior malware and how it was obtained), etc., this must be filed under “fake news.”