BinaryPig: Scalable Static Binary Analysis Over Hadoop (Guest post at Cloudera: Telvis Calhoun, Zach Hanif, and Jason Trost of Endgame)

From the post:

Over the past three years, Endgame received 40 million samples of malware equating to roughly 19TB of binary data. In this, we’re not alone. McAfee reports that it currently receives roughly 100,000 malware samples per day and received roughly 10 million samples in the last quarter of 2012. Its total corpus is estimated to be about 100 million samples. VirusTotal receives between 300,000 and 600,000 unique files per day, and of those roughly one-third to half are positively identified as malware (as of April 9, 2013).

This huge volume of malware offers both challenges and opportunities for security research, especially applied machine learning. Endgame performs static analysis on malware in order to extract feature sets used for performing large-scale machine learning. Since malware research has traditionally been the domain of reverse engineers, most existing malware analysis tools were designed to process single binaries or multiple binaries on a single computer and are unprepared to confront terabytes of malware simultaneously. There is no easy way for security researchers to apply static analysis techniques at scale; companies and individuals that want to pursue this path are forced to create their own solutions.

Our early attempts to process this data did not scale well with the increasing flood of samples. As the size of our malware collection increased, the system became unwieldy and hard to manage, especially in the face of hardware failures. Over the past two years we refined this system into a dedicated framework based on Hadoop so that our large-scale studies are easier to perform and are more repeatable over an expanding dataset.

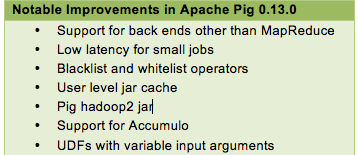

To address this problem, we created an open source framework, BinaryPig, built on Hadoop and Apache Pig (utilizing CDH, Cloudera’s distribution of Hadoop and related projects) and Python. It addresses many issues of scalable malware processing, including dealing with increasingly large data sizes, improving workflow development speed, and enabling parallel processing of binary files with most pre-existing tools. It is also modular and extensible, in the hope that it will aid security researchers and academics in handling ever-larger amounts of malware.

…

For more details about BinaryPig’s architecture and design, read our paper from Black Hat USA 2013 or check out our presentation slides. BinaryPig is an open source project under the Apache 2.0 License, and all code is available on GitHub.
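To give a feel for the kind of per-sample static feature extraction the post describes, here is a minimal sketch in Python using only the standard library. It is not BinaryPig's code: the function names `static_features` and `extract_all` are hypothetical, and the chosen features (size, SHA-256, byte entropy, and a check for the "MZ" magic bytes that begin DOS/PE executables) are my own assumptions about what a simple feature set might contain.

```python
import hashlib
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of the byte distribution, in bits per byte."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def static_features(data: bytes) -> dict:
    """Hypothetical feature extractor: inspects the bytes, never executes them."""
    return {
        "size": len(data),
        "sha256": hashlib.sha256(data).hexdigest(),
        "entropy": shannon_entropy(data),
        # DOS/PE executables begin with the two bytes "MZ".
        "looks_like_pe": data[:2] == b"MZ",
    }


def extract_all(samples: dict) -> dict:
    """Map the extractor over {name: bytes}; each sample is independent,
    which is what makes this kind of work easy to spread across a cluster."""
    return {name: static_features(blob) for name, blob in samples.items()}


if __name__ == "__main__":
    demo = {"zeros.bin": b"\x00" * 64, "fake.exe": b"MZ" + bytes(range(256))}
    for name, features in extract_all(demo).items():
        print(name, features)
```

Because every sample is processed independently, the same function could in principle be run as a map step over millions of files, which is the shape of problem the post says BinaryPig hands to Hadoop and Pig.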

You may have heard the rumor that storing more than seven (7) days of food marks you as a terrorist in the United States.

Be forewarned: Doing Massive Malware Analysis May Make You A Terrorist Suspect.

The “storing more than seven (7) days of food” rumor originated with Rand Paul (R-Kentucky).

http://www.youtube.com/watch?feature=player_embedded&v=X2N1z9zJ20k

The Community Against Terrorism FBI flyer, assuming the pointers I found are accurate, says nothing about how many days of food you have on hand.

Rather it says:

Make bulk purchases of items to include:

…

Meals Ready to Eat

That’s an example of using small data analysis to disprove a rumor.

Unless you are an anthropologist, I would not rely on data from C-SPAN2.