2nd International Electronic Conference on Remote Sensing

From the webpage:

We are very pleased to announce that the 2nd International Electronic Conference on Remote Sensing (ECRS-2) will be held online, between 22 March and 5 April 2018.

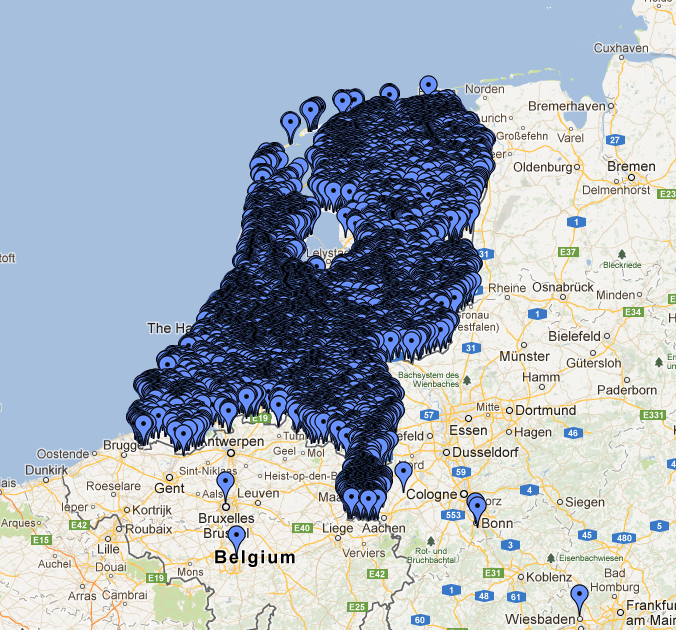

Today, remote sensing is already recognised as an important tool for monitoring our planet and assessing the state of our environment. By providing a wealth of information that is used to make sound decisions on key issues for humanity such as climate change, natural resource monitoring and disaster management, it changes our world and affects the way we think.

Nevertheless, it is very inspirational that we continue to witness a constant growth of amazing new applications, products and services in different fields (e.g. archaeology, agriculture, forestry, environment, climate change, natural and anthropogenic hazards, weather, geology, biodiversity, coasts and oceans, topographic mapping, national security, humanitarian aid) which are based on the use of satellite and other remote sensing data. This growth can be attributed to the following: large number (larger than ever before) of available platforms for data acquisition, new sensors with improved characteristics, progress in computer technology (hardware, software), advanced data analysis techniques, and access to huge volumes of free and commercial remote sensing data and related products.

Following the success of the 1st International Electronic Conference on Remote Sensing (http://sciforum.net/conference/ecrs-1), ECRS-2 aims to cover all recent advances and developments related to this exciting and rapidly changing field, including innovative applications and uses.

We are confident that participants of this unique multidisciplinary event will have the opportunity to get involved in discussions on theoretical and applied aspects of remote sensing that will contribute to shaping the future of this discipline.

ECRS-2 (http://sciforum.net/conference/ecrs-2) is hosted on sciforum, the platform developed by MDPI for organising electronic conferences and discussion groups, and is supported by Section Chairs and a Scientific Committee comprised of highly reputable experts from academia.

It should be noted that there is no cost for active participation and attendance of this virtual conference. Experts from different parts of the world are encouraged to submit their work and take the exceptional opportunity to present it to the remote sensing community.

…

I have a less generous view of remote sensing, seeing it used to further exploit and degrade the environment, to manipulate regulatory processes, and generally to disadvantage those not skilled in its use.

Being aware of the latest developments in remote sensing is a first step towards developing your ability to question, defend and even use remote sensing data for your own ends.

ECRS-2 (http://sciforum.net/conference/ecrs-2) is a great opportunity to educate yourself about remote sensing. Enjoy!

While electronic conferences lack the social immediacy of physical gatherings, one wonders why more data technologies aren’t holding electronic conferences. Thoughts?