Eli Pariser has started “If you were Facebook, how would you reduce the influence of fake news?” on Google Docs.

Out of the now seventeen pages of suggestions, I haven’t noticed any that promise a revenue stream to Facebook.

I view ideas to filter “false news” and/or “hate speech” that don’t generate revenue for Facebook as non-starters. I suspect Facebook does as well.

Here is a broad sketch of how Facebook can monetize “false news” and “hate speech,” all while shaping Facebook timelines to diverse expectations.

Monetizing “false news” and “hate speech”

Facebook creates user-defined filters for timelines. Filters can block other Facebook accounts (and any material from them), or block content by origin, by word and, I would suggest, by regex.
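To make the idea concrete, here is a minimal sketch of what such a filter might look like. Everything in it is hypothetical: the `Post` and `TimelineFilter` names, their fields, and the `apply_filter` helper are illustrative assumptions, not any actual Facebook API.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical timeline item; not a real Facebook data structure."""
    author: str   # account that posted the item
    origin: str   # domain the content came from
    text: str     # visible text of the post


@dataclass
class TimelineFilter:
    """Hypothetical user-defined filter with the four block types."""
    blocked_accounts: set = field(default_factory=set)
    blocked_origins: set = field(default_factory=set)   # lowercase domains
    blocked_words: set = field(default_factory=set)     # lowercase words
    blocked_patterns: list = field(default_factory=list)  # regex strings

    def blocks(self, post: Post) -> bool:
        # Block by account: drops everything from that account.
        if post.author in self.blocked_accounts:
            return True
        # Block by origin of the content.
        if post.origin.lower() in self.blocked_origins:
            return True
        # Block by whole word, case-insensitively.
        words = set(re.findall(r"\w+", post.text.lower()))
        if words & self.blocked_words:
            return True
        # Block by regex, searched anywhere in the text.
        return any(
            re.search(pattern, post.text, re.IGNORECASE)
            for pattern in self.blocked_patterns
        )


def apply_filter(timeline, flt):
    """Return only the posts the filter does not block."""
    return [post for post in timeline if not flt.blocks(post)]
```

A user would build one `TimelineFilter`, and the timeline they see would be `apply_filter(timeline, their_filter)`. Nothing about anyone else's timeline changes.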

User-defined filters apply only to that account and can be shared with twenty other Facebook users.

To share a filter with more than twenty other Facebook users, Facebook charges an annual fee, scaled on the number of shares.
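As a rough sketch of the pricing rule, assuming a simple linear scale: the twenty-user free tier comes from the proposal above, while the function name `annual_share_fee` and the per-share rate are placeholders I made up for illustration. Facebook could just as easily use tiers or some other curve.

```python
FREE_SHARE_LIMIT = 20  # from the proposal: sharing with up to twenty users is free


def annual_share_fee(shares: int, fee_per_extra_share: float = 1.0) -> float:
    """Annual fee for sharing a filter with `shares` other users.

    The linear scale and the default rate are assumptions; the proposal
    only says the fee is scaled on the number of shares.
    """
    if shares < 0:
        raise ValueError("shares must be non-negative")
    # Only shares beyond the free limit are billed.
    extra_shares = max(0, shares - FREE_SHARE_LIMIT)
    return extra_shares * fee_per_extra_share
```

Under these assumptions a filter shared with twenty users or fewer costs nothing, and each user past twenty adds one unit of the per-share rate to the annual bill.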

Unlike the many posts on “false news” and “hate speech,” acting as a filter for others isn’t free beyond twenty users.

Selling Subscriptions to Facebook Filters

Organizations can sell subscriptions to their filters. Facebook, which controls the authorization of the filters, contracts for a percentage of the subscription fee.

Pro tip: I would not invoke Facebook filters from the Washington Post and New York Times at the same time. It is likely they exclude each other as news sources.

Advantages of Monetizing Hate Speech and False News

First and foremost for Facebook, it gets out of the game of satisfying every point of view. Completely. Users are free to define as narrow or as broad a point of view as they desire.

If you see something you don’t like, disagree with, etc., don’t complain to Facebook, complain to your Facebook filter provider.

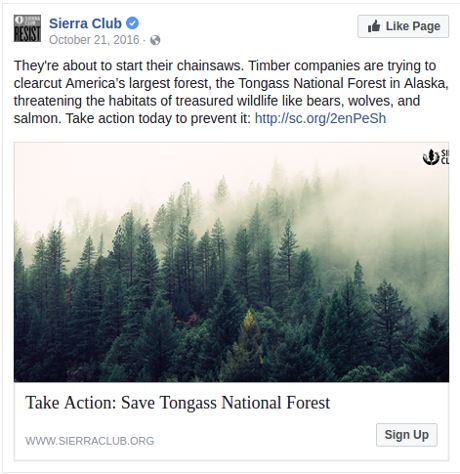

That alone will expose the hidden agenda behind most, perhaps not all, of the “false news” filtering advocates. They aren’t concerned with what they are seeing on Facebook but they are very concerned with deciding what you see on Facebook.

For would-be filterers of what other people see, that privilege is not free beyond twenty other Facebook users. That sets this proposal apart from the many in Eli’s document, which offer about as many definitions of “false news” as there are proposals.

It is difficult to imagine a privilege people would be more ready to pay for than the right to attempt to filter what other people see. Churches, social organizations, local governments, corporations, you name them and they will be lining up to create filter lists.

The financial beneficiary of the “drive to filter for others” is of course Facebook, but one could argue the filter owners profit by spreading their worldview. As for the unfortunates who follow them, well, they get what they get.

Commercialization of Facebook filters, that is, selling subscriptions to Facebook filters, creates a new genre of economic activity and yet another revenue stream for Facebook. (That’s two up to this point, if you are keeping score.)

It isn’t hard to imagine the Economist, Forbes, professional clipping services, etc., creating a natural extension of their filtering activities onto Facebook.

Conclusion: Commercialization or Unfunded Work Assignments

Preventing/blocking “hate speech” and “false news” for free has been, is and always will be a failure.

Changing Facebook infrastructure isn’t free. Creating revenue streams from preventing/blocking “hate speech” and “false news” gives Facebook an incentive to make the necessary changes and gives people an incentive to build filters from which they can profit.

Not to mention that filtering enables everyone, including the alt-right, alt-left and the sane people in between, to create the Facebook of their dreams and not be subject to the Facebook desired by others.

Finally, it gets Facebook and Mark Zuckerberg out of the fantasy island approach where they are assigned unpaid work by others. See the New York Times, “Mark Zuckerberg Is in Denial.” (It’s another “hit” piece by Zeynep Tufekci.)

If you know Mark Zuckerberg, please pass this along to him.