As you may recall from Clinton/Podesta Emails – Towards A More Complete Graph (Part 2), I didn’t check whether “|” occurred in the extracted email subject lines, so when I tried to create node lists using “|” as a separator, it failed.

That happens. More than many people are willing to admit.

In the meantime, a new dump of emails has arrived, so I created the new DKIM-incomplete-podesta-1-22.txt.gz file. Which means picking a new separator to use for the resulting file.

Advice: Check your proposed separator against the data file before using it. I forgot; you shouldn’t.

My new separator? |/|

Which I checked against the file to make sure there would be no conflicts.
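One way to run that kind of check, as a minimal sketch: count the lines that contain each candidate separator as a fixed string. The two sample lines and the helper name count_sep are made up for illustration; in practice you would point it at the real extract.

```shell
# Hypothetical sample data standing in for the extracted subject lines.
sample=$(mktemp)
printf '%s\n' \
  'Re: Nov 30 / Future Plans / Etc.!' \
  'FW: A | B' > "$sample"

# grep -F treats the pattern as a literal string, not a regex.
# grep -c prints 0 but exits non-zero when nothing matches, hence "|| true".
count_sep() { grep -cF -- "$1" "$2" || true; }

pipe_hits=$(count_sep '|' "$sample")
new_hits=$(count_sep '|/|' "$sample")
echo "lines containing |   : $pipe_hits"
echo "lines containing |/| : $new_hits"
rm -f "$sample"
```

A count of zero means the candidate does not collide with anything in the data.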

The sed commands to remove < and > are the same as in Part 2.

Sigh, back to failure land, again.

Just as one sample:

awk 'FS="|/|" { print $7}' test.me

where test.me is:

9991 00013434.eml|/|False|/|2015-11-21 17:15:25-05:00|/|Eryn Sepp eryn.sepp@gmail.com|/|John Podesta john.podesta@gmail.com|/|Re: Nov 30 / Future Plans / Etc.!|/|8A6B3E93-DB21-4C0A-A548-DB343BD13A8C@gmail.com

returns:

Future Plans

I also checked that with gawk and nawk, with the same result.

For some unknown (to me) reason, all three are treating the first “/” in field 6 (by my count) as a separator, along with the second “/” in that field.

To test that theory, what do you think { print $8 } will return?

You’re right!

Etc.!|

So with the “|/|” separator, I’m going to have 9 or more fields, varying with how many “/” characters occur in the subject line.

🙁

That’s not going to work.
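A likely explanation: awk treats any FS longer than one character as an extended regular expression, and in an extended regular expression “|” means alternation. Read that way, “|/|” is “nothing, or /, or nothing”, so in practice the only thing it matches is the “/”. A minimal sketch of one workaround, assuming you want to keep “|/|”: put each pipe in a bracket expression so it is taken literally, and set the separator with -F so it is in place before the first record is read.

```shell
line='9991 00013434.eml|/|False|/|2015-11-21 17:15:25-05:00|/|Eryn Sepp eryn.sepp@gmail.com|/|John Podesta john.podesta@gmail.com|/|Re: Nov 30 / Future Plans / Etc.!|/|8A6B3E93-DB21-4C0A-A548-DB343BD13A8C@gmail.com'

# [|] is a bracket expression holding a literal pipe, so the separator
# regex matches only the exact three characters |/|.
subject=$(printf '%s\n' "$line" | awk -F'[|]/[|]' '{ print $6 }')
msgid=$(printf '%s\n' "$line" | awk -F'[|]/[|]' '{ print $7 }')
echo "$subject"
echo "$msgid"
```

With the pipes made literal, the slashes inside the subject stay in field 6 and the Message-Id lands in field 7.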

OK, so I toss the 10+ MB DKIM-complete-podesta-1-22.txt.gz into Emacs, whose regex treatment I trust, and change “|/|” to “@@@@@” and save that file as DKIM-complete-podesta-1-22-03.txt.
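If you would rather not open the file in an editor, the same substitution can be sketched with sed. This is an alternative to the Emacs step, not what was run here, and the one-line sample is made up for illustration.

```shell
# Hypothetical shortened record using the |/| separator.
old='9999 00013746.eml|/|False|/|Re: Tomorrow'

# s#...#...#g uses # as the delimiter so the / needs no escaping;
# in a basic regular expression a bare | is a literal pipe.
new=$(printf '%s\n' "$old" | sed 's#|/|#@@@@@#g')
echo "$new"
```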

Another sanity check, which got us into all this trouble last time:

awk 'FS="@@@@@" { print $7}' podesta-1-22-03.txt | grep @ | wc -l

returns 36504, which plus the 16 files I culled as failures, equals 36520, the number of files in the Podesta 1-22 release.

Recall that all message-ids contain an @ sign, so getting the correct answer on the number of files gives us confidence the file is ready for further processing.
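A slightly stricter version of that sanity check, as a sketch: count the records whose field 7 contains an @ and, separately, the records that do not have exactly 7 fields. The three sample records are invented, and the last one is deliberately broken. Note that in a POSIX-conforming awk an FS assigned in the pattern position only applies from the next record on, so the first line may be split on whitespace; -F avoids that.

```shell
# Hypothetical three-record sample; the last record lacks a Message-Id.
sample=$(mktemp)
printf '%s\n' \
  '1 a.eml@@@@@False@@@@@d@@@@@f@@@@@t@@@@@Subject one@@@@@id1@example.com' \
  '2 b.eml@@@@@True@@@@@d@@@@@f@@@@@t@@@@@Re: x / y@@@@@id2@example.com' \
  '3 c.eml@@@@@False@@@@@d@@@@@f@@@@@t@@@@@No message id here' > "$sample"

# -F sets the separator before the first record is read.
report=$(awk -F'@@@@@' '
  NF != 7   { bad++ }   # wrong number of fields
  $7 ~ /@/  { ok++ }    # field 7 looks like a Message-Id
  END       { printf "%d with message-id, %d malformed\n", ok, bad }
' "$sample")
echo "$report"
rm -f "$sample"
```

On the real file, a malformed count above zero would point at records worth inspecting before building node lists.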

Apologies for it taking this much prose to go so little a distance.

Our fields (numbered for reference) are:

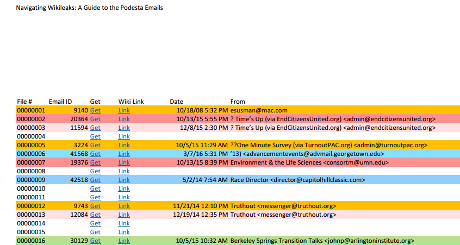

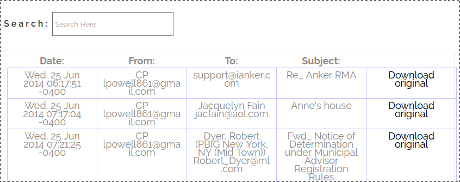

ID – 1 | Verified – 2 | Date – 3 | From – 4 | To – 5 | Subject – 6 | Message-Id – 7

Our first task for the node list (Clinton/Podesta Emails – Towards A More Complete Graph (Part 1)) was to capture the emails themselves.

Using Message-Id (field 7) as the identifier and Subject (field 6) as its label.

We are about to encounter another problem but let’s walk through it.

An example of what we are expecting:

CAC9z1zL9vdT+9FN7ea96r+Jjf2=gy1+821u_g6VsVjr8U2eLEg@mail.gmail.com;"Knox Knotes";

CAKM1B-9+LQBXr7dgE0pKke7YhQC2dZ2akkgmSbRFGHUx-0NNPg@mail.gmail.com;"Re: Tomorrow";

We have the Message-Id with a closing “;”, followed by the Subject, surrounded in double quote marks and also terminated by a “;”.

FYI: Mixing single and double quotes in awk is a real pain. I struggled with it but then was reminded I can declare variables:

-v dq='"'

which allows me to do this:

awk -F'@@@@@' -v dq='"' '{ print $7 ";" dq $6 dq ";" }' podesta-1-22-03.txt

The awk variable trick will save you considerable puzzling over escape sequences and the like.

Ah, now we are to the problem I mentioned above.

In the part 1 post I mentioned that while:

CAC9z1zL9vdT+9FN7ea96r+Jjf2=gy1+821u_g6VsVjr8U2eLEg@mail.gmail.com;"Knox Knotes";

CAKM1B-9+LQBXr7dgE0pKke7YhQC2dZ2akkgmSbRFGHUx-0NNPg@mail.gmail.com;"Re: Tomorrow";

works,

but having:

CAC9z1zL9vdT+9FN7ea96r+Jjf2=gy1+821u_g6VsVjr8U2eLEg@mail.gmail.com;"Knox Knotes";https://wikileaks.org/podesta-emails/emailid/9998;

CAKM1B-9+LQBXr7dgE0pKke7YhQC2dZ2akkgmSbRFGHUx-0NNPg@mail.gmail.com;"Re: Tomorrow";https://wikileaks.org/podesta-emails/emailid/9999;

with Wikileaks links is more convenient for readers.

As you may recall, the last two lines read:

9998 00022160.eml@@@@@False@@@@@2015-06-23 23:01:55-05:00@@@@@Jerome Tatar jerry@TatarLawFirm.com@@@@@Jerome Tatar Jerome jerry@tatarlawfirm.com@@@@@Knox Knotes@@@@@CAC9z1zL9vdT+9FN7ea96r+Jjf2=gy1+821u_g6VsVjr8U2eLEg@mail.gmail.com

9999 00013746.eml@@@@@False@@@@@2015-04-03 01:14:56-04:00@@@@@Eryn Sepp eryn.sepp@gmail.com@@@@@John Podesta john.podesta@gmail.com@@@@@Re: Tomorrow@@@@@CAKM1B-9+LQBXr7dgE0pKke7YhQC2dZ2akkgmSbRFGHUx-0NNPg@mail.gmail.com

Which means in addition to printing Message-Id and Subject as fields one and two, we need to split ID on the space and use the result to create the URL back to Wikileaks.
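That step might look something like the following. It is a sketch, not the finished node-file script: the variable names base and id are made up, and it assumes the ID field is always the WikiLeaks number, a single space, then the .eml file name.

```shell
rec='9999 00013746.eml@@@@@False@@@@@2015-04-03 01:14:56-04:00@@@@@Eryn Sepp eryn.sepp@gmail.com@@@@@John Podesta john.podesta@gmail.com@@@@@Re: Tomorrow@@@@@CAKM1B-9+LQBXr7dgE0pKke7YhQC2dZ2akkgmSbRFGHUx-0NNPg@mail.gmail.com'

node=$(printf '%s\n' "$rec" | awk -F'@@@@@' -v dq='"' \
  -v base='https://wikileaks.org/podesta-emails/emailid/' '{
    split($1, id, " ")   # id[1] = WikiLeaks email id, id[2] = .eml file name
    print $7 ";" dq $6 dq ";" base id[1] ";"
}')
echo "$node"
```

A subject that itself contains a double quote or a “;” would still need escaping before the line is safe to import, which this sketch does not attempt.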

It’s late so I am going to leave you with DKIM-incomplete-podesta-1-22.txt.gz. This is complete save for 16 files that failed to parse. Will repost tomorrow with those included.

I have the first node file script working and that will form the basis for the creation of the edge lists.

PS: Look forward to running awk files tomorrow. It makes a number of things easier.