Roll call votes in the US House of Representatives are a staple of local, state and national news. If you go looking for the “official” version, what you find is as boring as your 5th grade civics class.

Trigger Warning: Boring and Minimally Informative Page Produced By Following Link: Final Vote Results For Roll Call 705.

Take a deep breath and load the page. It will open in a new browser tab. Boring. Yes? (You were warned.)

It is the recent roll call vote to fund the US government, take another slice of privacy from citizens, and make a number of other dubious policy choices. (Everything after the first comma depending upon your point of view.)

Whatever your politics though, you have to agree this is sub-optimal presentation, even for a government document.

This is no accident. Sans the header, you will find the identical presentation of this very roll call vote at page H10696, Congressional Record for December 18, 2015 (pdf).

It is disappointing that so much XML, XSLT, XQuery, etc., has been wasted duplicating non-informative print formatting. Or should I say less-informative formatting than is possible with XML?

Once the data is in XML, legend has it, users can transform that XML in ways more suited to their purposes and not those of the content providers.

I say “legend has it,” because we all know if content providers had their way, web navigation would be via ads and not bare hyperlinks. You want to see the next page? You must select the ad + hyperlink, waiting for the ad to clear before the resource appears.

I can summarize my opinion about content provider control over information legally delivered to my computer: Screw that!

If a content provider enables access to content, I am free to transform that content into speech or graphics, add information, take away information; in short, do anything that my imagination desires and my skill enables.

Let’s take the roll call vote in the House of Representatives, Final Vote Results For Roll Call 705.

Just under the title you will read:

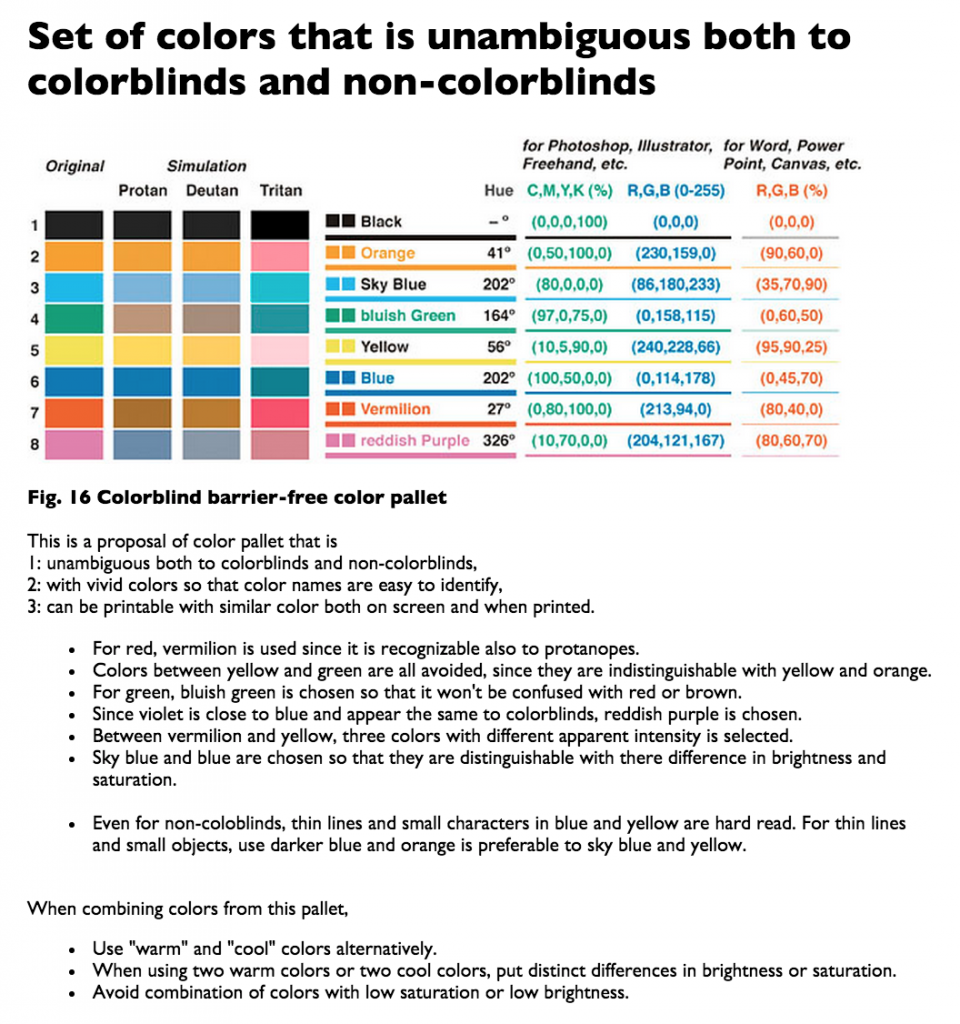

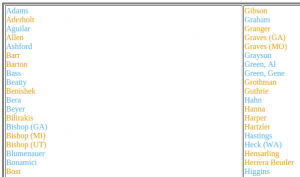

(Republicans in roman; Democrats in italic; Independents underlined)

Boring.

For a bulk display of voting results, we can do better than that.

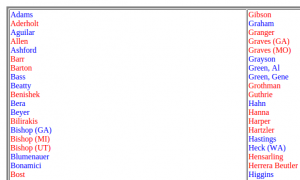

What if we had small images to identify the respective parties? Here are some candidates (sic) for the Republicans:

Of course we would have to reduce them to icon size, but XML processing is rarely just XML processing. Nearly every project includes some other skill set as well.

Which one do you think looks more neutral? 😉

It would certainly be more colorful and, depending upon your inclinations, more fun to play about with than the difference between roman and italic. Yes?
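To make the icon idea concrete, here is a minimal sketch in Python (not XQuery, which comes later). Everything in it is an assumption for illustration: the party codes `R`, `D` and `I`, the icon file names, the 16 pixel size and the `render_member` function are all hypothetical, not something taken from the Clerk's files.

```python
from html import escape

# Hypothetical mapping from a party code to a label and a small icon file.
# Neither the codes nor the file names come from the House data; adjust to taste.
PARTY_ICONS = {
    "R": ("Republican", "icons/republican.png"),
    "D": ("Democrat", "icons/democrat.png"),
    "I": ("Independent", "icons/independent.png"),
}


def render_member(name, party):
    """Return an HTML fragment: a party icon followed by the member's name."""
    label, src = PARTY_ICONS.get(party, ("Unknown", "icons/unknown.png"))
    return '<img src="{}" alt="{}" width="16" height="16"/> {}'.format(
        escape(src, quote=True), escape(label, quote=True), escape(name)
    )


if __name__ == "__main__":
    print(render_member("Member A", "R"))
    print(render_member("Member B", "D"))
```

The `alt` text keeps the party readable for anyone who cannot see the icon, which roman versus italic never managed.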

Presentation of the data in http://clerk.house.gov/evs/2015/roll705.xml is only one of the possibilities that XQuery offers. Follow along and offer your suggestions for changes, additions and modifications.

First steps:

In the browser tab with Final Vote Results For Roll Call 705, use Ctrl-U to view the page source. First notice that the boring web presentation is controlled by http://clerk.house.gov/evs/vote.xsl.

Copy and paste http://clerk.house.gov/evs/vote.xsl into a new browser tab and press Return. The resulting xsl:stylesheet is responsible for generating the original page, from the vote totals to the column presentation of the results.
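If you would rather not eyeball the page source, the stylesheet reference can be pulled out programmatically. The Python sketch below assumes the XML file points at vote.xsl through an `xml-stylesheet` processing instruction, which is the usual mechanism; the sample document is a hypothetical stand-in, not a copy of roll705.xml, and `stylesheet_href` is a name I made up.

```python
import re
from xml.dom import minidom

# Hypothetical stand-in for the top of a roll call file. The assumption is
# that the real file names its stylesheet in an xml-stylesheet instruction.
SAMPLE = (
    '<?xml version="1.0"?>\n'
    '<?xml-stylesheet type="text/xsl" href="http://clerk.house.gov/evs/vote.xsl"?>\n'
    "<rollcall-vote/>"
)


def stylesheet_href(xml_text):
    """Return the href of the first xml-stylesheet instruction, or None."""
    doc = minidom.parseString(xml_text)
    for node in doc.childNodes:
        if (
            node.nodeType == node.PROCESSING_INSTRUCTION_NODE
            and node.target == "xml-stylesheet"
        ):
            # The instruction's data is plain text that looks like attributes.
            match = re.search(r'href="([^"]*)"', node.data)
            if match:
                return match.group(1)
    return None


if __name__ == "__main__":
    print(stylesheet_href(SAMPLE))
```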

Pay particular attention to the generation of totals from the <vote-data> element and its children. That generation is powered by these lines in vote.xsl:

<xsl:apply-templates select="/rollcall-vote/vote-metadata"/>

<!-- Create total variables based on counts. -->

<xsl:variable name="y" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='Yea'])"/>

<xsl:variable name="a" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='Aye'])"/>

<xsl:variable name="yeas" select="$y + $a"/>

<xsl:variable name="nay" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='Nay'])"/>

<xsl:variable name="no" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='No'])"/>

<xsl:variable name="nays" select="$nay + $no"/>

<xsl:variable name="nvs" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='Not Voting'])"/>

<xsl:variable name="presents" select="count(/rollcall-vote/vote-data/recorded-vote/vote[text()='Present'])"/>

<br/>

(Not entirely, I omitted the purely formatting stuff.)
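For readers who want to check the counting logic outside a browser, here is a rough Python equivalent of those variables. It is a sketch, not the XQuery version: the sample document is a hypothetical miniature that follows only the element paths visible in vote.xsl, the `legislator` element with its `party` attribute is my assumption about the data, and `tally` is an invented name.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical miniature roll call. Only the rollcall-vote/vote-data/
# recorded-vote/vote path is taken from vote.xsl; the rest is assumed.
SAMPLE = """<rollcall-vote>
  <vote-metadata/>
  <vote-data>
    <recorded-vote><legislator party="R">Member A</legislator><vote>Yea</vote></recorded-vote>
    <recorded-vote><legislator party="D">Member B</legislator><vote>Aye</vote></recorded-vote>
    <recorded-vote><legislator party="R">Member C</legislator><vote>Nay</vote></recorded-vote>
    <recorded-vote><legislator party="D">Member D</legislator><vote>No</vote></recorded-vote>
    <recorded-vote><legislator party="I">Member E</legislator><vote>Present</vote></recorded-vote>
    <recorded-vote><legislator party="R">Member F</legislator><vote>Not Voting</vote></recorded-vote>
  </vote-data>
</rollcall-vote>"""


def tally(xml_text):
    """Count votes the way vote.xsl does: Yea + Aye and Nay + No are summed."""
    root = ET.fromstring(xml_text)
    counts = Counter(
        (vote.text or "").strip()
        for vote in root.findall("./vote-data/recorded-vote/vote")
    )
    return {
        "yeas": counts["Yea"] + counts["Aye"],
        "nays": counts["Nay"] + counts["No"],
        "present": counts["Present"],
        "not_voting": counts["Not Voting"],
    }


if __name__ == "__main__":
    print(tally(SAMPLE))
```

A single pass with a `Counter` replaces the six separate `count()` calls, but the pairing of Yea with Aye and Nay with No mirrors the stylesheet.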

For tomorrow I will be working on a more “visible” way to identify political party affiliation and “borrowing” the count code from vote.xsl.

Enjoy!

You may be wondering what XQuery has to do with topic maps? Well, if you think about it, every time we select, aggregate, etc., data, we are making choices based on notions of subject identity.

That is, we think the data we are manipulating represents some subjects and/or information about some subjects that we find sensible (for some unstated reason) to put together for others to read.

The first step towards a topic map, however, is the putting of information together so we can judge what subjects need explicit representation and how we choose to identify them.

Prior topic map work was never explicit about how we get to a topic map. Putting that possibly divisive question behind us, we simply started with topic maps, ab initio.

I was in the car when we took that turn and for the many miles since then. I have come to think that a better starting place is choosing subjects, what we want to say about them and how we wish to say it, so that we have only so much machinery as is necessary for any particular set of subjects.

Some subjects can be identified by IRIs, others by multi-dimensional vectors, still others by unspecified processes of deep learning, etc. Which ones we choose will depend upon the immediate ROI from subject identity and relationships between subjects.

I don’t need triples, for instance, to recognize natural languages to a sufficient degree of accuracy. Unnecessary triples, topics or associations are just padding. If you are on a per-triple contract, they make sense; otherwise, not.

A long way of saying that subject identity lurks just underneath the application of XQuery and we will see where it is useful to call subject identity to the fore.