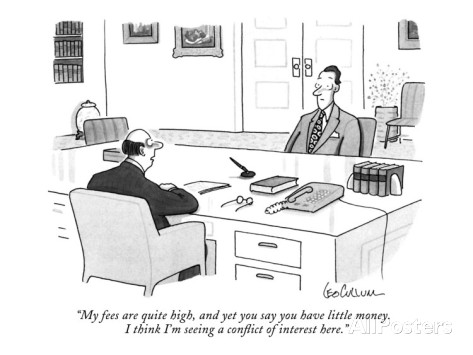

The Kardashian index: a measure of discrepant social media profile for scientists by Neil Hall.

Abstract:

In the era of social media there are now many different ways that a scientist can build their public profile; the publication of high-quality scientific papers being just one. While social media is a valuable tool for outreach and the sharing of ideas, there is a danger that this form of communication is gaining too high a value and that we are losing sight of key metrics of scientific value, such as citation indices. To help quantify this, I propose the ‘Kardashian Index’, a measure of discrepancy between a scientist’s social media profile and publication record based on the direct comparison of numbers of citations and Twitter followers.
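The abstract describes the index only as a "direct comparison" of citations and Twitter followers. In the paper itself, Hall fits the expected number of followers for a given total citation count C as F_c = 43.3 C^0.32. The Kardashian Index is then the ratio of actual followers F_a to that expectation, K = F_a / F_c, and the paper playfully labels anyone with K > 5 a "Science Kardashian". Below is a minimal sketch of that arithmetic. It assumes the constants 43.3 and 0.32 as reported in the paper, and the function names are hypothetical, chosen only for illustration.

```python
def expected_followers(citations: int) -> float:
    """Followers Hall's fit predicts for a total citation count: F_c = 43.3 * C**0.32."""
    if citations <= 0:
        # The fit predicts zero followers at C = 0, so the ratio below would be undefined.
        raise ValueError("citations must be positive")
    return 43.3 * citations ** 0.32


def kardashian_index(followers: int, citations: int) -> float:
    """K = actual followers / expected followers (F_a / F_c)."""
    if followers < 0:
        raise ValueError("followers must be non-negative")
    return followers / expected_followers(citations)


def is_science_kardashian(k: float, threshold: float = 5.0) -> bool:
    """Hall's tongue-in-cheek cutoff: K above 5 marks a 'Science Kardashian'."""
    return k > threshold


if __name__ == "__main__":
    # Hypothetical scientist: 1,000 citations and 5,000 Twitter followers.
    k = kardashian_index(followers=5000, citations=1000)
    print(f"K = {k:.2f}, Science Kardashian: {is_science_kardashian(k)}")
```

Because the exponent 0.32 is well below 1, expected followers grow slowly with citations. A tenfold increase in citations raises F_c only by a factor of about 2, so a large Twitter following quickly outpaces what a publication record "justifies".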

A playful note proposing a new index based on a scientist’s popularity on Twitter and their citation record. Not to be taken too seriously, but not to be ignored altogether. The media asking Neil deGrasse Tyson, an astrophysicist and TV scientist, for his opinion about GMOs is a good example of popularity’s influence.

Tyson sees no difference between modern GMOs and selective breeding, which has been practiced for thousands of years. He overlooks that selective breeding requires an existing trait to breed toward. In other words, selective breeding has a natural limit built into the process.

For example, there are no naturally fluorescent zebrafish, so you can’t selectively breed fluorescent ones.

On the other hand, with genetic modification, you can produce a variety of fluorescent zebrafish known as GloFish.

Genetic modification lacks the natural boundary present in selective breeding.

With that fact in mind, I think everyone would agree that selective breeding and genetic modification aren’t the same thing. Similar but different.

A subtle distinction that eludes Kardashian TV scientist Neil deGrasse Tyson (Twitter, 2.26M followers).

I first saw this in a tweet by Steven Strogatz.