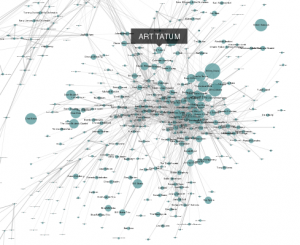

Graph data management has seen a resurgence in recent years because of an increasing realization that querying and reasoning about the structure of the interconnections between entities can lead to interesting and deep insights into a variety of phenomena. The application domains where graph or network analytics are regularly applied include social media, finance, communication networks, biological networks, and many others. Despite much work on the topic, graph data management is still a nascent field with many open questions. At the same time, I feel that the research in the database community is fragmented and somewhat disconnected from application domains, and many important questions are not being investigated in our community. This blog post is an attempt to summarize some of my thoughts on this topic, and what exciting and important research problems I think are still open.

At its simplest, graph data management is about managing, querying, and analyzing a set of entities (nodes) and interconnections (edges) between them, both of which may have attributes associated with them. Although much of the research has focused on homogeneous graphs, most real-world graphs are heterogeneous, and the entities and the edges can usually be grouped into a small number of well-defined classes.
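To make the data model concrete, here is a minimal sketch of a heterogeneous graph with attributes on both nodes and edges. The class names (`Node`, `Edge`, `PropertyGraph`), the labels, and the sample data are all illustrative assumptions, not taken from any particular graph database.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    label: str                                  # node class, e.g. "Person"
    attrs: dict = field(default_factory=dict)   # arbitrary node attributes


@dataclass
class Edge:
    src: str
    dst: str
    label: str                                  # edge class, e.g. "FOLLOWS"
    attrs: dict = field(default_factory=dict)   # arbitrary edge attributes


class PropertyGraph:
    """Hypothetical in-memory store: nodes by id, outgoing edges by source."""

    def __init__(self):
        self.nodes = {}
        self.out_edges = defaultdict(list)

    def add_node(self, node_id, label, **attrs):
        self.nodes[node_id] = Node(node_id, label, attrs)

    def add_edge(self, src, dst, label, **attrs):
        # Reject edges whose endpoints have not been added yet.
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must exist before adding an edge")
        self.out_edges[src].append(Edge(src, dst, label, attrs))

    def nodes_of_class(self, label):
        # Grouping nodes by class is what makes the graph "heterogeneous".
        return [n for n in self.nodes.values() if n.label == label]


g = PropertyGraph()
g.add_node("alice", "Person", age=34)
g.add_node("bob", "Person", age=29)
g.add_node("acme", "Company", sector="finance")
g.add_edge("alice", "bob", "FOLLOWS", since=2019)
g.add_edge("alice", "acme", "WORKS_AT", role="analyst")
```

Here the two node classes (`Person`, `Company`) and two edge classes (`FOLLOWS`, `WORKS_AT`) stand in for the "small number of well-defined classes" that real-world graphs tend to have.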

Graph processing tasks can be broadly divided into a few categories. (1) First, we may want to execute standard SQL queries, especially aggregations, by treating the node and edge classes as relations. (2) Second, we may have queries focused on the interconnection structure and its properties; examples include subgraph pattern matching (and variants), keyword proximity search, reachability queries, counting or aggregating over patterns (e.g., triangle/motif counting), grouping nodes based on their interconnection structures, path queries, and others. (3) Third, there is usually a need to execute basic or advanced graph algorithms on the graphs or their subgraphs, e.g., bipartite matching, spanning trees, network flow, shortest paths, traversals, finding cliques or dense subgraphs, graph bisection/partitioning, etc. (4) Fourth, there are “network science” or “graph mining” tasks where the goal is to understand the interconnection network, build predictive models for it, and/or identify interesting events or different types of structures; examples of such tasks include community detection, centrality analysis, influence propagation, ego-centric analysis, modeling evolution over time, link prediction, frequent subgraph mining, and many others [New10]. Much research is still being done on developing new techniques of this kind; however, there is also increasing interest in applying the more mature techniques to very large graphs and doing so in real time. (5) Finally, many general-purpose machine learning and optimization algorithms (e.g., logistic regression, stochastic gradient descent, ADMM) can be cast as graph processing tasks in appropriately constructed graphs, allowing us to solve problems like topic modeling, recommendations, matrix factorization, etc., on very large inputs [Low12].
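As a rough illustration of how different the first three categories look in practice, the sketch below runs one task from each on a toy undirected graph: a relational-style aggregation over a node class, a triangle count, and a hop-distance computation via breadth-first search. The data and the function names (`count_triangles`, `hop_distance`) are made up for this example, and these naive in-memory versions say nothing about how a real system would scale them.

```python
from collections import Counter, deque
from itertools import combinations

# Toy data (assumed for illustration): a "people" node class and friendships.
people = [
    {"id": "a", "city": "Paris"},
    {"id": "b", "city": "Paris"},
    {"id": "c", "city": "Berlin"},
    {"id": "d", "city": "Berlin"},
    {"id": "e", "city": "Oslo"},   # isolated node, no edges
]
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d")]

# Undirected adjacency sets.
adj = {p["id"]: set() for p in people}
for u, v in edges:
    adj[u].add(v)
    adj[v].add(u)

# (1) Treat the node class as a relation:
#     SELECT city, COUNT(*) FROM people GROUP BY city
people_per_city = Counter(p["city"] for p in people)


# (2) Structural query: count triangles, each one once at its smallest vertex.
def count_triangles(adj):
    count = 0
    for u in adj:
        for v, w in combinations(sorted(adj[u]), 2):
            if u < v and w in adj[v]:
                count += 1
    return count


# (3) Graph algorithm: unweighted shortest path length via BFS.
def hop_distance(adj, src, dst):
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        if u == dst:
            return dist[u]
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return None  # dst is not reachable from src


triangles = count_triangles(adj)
a_to_d = hop_distance(adj, "a", "d")
a_to_e = hop_distance(adj, "a", "e")
```

The aggregation touches only node attributes, while the other two depend entirely on the edge structure, which is part of why a single system has trouble serving all of these workloads well.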

Prior work on graph data management can be roughly divided into work on specialized graph databases and work on large-scale graph analytics, which have largely evolved separately from each other; the former has considered end-to-end data management issues including storage representations, transactions, and query languages, whereas the latter has typically focused on processing specific tasks or types of tasks over large volumes of data. I will discuss those separately, focusing on whether we need “new” systems for graph data management and on open problems.

…

Very much worth a deep, slow read. Despite marketing claims about graph databases, fundamental issues remain to be solved.