Jim Comey, ISIS, and “Going Dark” by Benjamin Wittes.

From the post:

FBI Director James Comey said Thursday his agency does not yet have the capabilities to limit ISIS attempts to recruit Americans through social media.

It is becoming increasingly apparent that Americans are gravitating toward the militant organization by engaging with ISIS online, Comey said, but he told reporters that “we don’t have the capability we need” to keep the “troubled minds” at home.

“Our job is to find needles in a nationwide haystack, needles that are increasingly invisible to us because of end-to-end encryption,” Comey said. “This is the ‘going dark’ problem in high definition.”

Comey said ISIS is increasingly communicating with Americans via mobile apps that are difficult for the FBI to decrypt. He also explained that he had to balance the desire to intercept the communication with broader privacy concerns.

“It is a really, really hard problem, but the collision that’s going on between important privacy concerns and public safety is significant enough that we have to figure out a way to solve it,” Comey said.

Let’s unpack this.

As has been widely reported, the FBI has been busy recently dealing with ISIS threats. There have been a bunch of arrests, both because ISIS has gotten extremely good at inducing self-radicalization in disaffected souls worldwide using Twitter and because of the convergence of Ramadan and the run-up to the July 4 holiday.

…

Just as an empirical matter, phrases like "…ISIS has gotten extremely good at…" should be discarded as noise. You may have heard of the three teenage girls from the UK who "attempted" to join the Islamic State of Iraq and Syria. Taking the "teenage" population of the UK to be those between 10 and 19 years of age, the UK teen population for 2014 was 7,667,000.

Three (3) teens from a population of 7,667,000, roughly one in 2.6 million, doesn't sound like "…extremely good…" recruitment to me.

Moreover, unless the US Constitution has been amended quite recently, as a US citizen I am free to read publications by any organization on the face of the Earth. There are some minor exceptions, such as child pornography, but political speech, which is clearly what Islamic State of Iraq and Syria publications are, receives the highest level of protection under the Constitution.

Unlike the Big Lie statements about Islamic State of Iraq and Syria and social media, there is empirical research on the impact of surveillance on First Amendment rights:

This article brings First Amendment theory into conversation with social science research. The studies surveyed here show that surveillance has certain effects that directly implicate the theories behind the First Amendment, beyond merely causing people to stop speaking when they know they are being watched. Specifically, this article finds that social science research supports the protection of reader and viewer privacy under many of the theories used to justify First Amendment protection.

If the First Amendment serves to foster a marketplace of ideas, surveillance thwarts this purpose by preventing the development of minority ideas. Research indicates that surveillance more strongly affects those who do not yet hold strong views than those who do.

If the First Amendment serves to encourage democratic self-governance, surveillance thwarts this purpose as well. Surveillance discourages individuals with unformed ideas from deviating from majority political views. And if the First Amendment is intended to allow the fullest development of the autonomous self, surveillance interferes with autonomy. Surveillance encourages individuals to follow what they think others expect of them and conform to perceived norms instead of engaging in unhampered self-development.

The quote is from the introduction to: The Conforming Effect: First Amendment Implications of Surveillance, Beyond Chilling Speech by Margot E. Kaminski and Shane Witnov. (Kaminski, Margot E. and Witnov, Shane, The Conforming Effect: First Amendment Implications of Surveillance, Beyond Chilling Speech (January 1, 2015). University of Richmond Law Review, Vol. 49, 2015; Ohio State Public Law Working Paper No. 288. Available at SSRN: http://ssrn.com/abstract=2550385)

The abstract from Kaminski and Witnov reads:

First Amendment jurisprudence is wary not only of direct bans on speech, but of the chilling effect. A growing number of scholars have suggested that chilling arises from more than just a threat of overbroad enforcement — surveillance has a chilling effect on both speech and intellectual inquiries. Surveillance of intellectual habits, these scholars suggest, implicates First Amendment values. However, courts and legislatures have been divided in their understanding of the extent to which surveillance chills speech and thus causes First Amendment harms.

This article brings First Amendment theory into conversation with social psychology to show that not only is there empirical support for the idea that surveillance chills speech, but surveillance has additional consequences that implicate multiple theories of the First Amendment. We call these consequences “the conforming effect.” Surveillance causes individuals to conform their behavior to perceived group norms, even when they are unaware that they are conforming. Under multiple theories of the First Amendment — the marketplace of ideas, democratic self-governance, autonomy theory, and cultural democracy — these studies suggest that surveillance’s effects on speech are broad. Courts and legislatures should keep these effects in mind.

Conformity to the standard US line on Islamic State of Iraq and Syria is the more likely goal of FBI Director James Comey than stopping “successful” Islamic State of Iraq and Syria recruitment over social media.

The article also looks at First Amendment cases, including one that is directly on point for ISIS social media:

The Supreme Court has stated that laws that deter the expression of minority viewpoints by airing the identities of their holders are also unconstitutional. In Lamont, the Court found unconstitutional a requirement that mail recipients write in to request communist literature. The state had an impermissible role in identifying this minority viewpoint and condemning it. The Court reasoned that "any addressee is likely to feel some inhibition in sending for literature which federal officials have condemned as 'communist political propaganda.'" The individual's inhibition stems from the state's obvious condemnation, but also from a fear of social repercussions (by the state as an employer). The Court found that the requirement that a person identify herself as a communist "is almost certain to have a deterrent effect." (I omitted the footnote numbers for ease of reading.)

For more on Lamont (8-0), see: Lamont v. Postmaster General (Wikipedia) and Lamont v. Postmaster General, 381 U.S. 301 (1965), the text of the decision at Justia.

The date of the decision is important as well: 1965. In 1965, China and Russia had a combined population of 842 million people, the vast majority of whom, one assumes, were communists.

Despite the presence of a potential 842 million communists in the world, the Lamont Court found that even chilling access to communist literature was not permitted under the United States Constitution.

Before someone challenges my claim that social media has not been successful for the Islamic State of Iraq and Syria, remember that at best the Islamic State of Iraq and Syria has recruited 30,000 fighters from outside of Syria, with no hard evidence on whether they were motivated by social media or not.

Even assuming the 30,000 were all recruited through social media, how does that compare to the 842 million known communists of 1965?

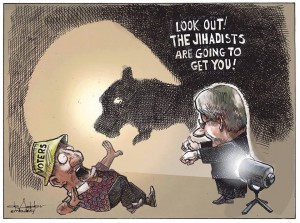

Why is Comey so frightened of a few thousand people? Frightened enough to abridge the freedom of speech of every American, and who knows what he wants to do to non-Americans?

As best I understand the goals of the Islamic State of Iraq and Syria, the overriding one is to have a Muslim government that is not subservient to Western powers. I don’t find that remotely threatening. Whether the Islamic State of Iraq and Syria will be the one to establish such a government is unclear. Governing is far more tedious and difficult than taking territory.

A truly Muslim government would be a far cry from the favoritism and intrigue that has characterized Western relationships with Muslim governments for centuries.

Citizens of the United States are in more danger from the FBI than they ever will be from members of the Islamic State of Iraq and Syria. Keep that in mind when you hear FBI Director James Comey talk about surveillance. The target of that surveillance is you.