Mathematicians Reduce Big Data Using Ideas from Quantum Theory by M. De Domenico, V. Nicosia, A. Arenas, V. Latora.

From the post:

A new technique of visualizing the complicated relationships between anything from Facebook users to proteins in a cell provides a simpler and cheaper method of making sense of large volumes of data.

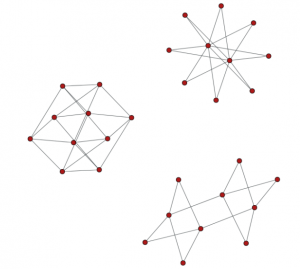

Analyzing the large volumes of data gathered by modern businesses and public services is problematic. Traditionally, relationships between the different parts of a network have been represented as simple links, regardless of how many ways they can actually interact, potentially losing precious information. Only recently a more general framework has been proposed to represent social, technological and biological systems as multilayer networks, piles of ‘layers’ with each one representing a different type of interaction. This approach allows a more comprehensive description of different real-world systems, from transportation networks to societies, but has the drawback of requiring more complex techniques for data analysis and representation.

A new method, developed by mathematicians at Queen Mary University of London (QMUL), and researchers at Universitat Rovira i Virgili in Tarragona (Spain), borrows from quantum mechanics’ well-tested techniques for understanding the difference between two quantum states, and applies them to understanding which relationships in a system are similar enough to be considered redundant. This can drastically reduce the amount of information that has to be displayed and analyzed separately and make it easier to understand.

The new method also reduces computing power needed to process large amounts of multidimensional relational data by providing a simple technique of cutting down redundant layers of information, reducing the amount of data to be processed.

The researchers applied their method to several large publicly available data sets about the genetic interactions in a variety of animals, a terrorist network, scientific collaboration systems, worldwide food import-export networks, continental airline networks and the London Underground. It could also be used by businesses trying to more readily understand the interactions between their different locations or departments, by policymakers understanding how citizens use services or anywhere that there are large numbers of different interactions between things.

You can hop over to Nature for Structural reducibility of multilayer networks, where, if you don’t have an institutional subscription, your options are:

ReadCube: $4.99 to rent, $9.99 to buy, or purchase a PDF for $32.00.

Let me save you some money and suggest you look at:

Layer aggregation and reducibility of multilayer interconnected networks

Abstract:

Many complex systems can be represented as networks composed by distinct layers, interacting and depending on each other. For example, in biology, a good description of the full protein-protein interactome requires, for some organisms, up to seven distinct network layers, with thousands of protein-protein interactions each. A fundamental open question is then how much information is really necessary to accurately represent the structure of a multilayer complex system, and if and when some of the layers can indeed be aggregated. Here we introduce a method, based on information theory, to reduce the number of layers in multilayer networks, while minimizing information loss. We validate our approach on a set of synthetic benchmarks, and prove its applicability to an extended data set of protein-genetic interactions, showing cases where a strong reduction is possible and cases where it is not. Using this method we can describe complex systems with an optimal trade-off between accuracy and complexity.

Both articles have four (4) illustrations. Same four (4) authors. The difference being the second one is at http://arxiv.org. Oh, and it is free for downloading.

I remain concerned by the focus on reducing the complexity of data to fit current algorithms and processing models. That said, there is no denying that such reduction methods have proven to be useful.

The authors neatly summarize my concerns with this outline of their procedure:

The whole procedure proposed here is sketched in Fig. 1 and can be summarised as follows: i) compute the quantum Jensen-Shannon distance matrix between all pairs of layers; ii) perform hierarchical clustering of layers using such a distance matrix and use the relative change of Von Neumann entropy as the quality function for the resulting partition; iii) finally, choose the partition which maximises the relative information gain.
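For readers who want to see the moving parts, here is a rough Python sketch of the three quoted steps. It is my own reading, not the authors’ code, and it rests on several assumptions that are not in the quoted text: each layer is an undirected adjacency matrix over the same node set, the "density matrix" of a layer is its graph Laplacian rescaled to unit trace, the linkage method is average linkage, and the quality function is taken as one minus the mean entropy of the merged layers divided by the entropy of the fully aggregated network. The function names (`density_matrix`, `js_distance`, `reduce_layers`) are hypothetical. The sketch also cuts one fixed dendrogram at every level rather than re-deriving distances after each merge.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import squareform


def density_matrix(adj):
    """Graph Laplacian rescaled to unit trace (assumed stand-in for a density matrix)."""
    adj = np.asarray(adj, dtype=float)
    lap = np.diag(adj.sum(axis=1)) - adj
    return lap / np.trace(lap)


def von_neumann_entropy(rho):
    """-sum(lambda * log2(lambda)) over the non-zero eigenvalues of rho."""
    eig = np.linalg.eigvalsh(rho)
    eig = eig[eig > 1e-12]  # treat 0 * log(0) as 0
    return float(-np.sum(eig * np.log2(eig)))


def js_distance(adj_a, adj_b):
    """Square root of the quantum Jensen-Shannon divergence between two layers."""
    rho, sigma = density_matrix(adj_a), density_matrix(adj_b)
    div = von_neumann_entropy((rho + sigma) / 2) - (
        von_neumann_entropy(rho) + von_neumann_entropy(sigma)
    ) / 2
    return float(np.sqrt(max(div, 0.0)))  # clip tiny negative round-off


def reduce_layers(layers):
    """Return (quality, cluster labels) for the best cut of the layer dendrogram."""
    layers = [np.asarray(layer, dtype=float) for layer in layers]
    n = len(layers)

    # Step i: pairwise distance matrix between layers.
    dist = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            dist[i, j] = dist[j, i] = js_distance(layers[i], layers[j])

    # Step ii: hierarchical clustering (average linkage is an assumption).
    tree = linkage(squareform(dist, checks=False), method="average")
    h_aggregate = von_neumann_entropy(density_matrix(sum(layers)))

    # Step iii: score every cut of the dendrogram and keep the best one.
    best = None
    for k in range(1, n + 1):
        labels = fcluster(tree, t=k, criterion="maxclust")
        merged = [
            sum(layer for layer, c in zip(layers, labels) if c == group)
            for group in np.unique(labels)
        ]
        mean_h = np.mean([von_neumann_entropy(density_matrix(m)) for m in merged])
        quality = 1.0 - mean_h / h_aggregate  # assumed form of the quality function
        if best is None or quality > best[0]:
            best = (float(quality), labels)
    return best
```

Calling `reduce_layers([layer_1, layer_2, layer_3])` with three NumPy adjacency matrices returns the best quality score and an array saying which layers were merged together. Notice how many choices had to be made just to get this far; each one is a place where an assigned metric stands in for the semantics of a layer.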

With my corresponding concerns:

i) The quantum Jensen-Shannon distance matrix presumes a metric distance for its operations, which may or may not reflect the semantics of the layers (other than by simplifying assumption).

ii) The relative change of Von Neumann entropy is a difference measurement based upon an assumed metric, which may or may not represent the underlying semantics of the relationships between layers.

iii) The process concludes by maximizing a difference measurement based upon a metric that has been assigned to the different layers.

Maximizing a difference, based on an entropy calculation that is itself based on an assigned metric, doesn’t fill me with confidence.

I don’t doubt that the technique “works,” but doesn’t that depend upon what you think is being measured?

A question for the weekend: Do you think this is similar to the questions about dividing continuous variables into discrete quantities?