Nancy Baym tweeted:

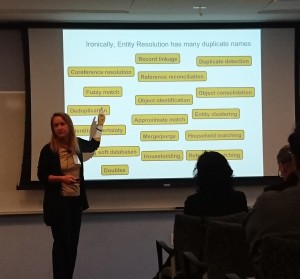

“Ironically, Entity Resolution has many duplicate names” – Lise Getoor

I can’t think of any subject that doesn’t have duplicate names.

Can you?

In a “search driven” environment, not knowing the “duplicate” names for a subject means data loss.

Data loss that could include “smoking gun” data.

Topic mappers have been making that pitch for decades but it never has really caught fire.

I don’t think anyone doubts that data loss occurs, but the gravity of that data loss remains elusive.

For example, let’s take three duplicate names for entity resolution from the slide, duplicate detection, reference reconciliation, coreference resolution.

Supplying all three as quoted strings to CiteSeerX, any guesses on the number of “hits” returned?

As of April 13, 2016:

- duplicate detection – 3,854

- reference reconciliation – 253

- coreference resolution – 3,290

When running the query "duplicate detection" "coreference resolution", only 76 “hits” are returned, meaning that there are only 76 overlapping cases reported in the total of 7,144 for both of those terms separately.

That’s assuming CiteSeerX isn’t shorting me on results due to server load, etc. I would have to cross-check the data itself before I would swear to those figures.

But consider just the raw numbers I report today: duplicate detection – 3,854, coreference resolution – 3,290, with 76 overlapping cases.

That’s two distinct lines of research on the same problem, for the most part, ignoring the other.

What do you think the odds are of duplication of techniques, experiences, etc., spread out over those 7,144 articles?

Instead of you or your client duplicating a known-to-somebody solution, you could be building an enhanced solution.

Well, except for the data loss due to “duplicate names” in a search environment.

And that you would have to re-read all the articles in order to find which technique or advancement was made in each article.

Multiply that by everyone who is interested in a subject and its a non-trivial amount of effort.

How would you like to avoid data loss and duplication of effort?